Efficient Proxmox Cluster Deployment through Automation with Ansible

Manually setting up and managing servers is usually time-consuming, error-prone, and difficult to scale. This becomes especially evident during large-scale rollouts, when building complex infrastructures, or during the migration from other virtualization environments. In such cases, traditional manual processes quickly reach their limits. Consistent automation offers an effective and sustainable solution to these challenges.

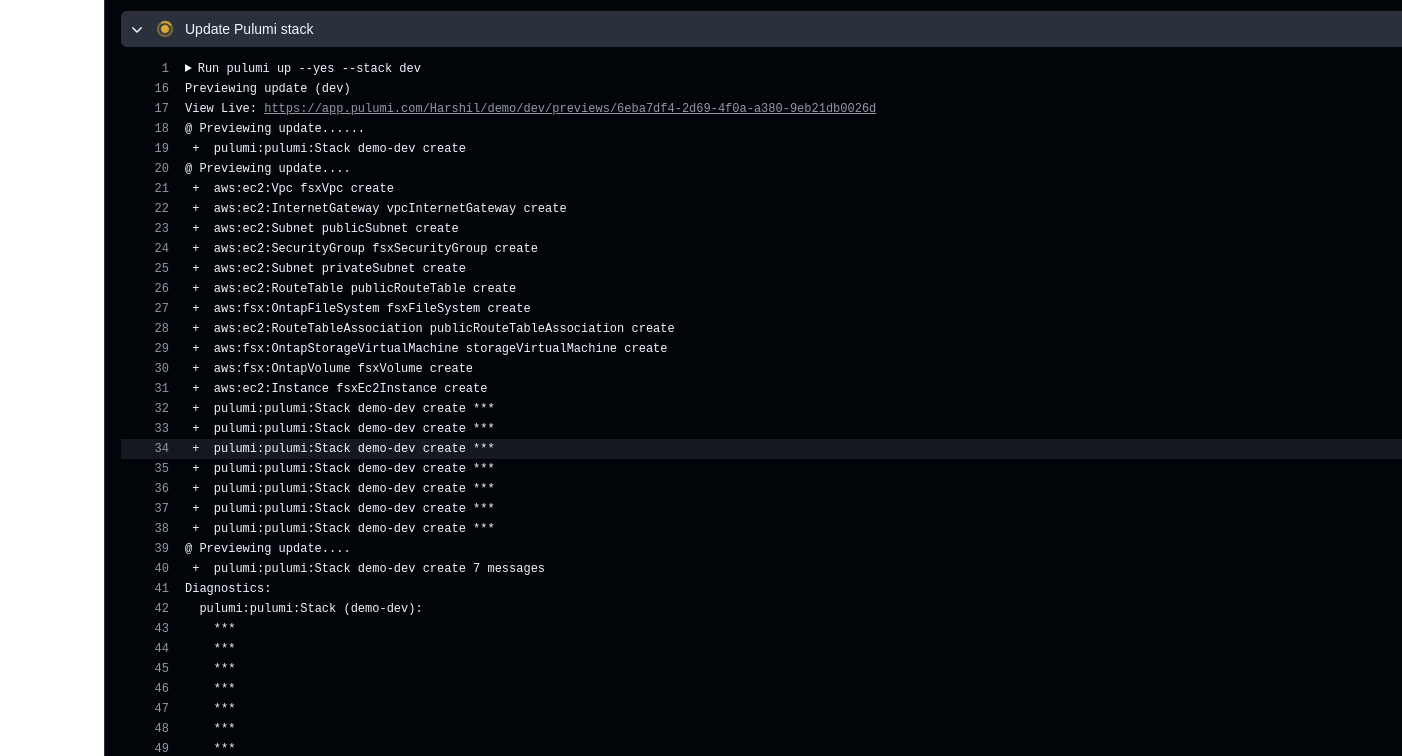

To enable fully automated deployment of Proxmox clusters, our team member, known in the open-source community under the alias gyptazy, has developed a dedicated Ansible module called proxmox_cluster. This module handles all the necessary steps to initialize a Proxmox cluster and add additional nodes. It has been officially included in the upstream Ansible Community Proxmox collection and is available for installation via Ansible Galaxy starting with version 1.1.0. As a result, the manual effort required for cluster deployment is significantly reduced. Further insights can be found in his blog post titled “How My BoxyBSD Project Boosted the Proxmox Ecosystem”.

By adopting this solution, not only can valuable time be saved, but a solid foundation for scalable and low-maintenance infrastructure is also established. Unlike fragile task-based approaches that often rely on Ansible’s shell or command modules, this solution leverages the full potential of the Proxmox API through a dedicated module. As a result, it can be executed in various scopes and does not require SSH access to the target systems.

This automated approach makes it possible to deploy complex setups efficiently while laying the groundwork for stable and future-proof IT environments. Such environments can be extended at a later stage and are built according to a consistent and repeatable structure.

Benefits

Using the proxmox_cluster module for Proxmox cluster deployment brings several key advantages to modern IT environments. The focus lies on secure, flexible, and scalable interaction with the Proxmox API, improved error handling, and simplified integration across various use cases:

- Use of the native Proxmox API

- Full support for the Proxmox authentication system

- API Token Authentication support

- No SSH access required

- Usable in multiple scopes:

- From a dedicated deployment host

- From a local system

- Within the context of the target system itself

- Improved error handling through API abstraction

Ansible Proxmox Module: proxmox_cluster

The newly added proxmox_cluster module in Ansible significantly simplifies the automated provisioning of Proxmox VE clusters. With just a single task, it enables the seamless creation of a complete cluster, reducing complexity and manual effort to a minimum.

Creating a Cluster

Creating a cluster now requires only a single Ansible task that uses the proxmox_cluster module:

- name: Create a Proxmox VE Cluster
  community.proxmox.proxmox_cluster:
    state: present
    api_host: proxmoxhost
    api_user: root@pam
    api_password: password123
    api_ssl_verify: false
    link0: 10.10.1.1
    link1: 10.10.2.1
    cluster_name: "devcluster"

Afterwards, the cluster is created and additional Proxmox VE nodes can join the cluster.

Joining a Cluster

Additional nodes can now also join the cluster using a single task. When combined with the use of a dynamic inventory, it becomes easy to iterate over a list of nodes from a defined group and add them to the cluster within a loop. This approach enables the rapid deployment of larger Proxmox clusters in an efficient and scalable manner.

- name: Join a Proxmox VE Cluster
  community.proxmox.proxmox_cluster:
    state: present
    api_host: proxmoxhost
    api_user: root@pam
    api_password: password123
    master_ip: "{{ primary_node }}"
    fingerprint: "{{ cluster_fingerprint }}"
    cluster_name: "devcluster"

Cluster Join Information

In order for a node to join a Proxmox cluster, the cluster’s join information is generally required. To avoid defining this information manually for each individual cluster, this step can also be automated. As part of this feature, a new module called proxmox_cluster_join_info has been introduced. It retrieves the necessary data automatically via the Proxmox API and makes it available for further use in the automation process.

- name: List existing Proxmox VE cluster join information
  community.proxmox.proxmox_cluster_join_info:
    api_host: proxmox1
    api_user: root@pam
    api_password: "{{ password | default(omit) }}"
    api_token_id: "{{ token_id | default(omit) }}"
    api_token_secret: "{{ token_secret | default(omit) }}"
  register: proxmox_cluster_join

Conclusion

While automation in the context of virtualization technologies is often focused on the provisioning of guest systems or virtual machines (VMs), this approach demonstrates that automation can be applied at a much deeper level within the underlying infrastructure. It is also possible to fully automate scenarios in which nodes are initially deployed using a customer-specific image with Proxmox VE preinstalled, and then proceed to automatically create the cluster.

As an official Proxmox partner, we are happy to support you in implementing a comprehensive automation strategy tailored to your environment and based on Proxmox products. You can contact us at any time!


Introduction

If you regularly deal with code that needs extensive internationalization capabilities, chances are that you’ve used functionality from one of the ICU libraries before. Developed by the Unicode Consortium, ICU provides reliable, mature and extensive implementations of all kinds of tools for internationalization and Unicode text operations. Traditionally, there have been two implementations of ICU: ICU4C, implemented in C, and ICU4J, implemented in Java. These libraries have been the gold standard for correct Unicode text handling and i18n for many years. For some years now, however, the Unicode Consortium has also been developing ICU4X, a relatively new implementation in Rust.

The focus of ICU4X is on availability on many platforms and in many programming languages. While older implementations like ICU4C and ICU4J are very mature and at the moment provide more functionality than ICU4X, these libraries have a very large code size and large runtime memory footprint, making them infeasible to use in resource constrained environments like in web browsers or on mobile or embedded devices. ICU4X takes care to reduce library code size and provides additional facilities to optimize the code size of both the library itself and the Unicode data shipped with an application.

In this article, I will provide an overview of what ICU4X can do and how to do it. If you’ve worked with other ICU implementations before, many of the operations will probably feel familiar. If, on the other hand, you have never come into contact with ICU, this article should give you a good introduction to performing various Unicode text operations using ICU4X.

Prerequisites

I will be showing a lot of code examples on how to use ICU4X in Rust. While it should not be strictly necessary to understand Rust to understand the basics of what’s going on, some familiarity with the language will definitely help to understand the finer details. If you’re unfamiliar with Rust and want to learn more, I recommend The Rust Book as an introduction.

Throughout the examples I’ll be referring to various functions and types from ICU4X without showing their signatures in full detail. Feel free to keep the API documentation open alongside this article to look up the types of any functions mentioned.

Test setup

If you want to run the examples for yourself, I recommend setting up a cargo project with the appropriate dependency:

$ cargo new --bin icu4x-blog
$ cd icu4x-blog
$ cargo add icu

This initializes a basic Cargo.toml and src/main.rs. Now you can paste any example code into the generated main function inside main.rs and run your examples using cargo run:

$ cargo run

Finished `dev` profile [unoptimized + debuginfo] target(s) in 0.02s

Running `target/debug/icu4x-blog`

Hello, world!

For now this only outputs the default “Hello, world!” message generated by cargo. So let’s go on to add our own examples.

Locales

The behavior of some of ICU4X’s operations depends on linguistic or cultural context. When it does, we need to specify which linguistic or cultural background we want. We do this in the form of so-called locales. At its core, a locale is identified by a short string naming a language and a region. Locales usually look something like “en-US” for American English, or “de-AT” for the German language as spoken in Austria.

Locales don’t do anything exciting on their own. They only tell other operations how to behave, so construction is basically the only thing we do with Locales. There are two main ways to construct a locale. We can use the locale! macro to construct and validate a static Locale like this:

let en_us = icu::locid::locale!("en-US");

println!("{en_us}");

Or we can try to parse a locale from a string at runtime:

let de_at = "de-AT".parse::<icu::locid::Locale>().unwrap();

println!("{de_at}");

Note that parsing a locale can fail on invalid inputs. This is encoded by the parse function returning a Result<Locale, ParserError>. In the example above we use unwrap to ignore the possibility of an error, which will panic on actual invalid inputs:

let invalid_locale = "Invalid!".parse::<icu::locid::Locale>().unwrap();

println!("{invalid_locale}");

Taken together, these examples will produce the following output:

$ cargo run
[...]
en-US
de-AT
thread 'main' panicked at src/main.rs:8:67:
called `Result::unwrap()` on an `Err` value: InvalidLanguage
note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace
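In real code you will usually want to handle the error instead of calling unwrap. The following is a minimal sketch using only the standard library. The helper name parse_or is hypothetical, and it is generic over any type implementing FromStr, so the demo uses u32 to stay runnable without extra crates. Since the examples above parse a Locale via str::parse, the same helper should also accept a Locale together with a fallback locale, but that combination is an assumption and not tested here.

```rust
use std::str::FromStr;

/// Parses `input` as a `T` and returns `fallback` if parsing fails.
/// `parse_or` is an illustrative helper, not part of ICU4X.
fn parse_or<T: FromStr>(input: &str, fallback: T) -> T {
    input.parse::<T>().unwrap_or(fallback)
}

fn main() {
    // `u32` stands in for a locale type to keep the sketch dependency-free.
    println!("{}", parse_or("42", 0u32));
    println!("{}", parse_or("Invalid!", 0u32));
}
```

Falling back silently is only one possible policy. Depending on the application, reporting the invalid input to the user may be the better choice.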

In practical scenarios, you will probably want to detect locales dynamically at runtime via some standard mechanism provided by your operating system or some other execution platform. Unfortunately, there is currently no standardized way to detect ICU4X locales from external sources, but progress to implementing such a solution is tracked in this issue.

Now that we’ve looked at how to construct locales, let’s look at some operations that need locales to function.

Collation

The first operation we’re going to look at is collation. You’re probably familiar with the concept of comparing strings lexicographically. In Rust the str type already implements the Ord trait, allowing us easy lexicographic comparisons and sorting of strings. However not all languages and cultures agree on which order letters should be sorted in. As an example, in Germany the letter Ä is usually sorted right after A, while in Sweden the letter Ä is usually sorted after Z. Rust’s standard method of comparing strings does not take these regional differences into account. ICU4X provides us with collation functionality to compare and sort strings with these cultural differences in mind.
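To see why the standard comparison cannot follow either convention, it helps to look at what it actually compares. The sketch below uses only the standard library and assumes nothing about ICU4X. The helper name sort_by_code_point is made up for illustration. Rust compares str values byte by byte, which for UTF-8 is equivalent to comparing code points, so Ä (U+00C4) lands after Z (U+005A) regardless of language.

```rust
/// Sorts strings the way `str`'s `Ord` implementation does: byte-wise,
/// which for UTF-8 is the same as ordering by code point.
fn sort_by_code_point<'a>(mut strings: Vec<&'a str>) -> Vec<&'a str> {
    strings.sort();
    strings
}

fn main() {
    // 'Z' is U+005A and 'Ä' is U+00C4, so "Ä..." sorts after "Z...".
    println!("Z = U+{:04X}, Ä = U+{:04X}", 'Z' as u32, '\u{C4}' as u32);
    println!("{:?}", sort_by_code_point(vec!["\u{C4}bc", "ZYX", "Abc"]));
}
```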

Construction

The first step for doing so is to create a Collator like this:

let de_de = icu::locid::locale!("de-DE");

let collator_de = icu::collator::Collator::try_new(&de_de.into(), Default::default()).unwrap();

The first parameter is the locale we want collation for. Technically, it’s a DataLocale, because that’s what try_new wants. The difference doesn’t need to concern us too much right now. Just know that we can convert a Locale to a DataLocale using .into(). The second parameter is a CollatorOptions structure, which we could use to specify more detailed options for the collation. We won’t look at the individual options here and instead just use the defaults, but check out the API documentation if you’re curious about what you can specify. Finally, we unwrap the Collator, since creating it can fail when no collation data for the given locale can be found. We’ll come back to this possibility later when talking about data handling.

Now that we have one collator for the de-DE locale, let’s build another for Swedish (sv-SE):

let sv_se = icu::locid::locale!("sv-SE");

let collator_sv = icu::collator::Collator::try_new(&sv_se.into(), Default::default()).unwrap();

Usage

Now that we have built some collators, let’s sort some strings with the standard Rust comparison and different locales to see the different results:

let mut strings = ["Abc", "Äbc", "ZYX"];

strings.sort_by(Ord::cmp);

println!("Rust default sorted: {strings:?}");

strings.sort_by(|a, b| collator_de.compare(a, b));

println!("Collated de-DE: {strings:?}");

strings.sort_by(|a, b| collator_sv.compare(a, b));

println!("Collated sv-SE: {strings:?}");

This produces the following output:

$ cargo run
[...]
Rust default sorted: ["Abc", "ZYX", "Äbc"]
Collated de-DE: ["Abc", "Äbc", "ZYX"]
Collated sv-SE: ["Abc", "ZYX", "Äbc"]

As predicted, the German collation sorted the strings differently than the Swedish collation. Incidentally, the default Rust order sorted these specific strings the same as the Swedish collation, though in practice you shouldn’t rely on coincidences like this and always use the correct collation when sorting Strings for display purposes.

Calendars

Sometimes it’s easy to forget, but not all cultures use the same calendar system. And even different cultures sharing the same calendar system might use different formats to represent dates. ICU4X provides support for converting between representations of different calendars and formatting them according to local taste. Besides the Gregorian calendar, popular in most regions of the world, and the ISO calendar often used for technical purposes, many other calendars, such as Japanese, Ethiopian or Indian are supported. However, no functionality for retrieving the current time is currently provided, so in real applications you will have to convert from some other representation first.

Construction

In the next example, we have a known date given as an ISO date, that we want to display in some locale:

let iso_date = icu::calendar::Date::try_new_iso_date(1978, 3, 8).unwrap();

Next we create a DateFormatter:

let local_formatter = icu::datetime::DateFormatter::try_new_with_length(
    &icu::locid::locale!("th").into(),
    icu::datetime::options::length::Date::Medium,
)
.unwrap();

We will use the formatter to format dates into a locale-specific textual representation. During creation we get to pick a locale (or rather a DataLocale again). We’re picking the Thai locale, because unlike most of the world it uses the Buddhist calendar instead of the Gregorian calendar. We also get to pick a format length that gives us some control over the length of the date format. We use the medium length, which uses abbreviated month names if these are available in the locale, or numeric months otherwise.

Formatting

Now we just format the date and print it:

let local_format = local_formatter.format(&iso_date.to_any()).unwrap();

println!("{local_format}");

Which gives us this output:

$ cargo run
[...]
8 มี.ค. 2521

If, like me, you’re not well versed in the Buddhist calendar and Thai month names, this probably won’t tell you much. But that’s exactly the point of using an i18n library like ICU4X. We can use general operations that do the correct thing for any supported locale, without having to understand the intricacies of every specific locale.
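The year in the output above is at least easy to check by hand. As far as I know, the Thai solar calendar counts years in the Buddhist Era, which for modern dates runs 543 years ahead of the Gregorian year count. The sketch below only illustrates that arithmetic. The function name and the constant are made up for this example, and the fixed offset is an assumption that does not hold for historical dates. ICU4X’s calendar conversion remains the right tool for real code.

```rust
/// Assumed offset between the Buddhist Era (Thai solar calendar) and the
/// Gregorian year count. Only meant for modern dates.
const BUDDHIST_ERA_OFFSET: i32 = 543;

/// Illustrative helper, not part of ICU4X.
fn buddhist_era_year(gregorian_year: i32) -> i32 {
    gregorian_year + BUDDHIST_ERA_OFFSET
}

fn main() {
    // 1978 is the year of the ISO date used in the example above.
    println!("{}", buddhist_era_year(1978));
}
```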

When calling format, you have to be careful to pass a date that belongs to a calendar suitable for the locale of the formatter. Even though its parameter type suggests that a date from any calendar can be used, the operation only accepts dates from the ISO calendar or dates from the correct calendar for that locale (i.e. in this example we could also have passed a date that was already represented in the Buddhist calendar). In this case, we used an ISO date, which is always accepted. If you have a date in an entirely different calendar, it gets more complicated: you need to convert your date to the correct target calendar explicitly and then pass it to format. To do so, obtain the needed calendar using AnyCalendar::new_for_locale and perform the conversion using Date::to_calendar.

Normalization

Unicode texts are represented as a sequence of numbers called code points. But not every code point has its own atomic meaning. Some sequences of code points combine into groups to represent more complex characters. Due to this complexity, different sequences of code points can in many cases represent the same sequence of semantic characters. As an example, the letter Ä can be represented as the single code point U+00C4 or as the sequence of code points U+0041 U+0308 (an A followed by a combining diaeresis, i.e. two dots above). This has implications when we want to compare strings for equality. Naively, we might compare strings by checking whether their code points are equal one by one. But then two strings could compare as different because they contain different code points, even though they contain semantically identical characters.
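The two representations of Ä can be inspected with nothing but the standard library. This is a small sketch, not ICU4X functionality, and the helper name code_points is chosen just for this example. It lists the code points of both forms and shows that a plain comparison treats them as different strings.

```rust
/// Lists the code points of a string as numbers.
fn code_points(s: &str) -> Vec<u32> {
    s.chars().map(|c| c as u32).collect()
}

fn main() {
    let precomposed = "\u{00C4}";
    let decomposed = "\u{0041}\u{0308}";
    // Print the code points in hexadecimal together with the UTF-8 size.
    println!("{:X?} ({} bytes)", code_points(precomposed), precomposed.len());
    println!("{:X?} ({} bytes)", code_points(decomposed), decomposed.len());
    println!("equal by code point: {}", precomposed == decomposed);
}
```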

To deal with this situation, ICU4X gives us string normalization. The idea is as follows: before comparing strings to each other, we “normalize” each string. Normalization transforms the string into a normalized representation, thereby ensuring that all strings that are semantically equal also have the same normalized representation. This means that once we have normalized the strings we want to compare, we can simply compare the resulting strings by code point to determine whether the original strings were semantically the same.

Normalization forms

Before we can perform this normalization, we need to understand that there are multiple forms of normalization. These forms are differentiated by two properties. On one axis, they can be composing or decomposing. On the other axis, they can be canonical or compatible.

Composed normalization forms ensure that the normalized form has as few code points as possible, e.g. for the letter Ä the single code point form would be used. Decomposed normalization on the other hand always chooses the representation requiring the most code points available, e.g. for the letter Ä the two code point form would be used. With composed normalization we need less storage space to store the normalized form. However, composed normalization is also usually slower to perform than decomposed normalization, because internally composed normalization first has to run decomposed normalization and then compress the result. As a rule of thumb, it is usually recommended that composed normalization be used when the normalized strings are stored on disk or sent over the network, whereas decomposed normalization should be used when the normalized form is only used internally within an application.

Canonical normalization only considers different code point representations of the same characters to be equal. Compatible normalization goes a step further and considers characters that convey the same meaning, but differ in representation, to be equal. As an example, under compatible normalization the characters “2”, “²” and “②” are all considered equal, whereas under canonical normalization they are different. Compatible normalization can be appropriate when normalizing identifiers such as usernames to detect close-but-different lookalikes.

Taking all of this together, this gives us four different possible forms of normalization:

- NFC: Composing and Canonical

- NFKC: Composing and Compatible

- NFD: Decomposing and Canonical

- NFKD: Decomposing and Compatible

Performing normalization

Once we have decided on a normalization form to use, actually performing the normalization is easy. Here’s an example using NFD normalization:

let string1 = "\u{00C4}";

let string2 = "\u{0041}\u{0308}";

let rust_equal = string1 == string2;

let normalizer = icu::normalizer::DecomposingNormalizer::new_nfd();

let normalized1 = normalizer.normalize(string1);

let normalized2 = normalizer.normalize(string2);

let normalized_equal = normalized1 == normalized2;

println!(
    "1: {string1}, 2: {string2}, rust equal: {rust_equal}, normalized equal: {normalized_equal}"
);

$ cargo run
[...]
1: Ä, 2: Ä, rust equal: false, normalized equal: true

As we can see, string1 and string2 look the same when printed, but the == operator doesn’t consider them equal. Normalizing both strings and comparing the results, however, does yield equality.

NFKD normalization can be used by constructing the normalizer using DecomposingNormalizer::new_nfkd. NFC and NFKC are accessible using ComposingNormalizer::new_nfc and ComposingNormalizer::new_nfkc respectively.

Segmentation

When we look into Unicode texts, we’ll often find that they aren’t only made up of individual code points, but rather of larger constructs consisting of multiple code points, such as words or lines. When processing text, it is often necessary to recognize where the boundaries between these individual pieces are. In ICU4X this process is called segmentation, and it provides us with four different types of segments to recognize: graphemes, words, sentences, and lines. The process of segmenting is very similar for each one, but each of them also has its own quirks, so we’ll look at each of them in turn.

Graphemes

As previously mentioned, some code points combine with other code points thereby gaining a different meaning than each code point would have individually. If we break strings apart between two combined code points, the code points can no longer combine and thus revert to their individual meaning. Here’s an example of such unintentional changes in meaning happening:

let string1 = "\u{61}\u{308}\u{6f}\u{308}\u{75}\u{308}";

let string2 = "stu";

println!("string1: {string1}, string2: {string2}");

let (split1, split2) = string1.split_at(4);

println!("split1: {split1}, split2: {split2}");

println!("combined: {string2}{split2}");

$ cargo run
[...]
string1: äöü, string2: stu
split1: äo, split2: ̈ü
combined: stüü

First, note that the output of split1 and split2 shows that what was previously an ö has now been split into an o and a loose pair of two dots. Even worse: when we combine string2 and split2 in a single output, the dots at the start of split2 combine with the last character of string2 forming an extra “ü” that was never intended to exist.
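It is worth noting why Rust did not stop us here. As far as the standard library is concerned, split_at only requires the index to fall on a code point boundary, and index 4 is one. The sketch below uses only the standard library, with a helper name invented for this example, and lists every index at which Rust would accept a split of string1.

```rust
/// Returns every byte index at which Rust allows a `str` to be split,
/// i.e. every code point boundary including the end of the string.
fn char_boundaries(s: &str) -> Vec<usize> {
    (0..=s.len()).filter(|&i| s.is_char_boundary(i)).collect()
}

fn main() {
    let string1 = "\u{61}\u{308}\u{6f}\u{308}\u{75}\u{308}";
    println!("{:?}", char_boundaries(string1));
}
```

Every index in that list is safe as far as UTF-8 validity goes, but several of them fall between a base letter and its combining mark.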

Graphemes to the rescue

So how do we know where it is safe to split a string without altering the meaning of the characters it contains? For this purpose, Unicode defines the concept of grapheme clusters. A grapheme cluster is a sequence of code points that have a single meaning together, but are unaffected by the meaning of the code points around them. As long as we’re careful to split strings only on the boundaries between grapheme clusters, we can be sure not to inadvertently change the semantics of characters contained in the string. Similarly, when we build a user interface for text editing or text selection, we should be careful to present a single grapheme cluster to the user as a single unbreakable unit.

To find out where the boundaries between grapheme clusters are, ICU4X gives us the GraphemeClusterSegmenter. Let’s look at how it would have segmented our string from earlier:

let string = "\u{61}\u{308}\u{6f}\u{308}\u{75}\u{308}";

println!("string: {string}");

let grapheme_boundaries: Vec<usize> = icu::segmenter::GraphemeClusterSegmenter::new()
    .segment_str(string)
    .collect();

println!("grapheme boundaries: {grapheme_boundaries:?}");

$ cargo run
[...]
string: äöü
grapheme boundaries: [0, 3, 6, 9]

As we can see, the segment_str function returns an iterator over the indices at which boundaries between grapheme clusters are located. Naturally, the first index is always 0 and the last index is always the end of the string. We can also see that the index 4, where we split our string in the last example, was not a boundary between grapheme clusters, and thus our split caused the change in meaning we observed. Had we instead split the string at index 3 or 6, we would not have had the same problem.
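Given such a list of boundaries, a split can be snapped to the nearest safe position. The following sketch is one possible way to do that and is not an ICU4X API. The helper split_at_grapheme is hypothetical, uses only the standard library, and takes the boundaries as a plain slice. The values passed in main are the ones printed by the segmenter above.

```rust
/// Splits `s` at the last grapheme boundary that is not after `wanted`.
/// `boundaries` must be sorted byte indices starting at 0, such as those
/// collected from a grapheme cluster segmenter.
fn split_at_grapheme<'a>(s: &'a str, boundaries: &[usize], wanted: usize) -> (&'a str, &'a str) {
    let index = boundaries
        .iter()
        .copied()
        .take_while(|&b| b <= wanted)
        .last()
        .unwrap_or(0);
    s.split_at(index)
}

fn main() {
    let string = "\u{61}\u{308}\u{6f}\u{308}\u{75}\u{308}";
    // Boundaries as printed in the example above.
    let boundaries = [0, 3, 6, 9];
    // Asking for index 4 snaps back to the boundary at index 3.
    let (head, tail) = split_at_grapheme(string, &boundaries, 4);
    println!("head: {head}, tail: {tail}");
}
```

Rounding down is a design choice made for this sketch. A text editor might prefer rounding to the closest boundary instead.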

Words

Sometimes it is helpful to separate a string into its individual words. For this purpose, we get the aptly named WordSegmenter. So let’s get right into it:

let string = "Hello world";

println!("string: {string}");

let word_boundaries: Vec<usize> = icu::segmenter::WordSegmenter::new_auto()
    .segment_str(string)
    .collect();

println!("word boundaries: {word_boundaries:?}");

$ cargo run
[...]
string: Hello world
word boundaries: [0, 5, 6, 11]

So far this is very similar to the GraphemeClusterSegmenter we’ve seen before. But what if we want the words themselves and not only their boundaries? We can just iterate over windows of two boundaries at a time and slice the original string:

let words: Vec<&str> = word_boundaries
    .windows(2)
    .map(|bounds| &string[bounds[0]..bounds[1]])
    .collect();

println!("words: {words:?}");

$ cargo run
[...]
words: ["Hello", " ", "world"]

This looks better. It gives us the two words we expect. It also gives us the white space between the words. If we do not want that, we can ask the WordSegmenter to tell us whether a given boundary comes after a real word or just after some white space, and filter on that:

let word_boundaries: Vec<(usize, icu::segmenter::WordType)> =
    icu::segmenter::WordSegmenter::new_auto()
        .segment_str(string)
        .iter_with_word_type()
        .collect();

println!("word boundaries: {word_boundaries:?}");

let words: Vec<&str> = word_boundaries
    .windows(2)
    .filter_map(|bounds| {
        let (start, _) = bounds[0];
        let (end, word_type) = bounds[1];
        if word_type.is_word_like() {
            Some(&string[start..end])
        } else {
            None
        }
    })
    .collect();

println!("words: {words:?}");

$ cargo run
[...]
word boundaries: [(0, None), (5, Letter), (6, None), (11, Letter)]
words: ["Hello", "world"]

In case you were wondering why the constructor for WordSegmenter is called new_auto, it’s because there are multiple algorithms for word segmentation to choose from. There are also new_dictionary and new_lstm and not every algorithm works equally well for different writing systems. new_auto is a good choice in the general case, as it automatically picks a good implementation based on the actual data encountered in the string.

Sentences

If we want to break strings into sentences, SentenceSegmenter does just that. There’s not much special to it, so let’s get right into it:

let string = "here is a sentence. This is another sentence.";

println!("string: {string}");

let sentence_boundaries: Vec<usize> = icu::segmenter::SentenceSegmenter::new()
    .segment_str(string)
    .collect();

println!("sentence boundaries: {sentence_boundaries:?}");

let words: Vec<&str> = sentence_boundaries
    .windows(2)
    .map(|bounds| &string[bounds[0]..bounds[1]])
    .collect();

println!("words: {words:?}");

$ cargo run
[...]
string: here is a sentence. This is another sentence.
sentence boundaries: [0, 20, 45]
words: ["here is a sentence. ", "This is another sentence."]

No surprises there, so let’s move on.

Lines

The LineSegmenter identifies boundaries at which strings may be split into multiple lines. Let’s see an example:

let string = "The first line.\nThe\u{a0}second line.";

println!("string: {string}");

let line_boundaries: Vec<usize> = icu::segmenter::LineSegmenter::new_auto()
    .segment_str(string)
    .collect();

println!("line boundaries: {line_boundaries:?}");

let lines: Vec<&str> = line_boundaries
    .windows(2)
    .map(|bounds| &string[bounds[0]..bounds[1]])
    .collect();

println!("lines: {lines:?}");

$ cargo run
[...]
string: The first line.
The second line.
line boundaries: [0, 4, 10, 16, 28, 33]
lines: ["The ", "first ", "line.\n", "The\u{a0}second ", "line."]

This gives us more individual “lines” than we might have anticipated. That’s because the LineSegmenter not only gives us boundaries at line breaks already contained in the string, but also gives us boundaries in places where a soft line break could be placed. This can be very useful if you want to wrap a long string over multiple lines.

If you want to differentiate whether a given boundary is a hard line break contained in the string or just an opportunity for an optional line break, you can inspect the line break property of the character right before the boundary using icu::properties::maps::line_break.
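As a rough illustration of how break opportunities can drive line wrapping, here is a greedy wrapper built on the standard library alone. It is a sketch under several assumptions. The function wrap is hypothetical and not part of ICU4X. Width is approximated by counting chars, which ignores wide and combining characters. A mandatory break is detected only by a trailing '\n' rather than by the line break property. The boundaries passed in main are the ones printed by the LineSegmenter above.

```rust
/// Greedily packs the segments between break opportunities into lines of at
/// most `max_chars` characters. `boundaries` must be sorted byte indices on
/// character boundaries, starting at 0 and ending at `text.len()`.
fn wrap<'a>(text: &'a str, boundaries: &[usize], max_chars: usize) -> Vec<&'a str> {
    let mut lines = Vec::new();
    let mut line_start = 0; // byte index where the current line begins
    let mut line_end = 0; // last break opportunity accepted for this line
    for &boundary in boundaries.iter().skip(1) {
        // Would the line become too long if it extended to this boundary?
        let width = text[line_start..boundary].trim_end().chars().count();
        if width > max_chars && line_end > line_start {
            lines.push(text[line_start..line_end].trim_end());
            line_start = line_end;
        }
        line_end = boundary;
        // A segment ending in a newline forces a break (simplified check).
        if text[line_start..line_end].ends_with('\n') {
            lines.push(text[line_start..line_end].trim_end());
            line_start = line_end;
        }
    }
    // Emit whatever is left after the last forced or chosen break.
    if line_start < text.len() {
        lines.push(text[line_start..].trim_end());
    }
    lines
}

fn main() {
    let string = "The first line.\nThe\u{a0}second line.";
    let boundaries = [0, 4, 10, 16, 28, 33];
    for line in wrap(string, &boundaries, 10) {
        println!("{line}");
    }
}
```

Note that the no-break space between “The” and “second” never becomes a break point, because the segmenter did not report a boundary there. A single segment longer than max_chars is emitted as an overlong line instead of being cut.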

Case Mapping

When processing Unicode texts, there is sometimes the need to transform letters between lower case and upper case. ICU4X gives us various tools for this, so let’s look at each of them.

UPPERCASE and lowercase

Lowercasing and uppercasing are very simple operations on the surface. They do similar things to Rust’s built-in str::to_lowercase and str::to_uppercase methods. So let’s see why ICU4X has separate support for them:

let string = "AaBbIıİi";

println!("string: {string}");

let locale = icu::locid::locale!("de-DE");

let cm = icu::casemap::CaseMapper::new();

let lower = cm.lowercase_to_string(string, &locale.id);

let upper = cm.uppercase_to_string(string, &locale.id);

println!("lower: {lower}, upper: {upper}");

$ cargo run
[...]
string: AaBbIıİi
lower: aabbiıi̇i, upper: AABBIIİI

So far this looks like the familiar lowercasing and uppercasing operations from most languages’ standard libraries. But note that we had to provide locale.id to run these operations. The twist here is that the rules for lowercasing and uppercasing can vary by language, which is reflected in ICU4X’s variants of these operations. Observe how the result changes if we use the locale tr-TR instead of de-DE:

$ cargo run
[...]
string: AaBbIıİi
lower: aabbııii, upper: AABBIIİİ

With ICU4X we don’t need to know the details of how different lowercase and uppercase letters pair up in different languages. As long as we pass the correct locale, ICU4X will do the correct thing.
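For comparison, Rust’s built-in str::to_lowercase and str::to_uppercase take no locale at all, so they cannot produce the Turkish results shown above. The sketch below uses only the standard library. The helper describe is made up for this example and merely prints code points so that the combining dot becomes visible.

```rust
/// Renders a string as a space-separated list of its code points.
fn describe(s: &str) -> String {
    s.chars()
        .map(|c| format!("U+{:04X}", c as u32))
        .collect::<Vec<_>>()
        .join(" ")
}

fn main() {
    // "I" lowercases to a dotted "i", never to the dotless "ı" (U+0131).
    println!("{}", describe(&"I".to_lowercase()));
    // "İ" (U+0130) lowercases to "i" followed by a combining dot above.
    println!("{}", describe(&"\u{130}".to_lowercase()));
    // "i" uppercases to "I", never to the dotted "İ" (U+0130).
    println!("{}", describe(&"i".to_uppercase()));
}
```

These results agree with the de-DE output above, which is what one would expect from locale-independent default mappings.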

Note however, that uppercasing and lowercasing operations are only intended for display purposes. If you want to compare strings case-insensitively, you want case folding instead, which we will look at later.

Titlecasing

Titlecasing is the process of uppercasing the first letter of a segment and lowercasing all other characters. So for example, if we wanted to titlecase every word in a string, we would first use a WordSegmenter to extract every word and then use a TitlecaseMapper to perform the titlecasing on every word.

let string = "abc DŽ 'twas words and more wORDS";

println!("string: {string}");

let locale = icu::locid::locale!("de-DE");

let cm = icu::casemap::TitlecaseMapper::new();

let word_segments: Vec<usize> = icu::segmenter::WordSegmenter::new_auto()
    .segment_str(string)
    .collect();

let titlecased: String = word_segments
    .windows(2)
    .map(|bounds| {
        let word = &string[bounds[0]..bounds[1]];
        cm.titlecase_segment_to_string(word, &locale.id, Default::default())
    })
    .collect();

println!("titlecased: {titlecased}");

$ cargo run
[...]
string: abc DŽ 'twas words and more wORDS
titlecased: Abc Dž 'Twas Words And More Words

Again we had to provide &locale.id to specify which language-specific rules to obey during case transformations. Additionally, we can pass other options as a third parameter. Here we’ve used the default options, but feel free to check out the API documentation to see what other options are supported.

Note how DŽ was transformed to Dž, even though it is a single letter whose regular uppercase form is DŽ. This is because each character has separate uppercase and titlecase forms, which just happen to be the same for most Latin characters. Also note that 'twas was transformed to 'Twas. This is because the TitlecaseMapper titlecases the first letter in a word and skips over non-letter characters at the start of a word when doing so.

Case folding

Sometimes we want to tell whether two strings are equal while ignoring differences in casing. Traditionally this has been done by transforming both strings to lower case or upper case to eliminate differences in casing and comparing those strings. With Unicode strings, for some characters simple lowercasing or uppercasing isn’t enough to eliminate all differences in casing. As an example, the German letter ß uppercases to SS, but there’s also an uppercase version of ß: ẞ, which uppercases to itself, but lowercases to a regular ß. To consistently eliminate all casing differences, we need to map SS, ß, and ẞ all to the same output character. Luckily for us, ICU4X gives us the case folding operation, which promises to do just that. Let’s see it in action:

let string = "SSßẞ";

println!("string: {string}");

let locale = icu::locid::locale!("de-DE");

let cm = icu::casemap::CaseMapper::new();

let upper = cm.uppercase_to_string(string, &locale.id);

let lower = cm.lowercase_to_string(string, &locale.id);

let folded = cm.fold_string(string);

println!("upper: {upper}, lower: {lower}, folded: {folded}");

$ cargo run

[...]

string: SSßẞ

upper: SSSSẞ, lower: ssßß, folded: ssssss

As we see, in the folded string all the different versions of ß have been consistently turned into ss, which successfully eliminates all casing differences. It also means that a single ß would be considered equal to a lowercase ss, which we might not have considered equal otherwise. This is a kind of ambiguity that is hard to avoid when comparing strings case-insensitively.

Note that we didn’t have to specify any locale or language for the case folding operation. This is because case folding is often used for identifiers that are supposed to behave identically regardless of the lingual context they’re used in. The case folding operation tries to use rules that work best across most languages. However, they don’t work perfectly for Turkic languages. To deal with this, there’s an alternative case folding operation fold_turkic_string just for Turkic languages. In most cases you’ll probably want to use the general folding operation, unless you’re really sure you need the special behavior for Turkic languages.

Case-insensitive comparison

Given the case folding operation, we could implement a function to compare two strings case-insensitively like this:

fn equal_ci(a: &str, b: &str) -> bool {

let cm = icu::casemap::CaseMapper::new();

cm.fold_string(a) == cm.fold_string(b)

}

Data handling

So far we’ve looked at various operations that work correctly in a vast number of locales over strings made up of a huge number of valid code points. On the surface, these operations were relatively easy to use, and most of the time we only needed to specify our input and a desired locale to get the correct result. However, in the background ICU4X needs a lot of data about different locales and Unicode characters to do the right thing in every situation. So far, we never had to be concerned with this data at all.

So where does ICU4X get all this data from? In the default configuration we’ve been using so far, the data is shipped as part of the library and compiled directly into our application executable. This has the benefit that we don’t need to worry about shipping the data along with the binary and getting access to it at runtime, as the data is always included in the binary. But it comes at the cost of sometimes dramatically increased binary sizes. Since data for a large number of locales is included by default, we’re talking about tens of megabytes of data being included in the binary.

Alternatives to embedded data

Since ICU4X is designed to run even in minimalist environments, such as embedded devices, forcing this increased application binary size on every application would be unacceptable. Instead, ICU4X provides multiple ways to access the relevant data. Besides using the included default set of data, you can also generate your own set of data using icu4x-datagen. This allows you to reduce the data included from the beginning, either by limiting the number of locales to include or by limiting the functionalities supported by the data. Furthermore, you have the choice between compiling this data directly into your application binary or putting it into separate data files that your application then parses at runtime.

Reducing the set of available runtime data of course comes with the benefit of reducing the data size needed to ship with your application. On the other hand, it has the drawback of reducing the set of operations you can successfully run at runtime. Each bit of data you remove can make some operation fail if no data is available to perform that operation with the requested locale. As with many other things, reducing the data size has obvious benefits, but it is always a tradeoff. In the examples above we usually used unwrap to ignore the possibility of errors, but in a real application you’ll probably want more sophisticated error handling, like falling back to some non-failing behavior or at least reporting the error to the user.

I’ll avoid going through all the available options in detail, and instead refer to ICU4X’s official Tutorial on data management. It should explain all the supported ways to make the required data available to your application.

Conclusion

I hope this has given you a satisfactory overview of what ICU4X can do. As we have seen, a lot of functionality works well out of the box. In other areas functionality is still lacking. For example, I’ve mentioned earlier that there’s currently no comfortable way to detect the user’s preferred locale in a standard way from the execution environment. Another area where ICU4X is currently lagging behind its C and Java counterparts is translation support. ICU4C and ICU4J provide capabilities for formatting localized messages using MessageFormats, which ICU4X still lacks. Similarly, ICU4X doesn’t currently seem to have functionality to deal with resource bundles.

Even though ICU4X doesn’t have all the functionality you might expect of it yet, overall it seems like a good choice for those cases where it already brings all the required functionality. Given some more time, we may even see more and more of the missing functionality land in ICU4X.

ProxLB – Version 1.1.0 of the Advanced Loadbalancer for Proxmox Clusters is Ready!

Finally, it’s here – and it’s no April Fool’s joke! The long-awaited version 1.1.0 of ProxLB has been officially released! This new version features a complete code refactoring, making maintenance easier and laying the groundwork for future expansions. Additionally, numerous bugs have been fixed, and more features have been implemented. ProxLB is the result of the dedication of our employee Florian Paul Azim Hoberg, better known as gyptazy, who has applied his knowledge and passion to create a powerful open-source solution for Proxmox clusters. We – as credativ GmbH – believe in the power of open-source software and support him by letting him dedicate working hours to this project.

Closing the gap

Automatic maintenance mode handling

One of the standout features of ProxLB is its maintenance mode. When one or more nodes are placed in maintenance mode, all VMs and containers running on them are automatically moved to other nodes, ensuring the best possible resource utilization across the cluster. This allows for seamless updates, reboots, or hardware maintenance without disrupting ongoing operations.

Custom affinity rules

Furthermore, ProxLB offers extensive customization options through affinity and anti-affinity rules. Administrators can specify whether certain VMs should run together on the same node or be deliberately separated. This is particularly useful for high-availability applications or specialized workloads. Another practical feature is the ability to identify the optimal node for new guests. This function can be easily integrated into CI/CD pipelines using tools like Ansible or Terraform to automate deployments and further enhance cluster efficiency. You can see how this works with ProxLB and Terraform in this example.

ProxLB also stands out with its deep integration into the Proxmox API. It fully supports the Access Control List (ACL), eliminating the need for additional SSH access. This not only enhances security but also simplifies management.

Whether used as a one-time operation or in daemon mode, ProxLB is a flexible, transparent, and efficient cluster management solution. Thanks to its open-source license, users can customize the software to meet their specific needs and contribute to its further development.

Download

ProxLB can be installed in many different ways: it can run inside a dedicated VM (even inside the Proxmox cluster), on bare metal, on a Proxmox node itself, or in containers such as LXC or Docker. The project also provides ready-to-use container images that can be used directly in Docker or Podman. The project’s docs provide a more detailed overview of the different ways to install and use ProxLB, which can simply be found right here. While the resources below are enough for a quick start, you should think about switching to the project’s Debian-based repository for long-term usage.

| Type | Download |
| --- | --- |
| Debian Package | proxlb_1.1.0_all.deb |
| Container Image | cr.gyptazy.com/proxlb/proxlb:latest |

Conclusion

With version 1.1.0, ProxLB lives up to its reputation as an indispensable tool for Proxmox administrators, especially for those transitioning from VMware. Try out the new version and experience how easy and efficient load balancing can be in your cluster! We are also happy to support you with the integration and operation of ProxLB in your cluster, as well as with all other Proxmox-related topics, including planning a migration from other hypervisor technologies to Proxmox!

From March 1st, 2025, the Mönchengladbach-based open source specialist credativ IT Services GmbH will once again operate as an independent company on the market. credativ GmbH was acquired by NetApp in May 2022 and integrated into NetApp Deutschland GmbH on February 1st, 2023. This step enabled the company to draw on extensive experience and a broader resource base. However, after intensive collaboration within the storage and cloud group, it has become clear that credativ can offer the best conditions for addressing the needs of its customers in an even more targeted manner thanks to its regained independence. The transfer of operations will be supported by all 46 employees.

“We have decided to take this step to focus on our core business areas and create the best possible conditions for further growth. This means maximum flexibility for our customers. We would like to thank the NetApp management for this extraordinary opportunity,” said David Brauner, Managing Director of credativ IT Services GmbH.

“The change is a testament to the confidence we have in the credativ team and their ability to lead the business towards a prosperous future,” explained Begoña Jara, Vice President of NetApp Deutschland GmbH here in Germany.

What does this change mean for credativ customers?

As a medium-sized company, the open source service provider can rely on even closer collaboration and more direct communication with its customers. An agile structure should enable faster and more customised decisions, and thus a more flexible response to requests and requirements. Naturally, the collaboration with the various NetApp teams and their partner organisations will also continue as before.

credativ has been a service provider in the open source sector since 1999, with a strong focus on IT infrastructure, virtualisation, and cloud technologies. credativ also has a strong team that focuses on open source-based databases, such as PostgreSQL, and related technologies. In the next few weeks, the new company will be renamed credativ GmbH.

Introduction

Proxmox Virtual Environment (VE) is a powerful open-source platform for enterprise virtualization. It supports advanced Dynamic Memory Management features, including Kernel Samepage Merging (KSM) and Memory Ballooning, which can optimize memory usage and improve performance. This blog post evaluates the effectiveness of KSM and Memory Ballooning features in Proxmox VE using Linux virtual machines (VMs). We will set up a VM with Proxmox VE for a test environment, perform tests, and analyze the results to understand how these features can benefit virtualized environments. Additionally, we will have a look at the security concerns of enabling KSM and the risks associated with using ballooning, especially in database environments.

What’s KSM?

Kernel Samepage Merging (KSM) is a memory deduplication feature in the Linux kernel that scans for identical memory pages in different processes and merges them into a single page to reduce memory usage. It is particularly useful in virtualized environments where multiple VMs may have similar or identical data in memory, such as when running the same operating system or applications.

KSM was introduced long ago, with Linux kernel version 2.6.32 in 2009. However, that has not stopped the developers from introducing new features for KSM, as shown by the 6.x kernels. You can find the changes here: Breakdown of changes to Kernel Samepage Merging (KSM) by Kernel Version. As you can see, the kernel developers are constantly adding new KSM features to the Linux kernel to further improve its functionality.

The Linux kernel currently used in Proxmox VE is 6.8.x, for example. It supports the newly added “Smart Scan” feature, which we are going to test together in this blog post.

What’s Memory Ballooning?

Memory Ballooning is a technique used in virtualized environments to dynamically adjust the memory allocation of VMs based on their current needs. A “balloon driver” within the guest VM allocates unused memory into a pool of memory (the “balloon”), allowing the hypervisor to reallocate memory resources to other VMs as needed. This helps optimize memory usage across the host system, ensuring that memory is efficiently utilized and not wasted on idle VMs.

Tests Setup

To evaluate KSM and ballooning features in Proxmox VE, we set up a test cluster consisting of one node which we operate within a VM that offers 16GB of RAM. That sample cluster will then run multiple Linux Guest VMs on top of it to demonstrate the KSM and Memory Ballooning features.

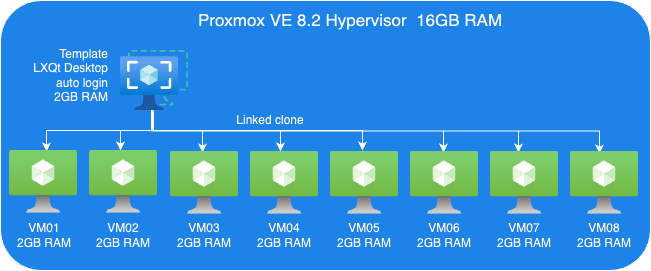

The following picture shows an overview of our test VM setup:

Proxmox VE Host:

- A VM to install Proxmox VE 8.2.

- 8 Cores vCPU

- 16GB RAM

- 200GB Virtio storage

Linux Guest VM Template:

- Linux Guest

- 2GB RAM

- Install Debian LXQt desktop

- 16GB Virtio storage

Linux Guest VMs:

- 8 VMs, Linked-Clone from template

Perform tests

We perform two sets of tests. First, we evaluate KSM on its own. Then, we run a second set of tests to evaluate memory ballooning without KSM.

Guest VMs Setup for KSM Tests:

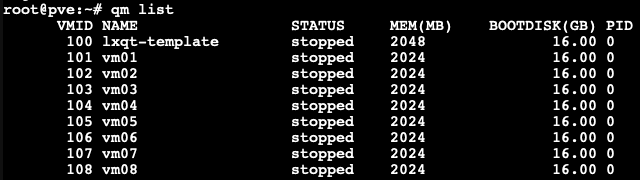

- We cloned 8 VMs out of our VM template with 2GB RAM each, as you can see in the picture below.

Each VM configured with 2GB RAM without ballooning enabled.

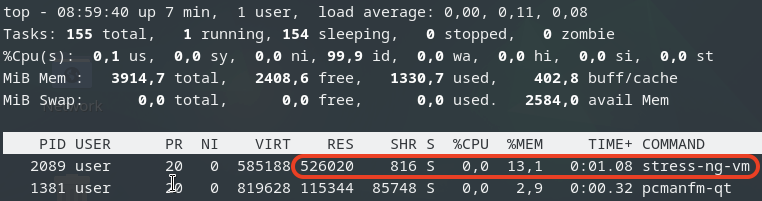

Next, we boot those 8 VMs up and start them with LXQt desktop auto-login without triggering KSM. Here, we want to check how much memory each of those VMs consumes before applying any kind of reducing mechanism.

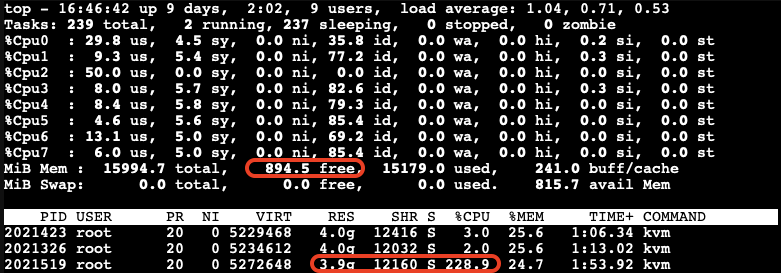

As you can see, all 8 VMs consume 13154.1MB in total. The screenshot above has been captured on our Proxmox VE host.

- Enable KSM Smart Scan by running the following command on the host:

# echo "scan-time" > /sys/kernel/mm/ksm/advisor_mode

- Enable KSM run:

# echo 1 > /sys/kernel/mm/ksm/run
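Once KSM is running, its progress can be followed through the counters the kernel exposes next to the run file. The following is a minimal sketch, assuming the standard sysfs directory /sys/kernel/mm/ksm and its pages_sharing counter (the number of deduplicated page references); the helper names read_ksm_counter and saved_mib are made up for this illustration and are not part of Proxmox VE.

```python
import os
from pathlib import Path

# Assumption: standard KSM sysfs location on a Linux host.
KSM_DIR = Path("/sys/kernel/mm/ksm")


def read_ksm_counter(name: str, base: Path = KSM_DIR) -> int:
    """Read one integer counter (e.g. pages_sharing) from the KSM sysfs directory."""
    return int((base / name).read_text().strip())


def saved_mib(pages_sharing: int, page_size: int = 4096) -> float:
    """Rough estimate of memory saved: one page per deduplicated reference."""
    return pages_sharing * page_size / (1024 * 1024)


if __name__ == "__main__":
    if (KSM_DIR / "pages_sharing").exists():
        sharing = read_ksm_counter("pages_sharing")
        page_size = os.sysconf("SC_PAGE_SIZE")
        print(f"KSM saves roughly {saved_mib(sharing, page_size):.1f} MiB")
    else:
        print("KSM sysfs interface not available on this system")
```

The estimate is only approximate, since it ignores the small bookkeeping overhead KSM itself needs.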

Observations on KSM Smart Scan

The KSM Smart Scan feature appears to be more efficient than the classic ksmtuned method, as it comes with optimizations for page scanning that skip pages if de-duplication was not successful in previous attempts. This significantly reduces the CPU time required for scanning pages, which is especially helpful when the system has reached a “steady state”. During our tests, we did not observe ksmd occupying significant system resources, which suggests that KSM Smart Scan can optimize memory usage with minimal overhead.

Test Results

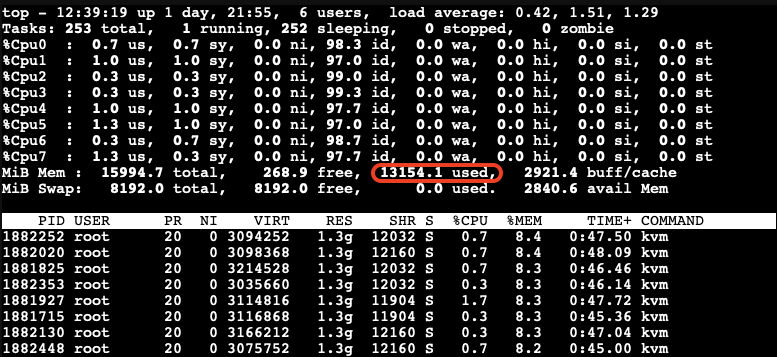

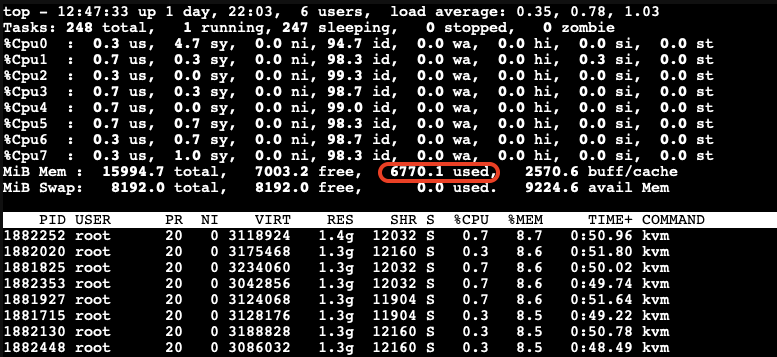

- After a while, as KSM scans and merges pages, the used memory drops to 6770.1 MiB.

- We can also see the KSM sharing status in the Proxmox VE web UI.

A significant reduction in memory usage was observed. Although there was a slight increase in CPU usage by ksmd during KSM operation, there was no significant degradation in VM performance. This indicates that KSM operates efficiently without imposing a heavy load on the system. The merging of identical pages resulted in better memory utilization, allowing more VMs to run on the same host without additional hardware.
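To put the two measurements into proportion, the reduction can be worked out with a few lines of arithmetic. This is only a sketch using the two totals reported above (13154.1 before and 6770.1 after KSM), assuming both figures use the same unit; the function name reduction_percent is chosen for illustration.

```python
def reduction_percent(before: float, after: float) -> float:
    """Relative memory reduction in percent between two measurements."""
    return (before - after) / before * 100.0


# Totals taken from the test above, assumed to be in the same unit (MiB).
before_mib = 13154.1
after_mib = 6770.1

saved = before_mib - after_mib
print(f"saved {saved:.1f} MiB ({reduction_percent(before_mib, after_mib):.1f}%)")
```

In this test, KSM therefore freed a little under half of the memory the eight guests had consumed.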

Kernel Samepage Merging (KSM) in Windows VMs

KSM is a native feature in the Linux kernel that works at the hypervisor level, scanning memory pages across all VMs and merging identical pages into a single shared page. This process reduces the overall memory footprint of the VMs.

For Windows VMs, the hypervisor treats their memory similarly to Linux VMs, identifying and merging identical pages. This means that the benefits of KSM also extend to Windows VMs running on Proxmox VE: Proxmox itself runs Linux and therefore uses the KSM kernel feature no matter which OS the guest VMs on top of Proxmox VE are running.

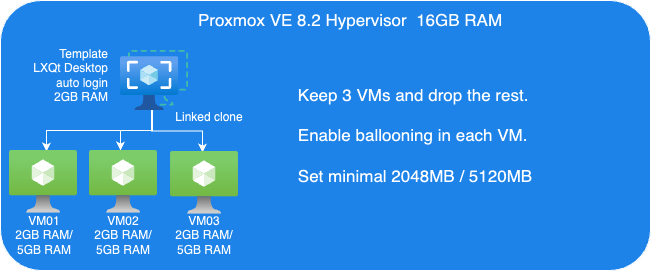

Guest VMs Setup for Ballooning Tests:

Next, let’s have a look at memory ballooning in another test. To evaluate the ballooning features in Proxmox VE, we will repurpose the Proxmox VE environment used for the KSM tests with the following adjustments:

- Retain three VMs and remove the others.

- Enable Ballooning in each VM.

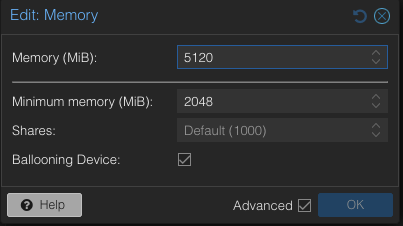

- Set the minimum memory to 2048MB and the maximum memory to 5120MB in each VM.

Disable KSM:

To disable KSM manually, execute the following command. Writing 2 stops ksmd and unmerges all pages that are currently merged:

# echo 2 > /sys/kernel/mm/ksm/run

The following picture shows an overview of our Ballooning test VMs setup:

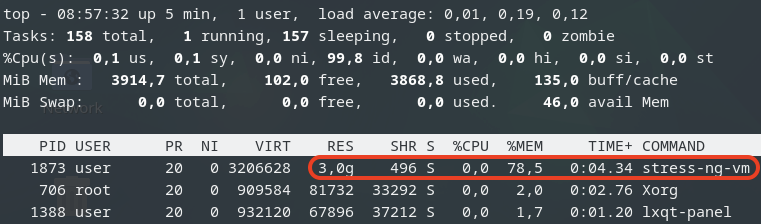

Due to memory ballooning, we should now have more memory available for each VM. Let’s test this by using stress-ng to allocate 4GB of memory on each guest VM and hold the allocated memory for a number of seconds that you can specify:

$ stress-ng --vm-bytes 4G -m 1 --vm-hang <seconds>

The --vm-hang <seconds> option specifies how many seconds the stressor sleeps before unmapping the memory.

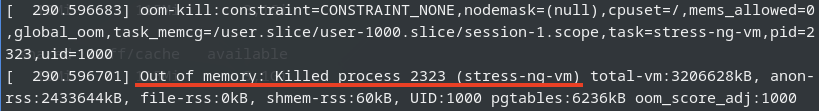

OOM-Killer!

We observed the OOM-killer being triggered on the Proxmox VE host.

Having the OOM-killer triggered on the host is problematic. Allocating 5GB of memory to each VM resulted in excessive overcommitment, causing the OOM-killer to activate due to insufficient memory to handle the host’s workload.

A triggered OOM-killer is always problematic, but one triggered on the host is even worse than one triggered within a guest VM, since you never know which VM gets terminated, or at least it is really hard to forecast.

One of the basic purposes of memory ballooning is to avoid the OOM-killer being triggered on the host system, since it can cause “more” damage there than an OOM-killer triggered within a specific VM.
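The overcommitment behind this OOM situation can be checked with simple arithmetic. The sketch below compares the two maximum-memory settings used in these tests (5120MB and 4096MB per VM) on a host with 16GB; the function name host_headroom_mb is made up for illustration, and the calculation deliberately ignores memory used by the host's own services, so real headroom is smaller.

```python
def host_headroom_mb(host_mb: int, vm_max_mb: int, vm_count: int) -> int:
    """Memory left for the host if every VM uses its configured maximum."""
    return host_mb - vm_max_mb * vm_count


HOST_MB = 16 * 1024  # 16GB test host
VM_COUNT = 3         # three guest VMs retained for the ballooning tests

for vm_max in (5120, 4096):
    headroom = host_headroom_mb(HOST_MB, vm_max, VM_COUNT)
    share = headroom / HOST_MB * 100
    print(f"max {vm_max}MB per VM -> {headroom}MB left for the host ({share:.2f}%)")
```

With 5120MB per VM, only about 1GB would remain for the hypervisor itself, which is consistent with the host running out of memory in the test.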

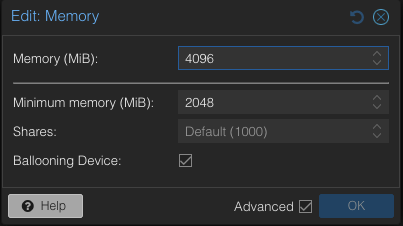

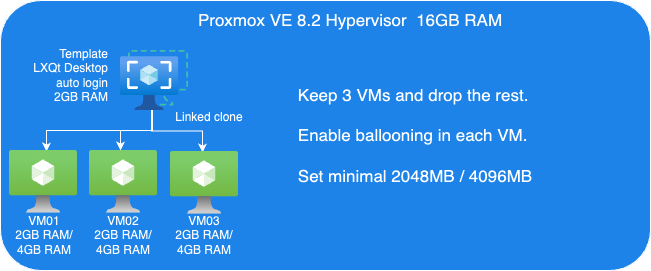

Reduce Maximum Memory Configuration in VMs for Ballooning Tests

To address the overcommitment issue, let’s reduce the maximum memory configuration in each VM to 4GB.

- Adjust the maximum memory setting for each VM to 4GB.

- Boot up three VMs.

Next, we’ll use stress-ng in the guest VM to allocate 3GB of memory and then hang for a specified duration without CPU usage on each guest VM:

$ stress-ng --vm-bytes 3G -m 1 --vm-hang <seconds>

Check Memory Usage on the Host

After running the stress-ng test, we check the memory usage on the host:

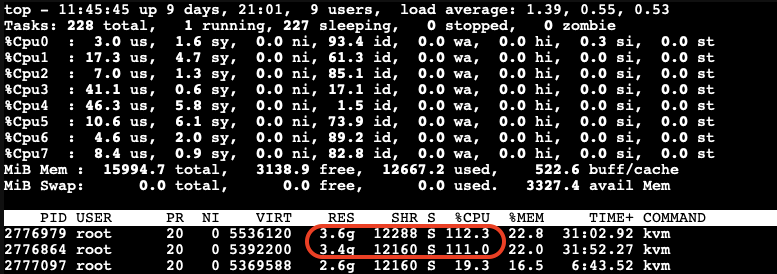

The free memory on the host is now low. The third VM, which is trying to allocate memory, experiences very high CPU usage due to the limited resources available on the host.

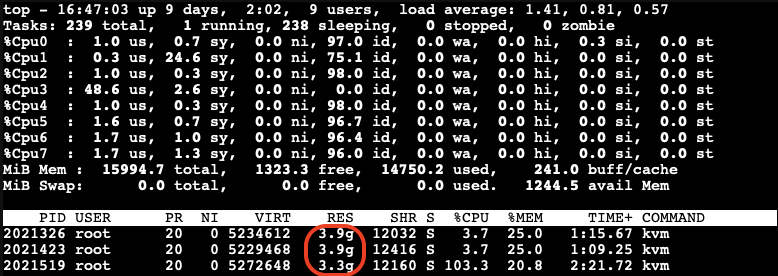

After a while, we can observe on the host that the ballooning driver starts to reclaim memory from the guest VMs. Each VM’s RES (occupied physical memory) is reduced:

The ballooning driver is now reclaiming memory from each guest VM to increase the available free memory on the host. This action helps to maintain the host’s workload but causes all other guest VMs to slow down due to reduced memory allocation.

Impact of Ballooning on Guest VMs

The slowed-down VMs eventually do not have enough free memory to maintain their workloads. As a result, the OOM-killer is triggered inside the guest VMs:

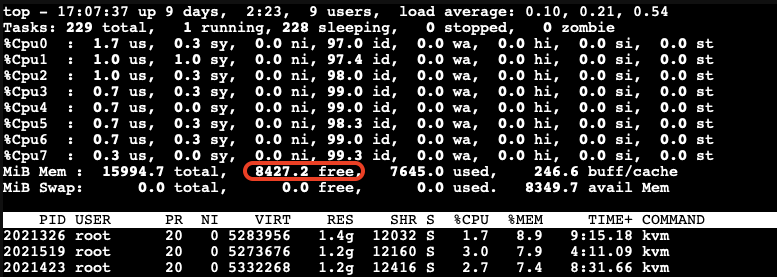

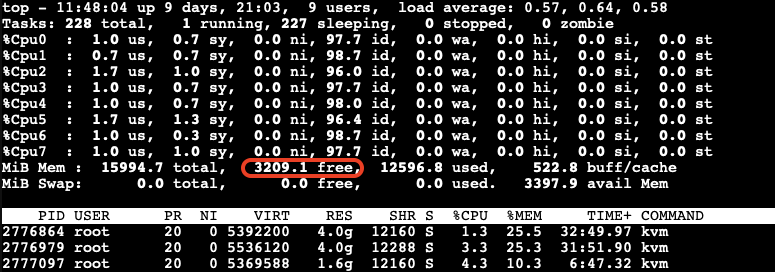

All the VMs hang for a while, and then the OOM-killer triggers to terminate the stress-ng process. After this, the VMs return to their normal state, and there is sufficient available free memory on the host:

When Does Memory Stealing Get Triggered?

To determine when memory stealing gets triggered, let’s conduct another test. We will use the same stress-ng command to allocate 3GB of memory on two VMs.

Next, we will gradually allocate memory on the third VM, starting with 512MB and then incrementally adding another 512MB until we observe memory reclaiming being triggered.

As we gradually increase the memory allocation on the third VM, we monitor the host’s memory usage:

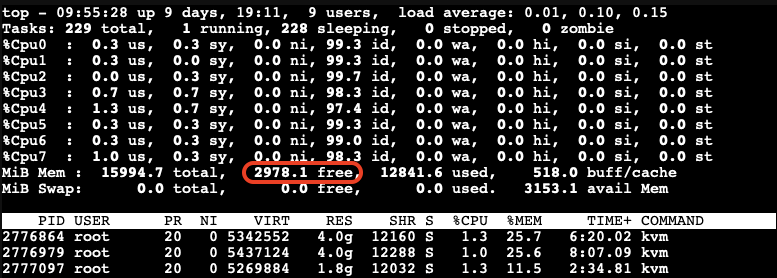

We observe that memory stealing is not yet triggered when the available free memory on the host reaches 2978.1MB (approximately 18.5%) of the total memory.

Let’s allocate a bit more memory on the third VM to further reduce the available free memory on the host. We found that when the available free memory on the host reaches around 15% of the total memory, the ballooning driver starts stealing memory from the guest VMs:

At this point, we can see the memory allocated to the VMs being reduced and the CPU usage increasing significantly.

The memory stealing process continues until the available free memory on the host reaches 20% of the total memory again. After releasing the allocated memory from the third VM, we observe that the reclaiming process stops when the available free memory on the host reaches 20% of the total memory.
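The behaviour observed here resembles a hysteresis: reclaiming starts at one threshold and stops at a higher one. The following sketch models only that observation. The two thresholds are the approximate values measured in this test (about 15% and 20% free host memory), not documented Proxmox VE constants, and the names next_state and simulate are hypothetical.

```python
# Assumption: thresholds as observed in this test, not official constants.
START_RECLAIM_FREE_PCT = 15.0  # reclaiming began at roughly this level
STOP_RECLAIM_FREE_PCT = 20.0   # reclaiming stopped once this level was reached


def next_state(reclaiming: bool, free_pct: float) -> bool:
    """Return whether memory reclaiming is active after seeing free_pct."""
    if not reclaiming and free_pct <= START_RECLAIM_FREE_PCT:
        return True
    if reclaiming and free_pct >= STOP_RECLAIM_FREE_PCT:
        return False
    return reclaiming


def simulate(samples: list[float]) -> list[bool]:
    """Run the state machine over a series of free-memory percentages."""
    state = False
    history = []
    for free_pct in samples:
        state = next_state(state, free_pct)
        history.append(state)
    return history


if __name__ == "__main__":
    samples = [25.0, 18.5, 15.0, 17.0, 19.9, 20.0, 18.0]
    for free_pct, active in zip(samples, simulate(samples)):
        print(f"{free_pct:5.1f}% free -> reclaiming: {active}")
```

The gap between the two thresholds explains why reclaiming keeps running at 17% or 19.9% free memory once it has started, while the same values do not trigger it beforehand.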

Visualizing the Ballooning Tests Results

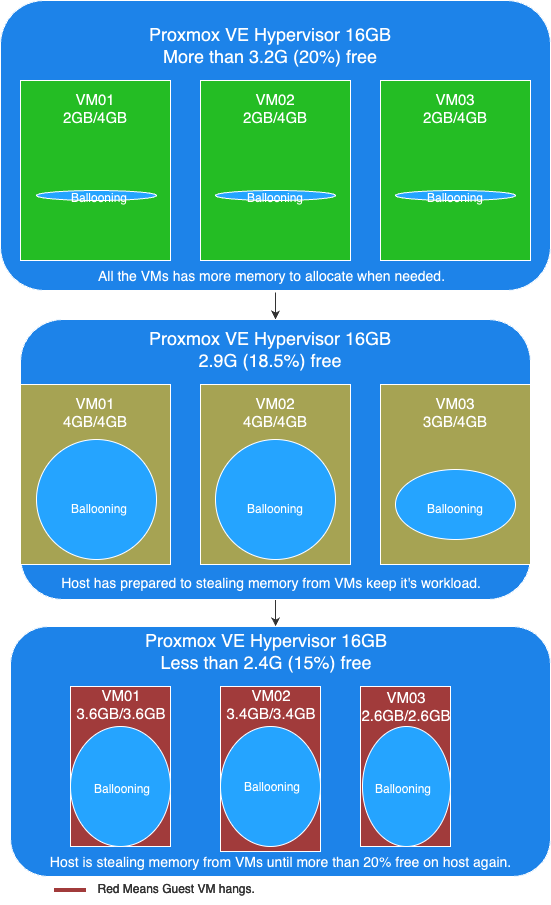

The following picture illustrates the observations from our tests:

In this picture, you can see the following key points:

- More than 20% free available memory on host: The initial memory allocation to the VMs, where each VM is configured so that it can allocate a maximum of 4GB of memory.

- Free available memory reached 18.6% on host: The first and second VMs have allocated their maximum of 4GB of memory. The incremental allocation of memory to the third VM begins, starting with 512MB and increasing in 512MB increments.

- Triggering Memory Stealing: The point at which the available free memory on the host drops to around 15% of the total memory, triggering the ballooning driver to reclaim memory from the guest VMs. The red color in the guest VMs indicates increased CPU usage while the ballooning driver steals memory, which affects the performance of the guest VMs.

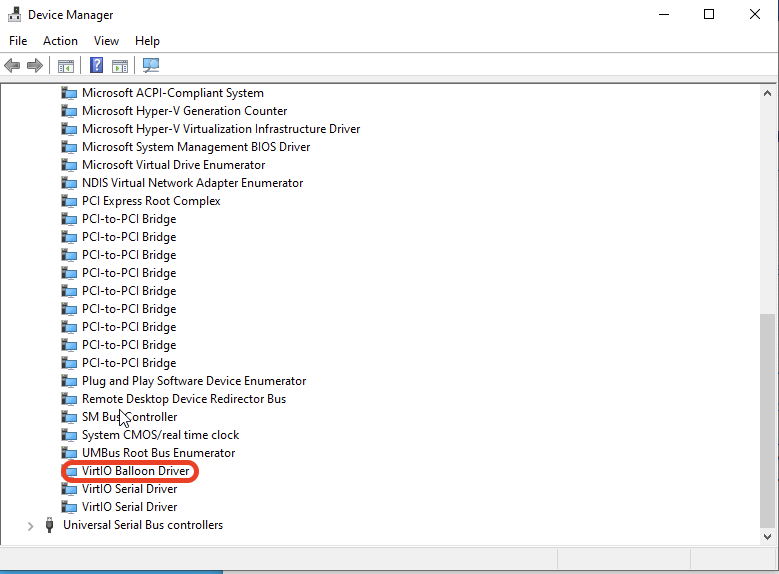

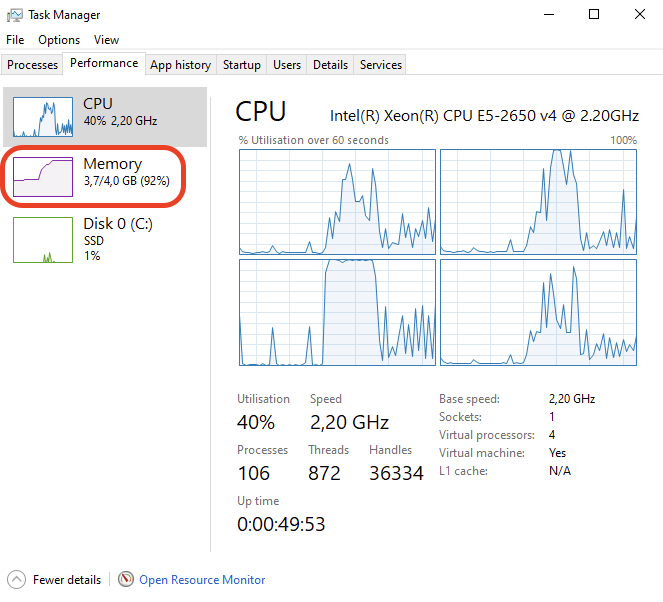

Memory Ballooning in Windows VMs

Memory ballooning also works with Windows VMs in Proxmox VE via the Windows VirtIO drivers. You can find the driver ISO in the Proxmox wiki or download it directly from the upstream VirtIO drivers ISO.

Compared to Linux VMs

Memory hot plug is supported in Linux VMs, allowing the total amount of memory to change dynamically when the ballooning driver is active. This means that in Linux VMs, you can see the total memory allocation adjust in real-time as the ballooning driver works. Windows does not support memory hot plug in the same way. As a result, you won’t see the total amount of memory adjusted in a Windows VM. Instead, you will observe an increase in the amount of used memory. Despite this difference, the end result is the same: the available free memory is reduced as the ballooning driver reclaims memory.

This screenshot shows how the used memory inside a Windows VM increases while ballooning is actively stealing memory.

Results

Memory ballooning in Proxmox VE is a powerful feature for dynamically managing memory allocation among VMs, optimizing the host’s overall memory usage. However, it’s crucial to understand the thresholds that trigger memory reclaiming to avoid performance degradation. It is recommended to set an appropriate minimum memory limit so that no more memory can be stolen once this minimum threshold is reached. This keeps the guest VM stable and prevents the OOM-killer from terminating processes inside the guest VM. By setting memory allocations appropriately, monitoring them carefully, and adjusting them when needed, you can ensure a stable and efficient virtual environment.

Security Concerns

Implications of Enabling KSM

The Kernel Samepage Merging (KSM) page in the Proxmox VE wiki describes the security implications of KSM. Researchers have already shown that memory deduplication can be a threat to the guest OS (“Memory Deduplication as Threat to the Guest OS”), that “Remote Memory-Deduplication Attacks” are possible, and that Linux VMs can be compromised (“New FFS Rowhammer Attack Hijacks Linux VMs”).

Given these concerns, you should only enable KSM when you have full control of all the VMs. If you are using Proxmox VE to provide hosting services, you should consider disabling KSM to protect your users. Furthermore, you should check your country’s regulations, as disabling KSM may be a legal requirement.

Risks When Using Databases with Ballooning

Memory ballooning dynamically adjusts the memory allocation of VMs based on demand. While this feature is beneficial for optimizing memory usage, it poses certain risks when used with databases like PostgreSQL, which rely heavily on available memory for performance. If the balloon driver reclaims too much memory, the resulting memory pressure can trigger the OOM-killer, which kills the process with the highest score until the high memory stress situation is over. Because that score is largely based on memory consumption, the process with the highest score is very likely to be the database itself.

Given this concern, you should run database servers in VMs without memory ballooning enabled, or set a no-overcommit policy in the Linux kernel inside the guest VM if you don’t have such control.

Conclusion

Our tests demonstrate that KSM and memory ballooning are effective features in Proxmox VE for optimizing memory usage in virtualized environments. KSM can significantly reduce memory usage by merging identical pages across VMs, while memory ballooning allows dynamic adjustment of memory allocation based on demand.

Memory ballooning in Proxmox VE is a powerful feature for dynamically managing memory allocation among VMs, optimizing the host’s overall memory usage. However, it’s crucial to understand the thresholds that trigger memory reclaiming to avoid performance degradation. By carefully monitoring and adjusting memory allocations, you can ensure a stable and efficient virtual environment.

Together, these features can enhance the efficiency and performance of virtualized workloads, making Proxmox VE a robust solution for enterprise virtualization.

By leveraging KSM and memory ballooning, organizations can achieve better resource utilization and potentially reduce hardware costs. If you have full control of the host and all the VMs, consider enabling these features in Proxmox VE to explore these potential benefits.

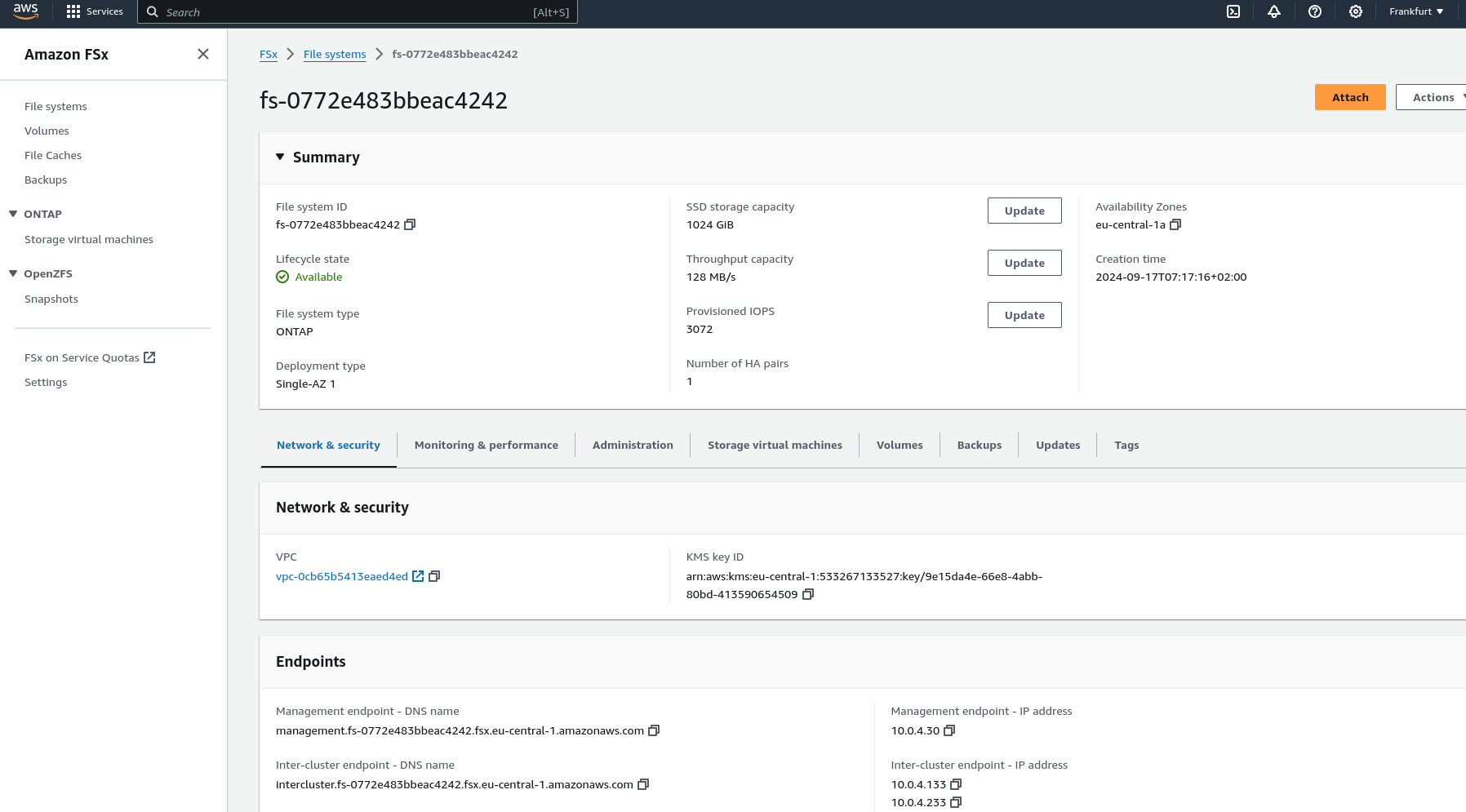

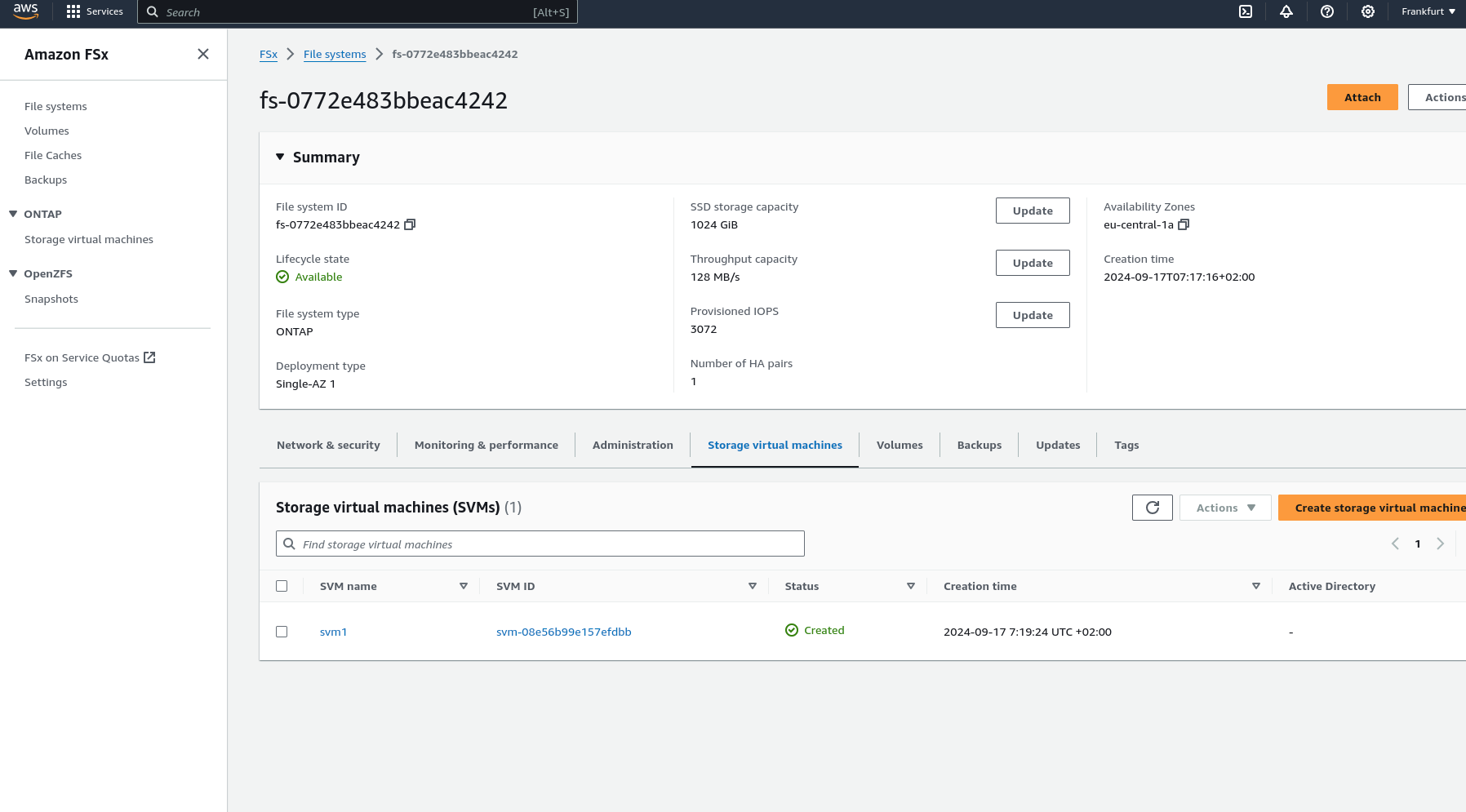

Introduction

In our previous article, we introduced NetApp Storage and NVMe-oF for Breakthrough Performance in Proxmox Virtualization Environments. That article introduced LVM with NVMe-oF via TCP on NetApp storage with Proxmox VE, highlighting its potential to deliver a high-performance storage solution that is suitable for latency-sensitive applications like virtualized data servers. It also works over an Ethernet network without other specialized hardware such as Fibre Channel or InfiniBand, which can be cost-prohibitive for many enterprises.

While NVMe-oF offers significant performance benefits, it is primarily supported on newer and higher-end NetApp ONTAP storage systems, like the AFF series. For organizations with older or hybrid storage systems, iSCSI remains a viable and cost-effective alternative that leverages existing Ethernet infrastructure and provides reliable performance for virtualization environments.

In this blog post, we will delve into using iSCSI (Internet Small Computer Systems Interface) in NetApp Storage with Proxmox VE.

Setup

Hardware and Software Used in This Example

- NetApp Storage System: Provides shared storage capabilities essential for clustering in virtual environments via iSCSI. The example uses ONTAP Release 9.15.1.

- Proxmox VE Cluster: An open source virtualization platform. The example uses the current release 8.2.

- Network Infrastructure: Standard Ethernet network (1GbE or 10GbE).

- iSCSI Initiator: Built into Proxmox VE.

What is iSCSI

iSCSI (Internet Small Computer Systems Interface) is a network protocol that allows for the transport of block-level storage data over IP (Internet Protocol) networks. The protocol allows clients (called initiators) to send SCSI commands over TCP/IP to storage devices (targets) on remote servers. It allows for the connection of storage devices over a standard network infrastructure without requiring specialized hardware and cabling.

It offers a flexible, cost-effective, and scalable storage solution that integrates well with virtualization environments, providing the necessary features and performance to support modern virtualized workloads.

How iSCSI Works

- Initiator and Target: iSCSI communication involves two main components:

  - Initiator: The client-side component, typically a server or host, that initiates the connection to the storage device.

  - Target: The server-side component, typically a storage array or device, that provides access to the storage resources.

- LUNs (Logical Unit Numbers): Storage resources on the iSCSI target are presented as LUNs. The initiator accesses these LUNs as if they were local disks, allowing the operating system to format, partition, and use them just like any other block storage device.

Configuration

On NetApp Storage

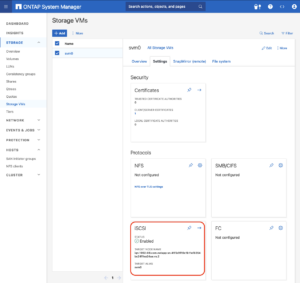

This guide presumes that you have already established the foundational storage setup, including the configuration of Storage Virtual Machines (SVMs). Administration of these systems is relatively straightforward thanks to ONTAP System Manager, the intuitive web interface provided by NetApp storage systems, which is designed to simplify complex tasks. This also includes the whole setup for iSCSI storage, which requires enabling iSCSI on the SVM, setting up the SAN initiator group, and mapping it to LUNs.

Note: All changes can of course also be performed in an automated way by orchestrating the ONTAP API.

Enable iSCSI target on SVM

iSCSI can typically be enabled at the SVM level on a NetApp storage system by following the steps summarized below in the system’s web interface, ONTAP System Manager.

Navigate to the Storage menu. Then, navigate to Storage VMs. Specify the SVM name you wish to configure:

- Configure iSCSI Protocol: Within the SVM settings, look for a section or tab related to protocols. Locate the iSCSI option and enable it. This might involve checking a box or switching a toggle to the ‘on’ position.

- Save and Apply Changes: After enabling iSCSI, make sure to save the changes. There might be additional prompts or steps to confirm the changes, depending on the specific NetApp system and its version.

Remember to check for any prerequisites or additional configuration settings that might be required for iSCSI operation, such as network settings, licensing, or compatible hardware checks. The exact steps may vary slightly depending on the version of ONTAP or the specific NetApp model you are using. Always refer to the latest official NetApp documentation or support resources for the most accurate guidance.

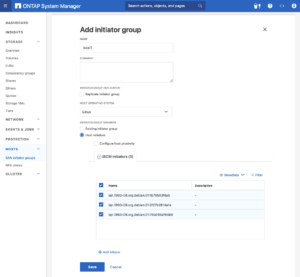

Create SAN initiator group

Navigate to the HOSTS menu. Then, navigate to SAN initiator groups. Select the specific initiator group name you wish to configure:

- Configure Host initiators: On each Proxmox VE node, look up the /etc/iscsi/initiatorname.iscsi file and collect the InitiatorName (iSCSI host IQN). Add each InitiatorName as a host initiator in the initiator group in ONTAP System Manager.

- Save and Apply Changes: After adding all the initiators, ensure to save the changes. There might be additional prompts or steps to confirm the changes, depending on the specific NetApp system and its version.
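Collecting the InitiatorName by hand becomes tedious on larger clusters. The following is a minimal sketch of how the value could be extracted from the contents of /etc/iscsi/initiatorname.iscsi. The function name and the sample IQN are hypothetical and only serve as an illustration; how the file content is fetched from each node is left open.

```python
def parse_initiator_name(text: str) -> str:
    """Return the IQN found in the contents of /etc/iscsi/initiatorname.iscsi."""
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep and key.strip() == "InitiatorName":
            return value.strip()
    raise ValueError("no InitiatorName entry found")


# Hypothetical file content, as it might look on a Debian-based node.
sample = """## DO NOT EDIT OR REMOVE THIS FILE!
InitiatorName=iqn.1993-08.org.debian:01:abcdef123456
"""

print(parse_initiator_name(sample))
```

The returned strings can then be added to the initiator group as described above.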

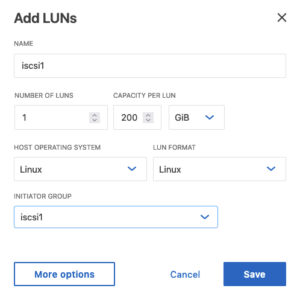

Create LUNs with initiator group

Navigate to the Storage menu. Then, navigate to LUNS. Select the specific LUN name you wish to configure:

- Add LUNs: Specify the number of LUNs you want to configure. Set the Host Operating System to Linux. Select the Initiator Group created in the previous step.

- Save and Apply Changes: After adding LUNs, ensure to save the changes. There might be additional prompts or steps to confirm the changes, depending on the specific NetApp system and its version.
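As noted earlier, these changes can also be automated through the ONTAP API. The sketch below only builds the request descriptions for the three steps (enabling iSCSI, creating the initiator group, mapping a LUN) and sends nothing. The endpoint paths and field names reflect our reading of the ONTAP REST API and are assumptions that should be checked against the API reference of your ONTAP version. The SVM name, igroup name, LUN path, and IQN are hypothetical.

```python
import json


def iscsi_service_request(svm: str) -> dict:
    # Assumed endpoint for enabling the iSCSI service on an SVM.
    return {
        "method": "POST",
        "path": "/api/protocols/san/iscsi/services",
        "body": {"svm": {"name": svm}, "enabled": True},
    }


def igroup_request(svm: str, name: str, initiators: list) -> dict:
    # Assumed endpoint for creating a SAN initiator group with host IQNs.
    return {
        "method": "POST",
        "path": "/api/protocols/san/igroups",
        "body": {
            "svm": {"name": svm},
            "name": name,
            "os_type": "linux",
            "protocol": "iscsi",
            "initiators": [{"name": iqn} for iqn in initiators],
        },
    }


def lun_map_request(svm: str, lun_path: str, igroup: str) -> dict:
    # Assumed endpoint for mapping an existing LUN to an initiator group.
    return {
        "method": "POST",
        "path": "/api/protocols/san/lun-maps",
        "body": {
            "svm": {"name": svm},
            "lun": {"name": lun_path},
            "igroup": {"name": igroup},
        },
    }


requests_to_send = [
    iscsi_service_request("svm1"),
    igroup_request("svm1", "proxmox", ["iqn.1993-08.org.debian:01:abcdef123456"]),
    lun_map_request("svm1", "/vol/vol1/lun1", "proxmox"),
]
print(json.dumps(requests_to_send, indent=2))
```

Keeping the request construction separate from the HTTP client makes it easy to review the planned changes before anything is applied to the storage system.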

Configuring Proxmox Node

General

After configuring the iSCSI target and LUNs on the NetApp storage appliance, we can now configure the Proxmox VE cluster to access and use the iSCSI storage. This can easily be done through the Proxmox web interface. In general, the process consists of:

- Connecting with the iSCSI Block Storage.

- Creating an LVM (Logical Volume Manager) volume group that is shareable across the nodes of the Proxmox VE cluster.

These instructions are likely to work on both older and newer versions of Proxmox VE, too. The next steps in this blog post cover the process in detail and guide you through the necessary steps, all of which can be done in the Proxmox web interface.

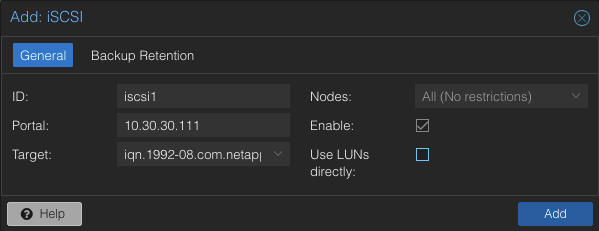

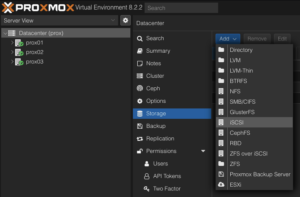

Connecting With the iSCSI Block Storage

To use this iSCSI block storage on the Proxmox VE cluster, log in to the web frontend of the Proxmox VE cluster and add the storage at the datacenter level:

Navigate to the Storage Configuration: Go to Datacenter -> Storage -> Add -> iSCSI.

Define the New iSCSI Storage Details:

- ID: iscsi1 (this can be any text and is just an identifier).

- Portal: Enter the IP address of the iSCSI-enabled SVM.

- Target: This will be detected if you specify the correct IP for the iSCSI-enabled SVM.

- Nodes: <Select all nodes that should use the iSCSI storage>

- Enable: Yes

- Use LUNs directly: No
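The same settings can also be expressed on the command line with pvesm. The following is a minimal sketch that only assembles the command and does not execute it. The portal address, target IQN, and node names are hypothetical, and "Use LUNs directly: No" is assumed to correspond to the content type none.

```python
import shlex


def pvesm_add_iscsi(storage_id: str, portal: str, target: str, nodes: list) -> list:
    """Build the argument list for adding an iSCSI storage with pvesm."""
    args = [
        "pvesm", "add", "iscsi", storage_id,
        "--portal", portal,
        "--target", target,
        # LUNs are not used directly; they only back the LVM volume group.
        "--content", "none",
    ]
    if nodes:
        args += ["--nodes", ",".join(nodes)]
    return args


cmd = pvesm_add_iscsi(
    "iscsi1",
    "192.0.2.10",                             # hypothetical SVM iSCSI portal IP
    "iqn.1992-08.com.netapp:sn.example:vs.1", # hypothetical target IQN
    ["pve1", "pve2", "pve3"],                 # hypothetical node names
)
print(shlex.join(cmd))
```

Building an argument list instead of a single string avoids quoting problems when the command is later passed to a process runner.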

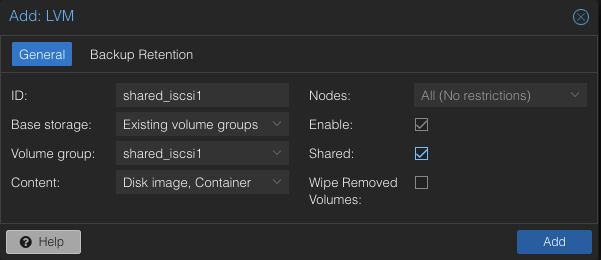

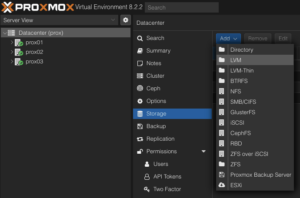

Create LVM on the iSCSI Block Storage

To use this LVM Volume Group over iSCSI block storage on all Proxmox VE nodes within the cluster, the Volume Group must be added and integrated at the datacenter level. Follow these steps to configure it through the Proxmox VE web interface:

Navigate to the Storage Configuration: Go to Datacenter -> Storage -> Add -> LVM.

Define the New LVM Storage Details:

- ID: shared_iscsi1 (this can be any text and is just an identifier)

- Base storage: Existing volume groups

- Volume group: shared_iscsi1

- Content: Disk image, Container

- Nodes: <Select all nodes that should use it>

- Enable: Yes

- Shared: Yes

- Wipe Removed Volumes: No

Press Add to attach the new volume to the selected nodes. The LVM storage will then be available for use.
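For reference, the settings above map to an entry in the cluster-wide storage configuration. The sketch below renders such an entry as text; it assumes that the volume group shared_iscsi1 already exists (the base storage is set to "Existing volume groups"), that "Disk image" and "Container" correspond to the content types images and rootdir, and that "Wipe Removed Volumes" corresponds to the saferemove option. The node names are hypothetical.

```python
def render_lvm_stanza(storage_id: str, vgname: str, nodes: list,
                      content=("images", "rootdir"),
                      shared: bool = True, saferemove: bool = False) -> str:
    """Render a storage.cfg-style entry for a shared LVM storage."""
    lines = [
        f"lvm: {storage_id}",
        f"\tvgname {vgname}",
        f"\tcontent {','.join(content)}",
        f"\tshared {int(shared)}",
        f"\tsaferemove {int(saferemove)}",
    ]
    if nodes:
        lines.append(f"\tnodes {','.join(nodes)}")
    return "\n".join(lines) + "\n"


stanza = render_lvm_stanza("shared_iscsi1", "shared_iscsi1", ["pve1", "pve2", "pve3"])
print(stanza)
```

The rendered text is meant for comparison with what the web interface writes, not as a replacement for the Add dialog.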

Conclusion

The utilization of iSCSI via TCP in addition to Proxmox VE in a virtualization environment presents a compelling solution for organizations looking for cost-effective shared storage architectures. This approach leverages the widespread availability and compatibility of Ethernet-based networks, avoiding the need for specialized hardware such as Fibre Channel, which can be cost-prohibitive for many enterprises.

However, block-level storage with SAN protocols (FC/iSCSI/NVMe-oF) is typically restricted to the VM Disk and Container Image content types supported by Proxmox VE. Additionally, guest VM snapshots and thin provisioning are currently not supported when using LVM/iSCSI storage in Proxmox VE.

NetApp storage can also serve alternatives that cover the needs of Proxmox VE more completely. NAS protocols such as NFS support all content types of Proxmox VE and are typically configured once at the datacenter level. Guest VMs can use disks of type raw, qcow2, or VMDK on NAS protocol storage. Furthermore, guest VM snapshots and thin provisioning are supported with the qcow2 format.

At your convenience, we are available to provide more insights into NetApp storage systems, covering both hardware and software aspects. Our expertise also extends to open-source products, especially in establishing virtualization environments using technologies like Proxmox and OpenShift or in maintaining them with configuration management. We invite you to reach out for any assistance you require.

You might also be interested in learning how to migrate VMs from VMware ESXi to Proxmox VE, how to include the Proxmox Backup Server in your infrastructure, or in our post NetApp Storage and NVMe-oF for Breakthrough Performance in Proxmox Virtualization Environments.

ONTAP snapshots for Proxmox VE

In modern IT infrastructure, virtualization is the key to efficient resource management. With virtualization, memory, CPU, network and storage resources can easily be assigned to and shared between virtual machines (VMs). Virtualization also makes it easy to change the resources assigned to virtual machines or to clone virtual machines as needed. Since the virtual machine's hard disk is just a file, it can easily be resized, copied and backed up.

Motivation

One of the many advantages of virtualization is the ability to easily create snapshots of the virtual machine disk images.

Proxmox VE offers this ability for the qcow2 disk image format, but not for the raw format on NFS-based storage. When creating a VM disk snapshot on the local filesystem, Proxmox VE uses the features of the underlying filesystem to create snapshots. When the VM disk is placed on NFS storage, Proxmox VE cannot use any filesystem features to create a snapshot and therefore has to fall back to file-based snapshots.

Since NetApp ONTAP offers snapshot features on file level and on volume level using its own filesystem features, it can be an advantage to use these features instead of the Proxmox VE file-based snapshots.

The common way to connect Proxmox VE with NetApp ONTAP would be NFS. NetApp ONTAP also supports iSCSI, but that is out of scope, as an iSCSI connected storage offers full access to the filesystem and therefore Proxmox VE could use the filesystem features.

ONTAP snapshots

A FileClone is a copy of a file that points to the same blocks as the original file; only changes are written to new blocks. Creating a FileClone therefore happens instantly, and writing to a FileClone does not incur the overhead that a file-based snapshot in Proxmox VE does. Deleting the snapshot, or rather merging it back into the virtual disk image, is also unnecessary, as a FileClone is a full copy of the original virtual disk. Simply delete the original virtual disk and continue using the FileClone, or, if a roll-back is necessary, switch back to the original virtual disk image and delete the clone.

A VolumeClone works the same way as the FileClone, but on volume level. A VolumeClone creates an instant copy of a complete volume, a Snapshot. The Snapshot references the same blocks as the original volume, and changes on the original volume are written to new blocks. It is also possible to access the Snapshot by creating a new volume from it; this is called a FlexClone volume.

This can be used to create a snapshot of a Proxmox VE storage with all its virtual machine disk images instead of creating snapshots of single virtual machine disk images. With the FlexClone volume functionality it is easy to access the data in the clone.

Since Proxmox VE does not support ONTAP features directly, there is a small Python script that uses the ONTAP REST API and the Proxmox VE API to make these features easily accessible. The script is able to create a FileClone of a virtual machine disk image. It can create the clone from a running VM, but it is also able to suspend or stop a VM before creating the clone and then start the VM again.

The script also gives easy access to the VolumeClone and FlexClone features: it is able to create, manage, mount, unmount and delete clones of a volume. When a clone of a volume is mounted, it is also automatically attached to Proxmox VE as an additional storage.
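To illustrate the order of operations, here is a minimal sketch of how such a FileClone workflow could be planned. This is not the script mentioned above, only an illustration. It builds a list of API calls without sending them. The Proxmox VE status paths and the ONTAP file clone endpoint with its field names are assumptions based on our reading of the respective API references; the node, VM ID, volume, and file paths are hypothetical.

```python
def file_clone_plan(node: str, vmid: int, volume: str,
                    source_path: str, destination_path: str,
                    mode: str = "running") -> list:
    """Return the ordered API calls for cloning a VM disk image.

    mode: "running" clones without touching the VM, "suspend" and "stop"
    pause or shut down the VM first and bring it back afterwards.
    """
    if mode not in ("running", "suspend", "stop"):
        raise ValueError(f"unknown mode: {mode}")

    status = f"/api2/json/nodes/{node}/qemu/{vmid}/status"
    before = {"suspend": "suspend", "stop": "stop"}
    after = {"suspend": "resume", "stop": "start"}

    steps = []
    if mode in before:
        steps.append(("proxmox", "POST", f"{status}/{before[mode]}", {}))
    # Assumed ONTAP REST endpoint for creating a FileClone inside a volume.
    steps.append(("ontap", "POST", "/api/storage/file/clone", {
        "volume": {"name": volume},
        "source_path": source_path,
        "destination_path": destination_path,
    }))
    if mode in after:
        steps.append(("proxmox", "POST", f"{status}/{after[mode]}", {}))
    return steps


plan = file_clone_plan("pve1", 100, "proxmox_nfs",
                       "images/100/vm-100-disk-0.raw",
                       "images/100/vm-100-disk-0-clone.raw",
                       mode="suspend")
for system, method, path, body in plan:
    print(system, method, path, body)
```

Suspending or stopping the VM first trades a short interruption for a clone taken while no writes are in flight.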

NFS features

In Linux kernel 5.3 the NFS mount option `nconnect` was introduced. By default an NFS client uses one TCP connection to the server, which can become a bottleneck under high NFS workloads. With the nconnect option, the number of TCP connections per server can be increased to up to 16.

nconnect is supported by Proxmox VE since version 6.2 and by ONTAP 9. It can easily be used by adding nconnect=<value> to the mount options for the NFS share on the client side.

The other interesting NFS mount option is max_connect. max_connect sets the maximum number of connections to different server IPs belonging to the same NFSv4.1+ server. This is called trunking and is a multipath functionality. The difference is that nconnect sets the number of TCP connections to one server IP, while max_connect sets the number of TCP connections to the same server over multiple IPs. This is supported by ONTAP since version 9.14.1.

Combining the options is possible, but might not lead to the desired result.
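As a small illustration, the following sketch assembles an NFS mount option string and enforces the nconnect limit of 16 mentioned above. The function name is hypothetical, and no upper bound is checked for max_connect here.

```python
def nfs_mount_options(vers=None, nconnect=None, max_connect=None) -> str:
    """Build a comma-separated NFS mount option string."""
    opts = []
    if vers is not None:
        opts.append(f"vers={vers}")
    if nconnect is not None:
        # nconnect: TCP connections to a single server IP (1 to 16).
        if not 1 <= nconnect <= 16:
            raise ValueError("nconnect must be between 1 and 16")
        opts.append(f"nconnect={nconnect}")
    if max_connect is not None:
        # max_connect: connections to different IPs of the same NFSv4.1+ server.
        if max_connect < 1:
            raise ValueError("max_connect must be at least 1")
        opts.append(f"max_connect={max_connect}")
    return ",".join(opts)


print(nfs_mount_options(vers="4.1", nconnect=4))
```

The resulting string can be passed as the mount options of the NFS share on the client side.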

More

To further optimize resource usage, data deduplication on storage systems is an important feature, especially since virtual machine disk images share a lot of identical (operating system) data. Deduplication reduces the used space very effectively in this use case. ONTAP supports deduplication.

The issue of table and index bloat due to failed inserts on unique constraints is well known and has been discussed in various articles across the internet. However, these discussions sometimes lack a clear, practical example with measurements to illustrate the impact. And despite the familiarity of this issue, we still frequently see this design pattern (or rather, anti-pattern) in real-world applications. Developers often rely on unique constraints to prevent duplicate values from being inserted into tables. While this approach is straightforward, versatile, and generally considered effective, in PostgreSQL, inserts that fail due to unique constraint violations unfortunately always lead to table and index bloat. On high-traffic systems, this unnecessary bloat can significantly increase disk I/O and the frequency of autovacuum runs. In this article, we aim to highlight this problem once again and provide a straightforward example with measurements to illustrate it. We suggest a simple improvement that can help mitigate this issue and reduce autovacuum workload and disk I/O.

Two Approaches to Duplicate Prevention

In PostgreSQL, there are two main ways to prevent duplicate values using unique constraints:

1. Standard Insert Command (INSERT INTO table)

The usual INSERT INTO table command attempts to insert data directly into the table. If the insert would result in a duplicate value, it fails with a “duplicate key value violates unique constraint” error. Since the command does not specify any duplicate checks, PostgreSQL internally immediately inserts the new row and only then begins updating indexes. When it encounters a unique index violation, it triggers the error and deletes the newly added row. The order of index updates is determined by their relation IDs, so the extent of index bloat depends on the order in which indexes were created. With repeated “unique constraint violation” errors, both the table and some indexes accumulate deleted records leading to bloat, and the resulting write operations increase disk I/O without achieving any useful outcome.

2. Conflict-Aware Insert (INSERT INTO table … ON CONFLICT DO NOTHING)

The INSERT INTO table ON CONFLICT DO NOTHING command behaves differently. Since it specifies that a conflict might occur, PostgreSQL first checks for potential duplicates before attempting to insert data. If a duplicate is found, PostgreSQL performs the specified action—in this case, “DO NOTHING”—and no error occurs. This clause was introduced in PostgreSQL 9.5, but some applications either still run on older PostgreSQL versions or retain legacy code when the database is upgraded. As a result, this conflict-handling option is often underutilized.
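The difference in observable behavior between the two commands can be sketched in a few lines. Note that this example uses Python's built-in sqlite3 module purely as a stand-in so that it runs without a database server: SQLite accepts the same ON CONFLICT (column) DO NOTHING syntax (SQLite 3.24 or newer), but it does not reproduce PostgreSQL's dead tuples or bloat. The table and column names are hypothetical.

```python
import sqlite3

# In-memory SQLite database as a stand-in; this is NOT PostgreSQL.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT UNIQUE)")
conn.execute("INSERT INTO users (email) VALUES (?)", ("a@example.com",))


def plain_insert(email: str) -> bool:
    """Standard insert: a duplicate raises a unique constraint error."""
    try:
        conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
        return True
    except sqlite3.IntegrityError:
        return False


def conflict_aware_insert(email: str) -> None:
    """Conflict-aware insert: a duplicate is skipped without an error."""
    conn.execute(
        "INSERT INTO users (email) VALUES (?) ON CONFLICT (email) DO NOTHING",
        (email,),
    )


def row_count() -> int:
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


print(plain_insert("a@example.com"))   # duplicate: the error is caught
conflict_aware_insert("a@example.com")  # duplicate: silently skipped
print(row_count())
```

In PostgreSQL the first variant additionally leaves a dead row behind on every failed attempt, which is the effect examined in the rest of this article.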

Testing Example

For testing, we must start PostgreSQL with “autovacuum=off”. Otherwise, with the instance mostly idle, autovacuum would immediately process the bloated objects and we would be unable to capture statistics. We create a simple testing example with multiple indexes: