Mönchengladbach (DE) / Naarden (NL) — February 4th, 2026

Splendid Data and credativ GmbH announce strategic partnership to accelerate Oracle-to-PostgreSQL migration for enterprises.

Splendid Data and credativ GmbH today announced a strategic partnership to help organisations modernize complex Oracle database environments by migrating to native PostgreSQL in a predictable, scalable and future-proof manner.

The partnership addresses growing enterprise demand to reduce escalating database licensing costs while regaining autonomy, ensuring digital sovereignty and preparing data platforms for AI-driven use cases. PostgreSQL is increasingly selected as a strategic database foundation due to its open architecture, strong ecosystem and suitability for modern, data-intensive workloads.

Splendid Data contributes its Cortex automation platform, designed to industrialize large-scale Oracle-to-PostgreSQL migrations and reduce risk across complex database estates. credativ adds deep PostgreSQL expertise, enterprise-grade platform delivery and 24×7 operational support for mission-critical environments. Together, the partners also align on PostgresPURE, a production-grade, pure open-source PostgreSQL platform without proprietary extensions or vendor lock-in.

“Enterprises are making long-term decisions about licensing exposure, autonomy and AI readiness,” said Michel Schöpgens, CEO of Splendid Data. “This partnership combines migration automation with trusted PostgreSQL operations.”

David Brauner, Managing Director at credativ, added: “Together we enable customers to modernize faster while maintaining control, transparency and operational reliability.”

The initial geographic focus of the partnership is Germany, where credativ has a strong customer base. The model is designed to scale toward international enterprise customers over time. Joint activities will include coordinated go-to-market efforts, enablement of credativ teams on Cortex, and the delivery of initial pilot projects with shared customers.

Both companies view the partnership as a long-term collaboration aimed at setting a new standard for large-scale, open-source database modernization in Europe.

About credativ

credativ GmbH is an independent consulting and service company focusing on open source software. Following the spin-off from NetApp in spring 2025, credativ combines decades of community expertise with professional enterprise standards to make IT infrastructures secure, sovereign and future-proof, without losing the connection to its history of around 25 years of work in and with the open source community.

About Splendid Data

Splendid Data is a PostgreSQL specialist focused on large-scale Oracle-to-PostgreSQL migrations for enterprises with complex database estates. Its Cortex platform enables automated, repeatable migrations, while PostgresPURE provides a production-grade, open-source PostgreSQL platform without vendor lock-in.

Contact credativ:

Peter Dreuw, Head of Sales & Marketing (peter.dreuw@credativ.de)

Contact Splendid Data:

Michel Schöpgens, CEO (michel.schopgens@splendiddata.com)

Announcing the initial release of the python-multiprocessing-intenum module, providing a multiprocessing safe enum value for the Python programming language.

Background

Within a large customer project involving multiple components running in a Kubernetes environment, we faced a specific problem with no generic solution available when implementing a daemon worker component.

As only one calculation can run at a time, the component has an internal state that tracks whether it is idle and ready to accept new calculations, busy calculating, or about to shut itself down, e.g. during a rolling upgrade.

Besides the calculation process it spawns, the component serves a Django REST Framework based API from multiple web server processes or threads. Another scheduler component uses this API to query the worker's current state and to submit new calculation jobs. The internal state therefore needs to be safely accessed and modified from multiple processes and/or threads.

In most high-level programming languages, including Python, the natural data type for tracking a state is an enum type, which assigns symbolic names to the states. For sharing data across multiple processes or threads, Python offers the multiprocessing module, which provides shared ctypes objects, i.e. basic data types such as characters and integers, as well as locking primitives for sharing larger, more complex data structures.

It does not provide a ready to use way to share an enum value though.

The problem being pretty common, it called for a generic and reusable solution and so the idea for IntEnumValue was born, an implementation of a multiprocessing safe shared object for IntEnum enum values.

Features

To the user it appears, and can be used, much like a Python IntEnum, which is an enum whose named values each correspond to a unique integer.

Internally, it uses a multiprocessing.Value shared ctypes integer object to store the integer value of the enum in a multiprocessing safe way.

To support a coding style that proactively guards against programming errors, the module is fully typed, allowing static checking with mypy, and is implemented as a generic class that takes the specific underlying enum type as a type parameter.

The python-multiprocessing-intenum module comes with unit tests providing full coverage of its code.
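To make the idea concrete, here is a minimal sketch of how an IntEnum member can be kept in a multiprocessing.Value and exposed through a generic class. It is not the module's actual implementation: the names SharedIntEnum, DemoStatus and DemoState are hypothetical, and only multiprocessing.Value, typing.Generic and enum.IntEnum are real standard-library APIs.

```python
import enum
import multiprocessing
from enum import IntEnum
from typing import Any, Generic, TypeVar

E = TypeVar("E", bound=IntEnum)


class SharedIntEnum(Generic[E]):
    """Hypothetical sketch: an IntEnum member stored as a shared C int."""

    def __init__(self, initial: E, lock: Any = True) -> None:
        # Remember the concrete IntEnum class so later assignments can be checked.
        self._enum_type = type(initial)
        # multiprocessing.Value keeps the int in shared memory; lock=True creates
        # a new RLock, while passing an existing lock lets several values share it.
        self._shared = multiprocessing.Value("i", int(initial), lock=lock)

    def get_lock(self) -> Any:
        return self._shared.get_lock()

    def set(self, member: E) -> None:
        # Reject members of any other IntEnum type.
        if not isinstance(member, self._enum_type):
            raise TypeError(f"Can not set {self._enum_type} to value of type {type(member)}")
        # The synchronized wrapper acquires its lock internally on assignment.
        self._shared.value = int(member)

    def get(self) -> E:
        return self._enum_type(self._shared.value)

    @property
    def name(self) -> str:
        return self.get().name

    @property
    def value(self) -> int:
        return self._shared.value


class DemoStatus(IntEnum):
    IDLE = enum.auto()
    BUSY = enum.auto()


class DemoState(SharedIntEnum[DemoStatus]):
    """Parametrised with one specific enum, like WorkerStatus in the examples."""
```

Because the integer lives in shared memory, child processes that receive such an object when they are started see and update the same value.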

Usage Examples

To illustrate the usage and better demonstrate the features, an example of a multithreaded worker is used, which has an internal state represented as an enum that should be tracked across its threads.

To use a multiprocessing safe IntEnum, first define the actual underlying IntEnum type:

```python
import enum
from enum import IntEnum


class WorkerStatusEnum(IntEnum):
    UNAVAILABLE = enum.auto()
    IDLE = enum.auto()
    CALCULATING = enum.auto()
```

IntEnumValue is a generic class, accepting a type parameter for the type of the underlying IntEnum.

Thus define the corresponding IntEnumValue type for that IntEnum like this:

```python
from multiprocessing_intenum import IntEnumValue


class WorkerStatus(IntEnumValue[WorkerStatusEnum]):
    pass
```

This defines a new WorkerStatus type: an IntEnumValue parametrized to handle only the specific IntEnum type WorkerStatusEnum.

It can be used like an enum to track the state:

```python
>>> status = WorkerStatus(WorkerStatusEnum.IDLE)
>>> status.name
'IDLE'
>>> status.value
2
>>> with status.get_lock():
...     status.set(WorkerStatusEnum.CALCULATING)
...
>>> status.name
'CALCULATING'
>>> status.value
3
```

Trying to set it to a member of a different IntEnum type, however, raises a TypeError:

```python
>>> class FruitEnum(IntEnum):
...     APPLE = enum.auto()
...     ORANGE = enum.auto()
...
>>> status.set(FruitEnum.APPLE)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/home/elho/python-multiprocessing-intenum/src/multiprocessing_intenum/__init__.py", line 60, in set
    raise TypeError(message)
TypeError: Can not set '<enum 'WorkerStatusEnum'>' to value of type '<enum 'FruitEnum'>'
```

This guards against programming errors in which the wrong enum is used by mistake.

Besides being used directly, the created WorkerStatus type can, of course, also be wrapped in a dedicated class, which the remaining examples build on:

```python
class WorkerState:
    def __init__(self) -> None:
        self.status = WorkerStatus(WorkerStatusEnum.IDLE)
```

When multiple multiprocessing.Value instances (including IntEnumValue ones) should share one lock, so that they can only be changed together and always stay in a consistent state, pass that shared lock as the lock keyword argument on instantiation:

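```python
import multiprocessing
from multiprocessing.synchronize import Lock, RLock


class WorkerState:
    def __init__(self) -> None:
        # One lock shared by all values, so they are only changed together.
        self.lock = multiprocessing.RLock()
        self.status = WorkerStatus(WorkerStatusEnum.IDLE, lock=self.lock)
        self.job_id = multiprocessing.Value("i", -1, lock=self.lock)

    def get_lock(self) -> Lock | RLock:
        return self.lock
```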
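The shared lock pays off whenever a decision depends on several values at once. The following sketch uses plain multiprocessing.Value objects instead of WorkerState, with a hypothetical Status enum and a hypothetical try_start_job() helper. It accepts a job only while the worker is idle and updates the status and the job id as one step.

```python
import enum
import multiprocessing
from enum import IntEnum


class Status(IntEnum):  # hypothetical stand-in for WorkerStatusEnum
    UNAVAILABLE = enum.auto()
    IDLE = enum.auto()
    CALCULATING = enum.auto()


# One re-entrant lock shared by both values, as in WorkerState.
lock = multiprocessing.RLock()
status = multiprocessing.Value("i", int(Status.IDLE), lock=lock)
job_id = multiprocessing.Value("i", -1, lock=lock)


def try_start_job(new_job_id: int) -> bool:
    """Hypothetical helper: accept a job only if the worker is idle."""
    # Holding the shared lock turns the check and both writes into one step,
    # so nobody can observe CALCULATING together with a stale job_id.
    with lock:
        if status.value != Status.IDLE:
            return False
        status.value = int(Status.CALCULATING)
        job_id.value = new_job_id
        return True
```

An RLock rather than a plain Lock is used here because every .value access on a synchronized Value acquires its lock again internally; with a non-reentrant Lock, reading status.value inside the with block would block forever.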

To avoid having to call the set() method to assign a value to the IntEnumValue attribute, it is suggested to keep the actual attribute private to the class and implement getter and setter methods for a public property that hides this implementation detail, e.g. as follows:

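```python
class WorkerState:
    def __init__(self) -> None:
        # Shared lock and get_lock() as in the previous example.
        self.lock = multiprocessing.RLock()
        self._status = WorkerStatus(WorkerStatusEnum.IDLE, lock=self.lock)

    def get_lock(self) -> Lock | RLock:
        return self.lock

    @property
    def status(self) -> WorkerStatusEnum:
        return self._status  # type: ignore[return-value]

    @status.setter
    def status(self, status: WorkerStatusEnum | str) -> None:
        self._status.set(status)
```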

The result can be used in a more elegant manner by simply assigning to the status attribute:

```python
>>> state = WorkerState()
>>> state.status.name
'IDLE'
>>> with state.get_lock():
...     state.status = WorkerStatusEnum.CALCULATING
...
>>> state.status.name
'CALCULATING'
```

The specific IntEnumValue type can override methods to add further functionality.

A common example is overriding the set() method to add logging:

```python
import logging

logger = logging.getLogger(__name__)


class WorkerStatus(IntEnumValue[WorkerStatusEnum]):
    def set(self, value: WorkerStatusEnum | str) -> None:
        super().set(value)
        logger.info(f"WorkerStatus set to '{self.name}'")
```

Putting all these features and use cases together allows the internal state of a multiprocessing worker to be handled in an elegant, cohesive and robust way.

credativ GmbH, a specialized service provider for open source solutions, is solidifying its position as a strategic partner for security-critical IT infrastructures. Since December 15, the company has been officially certified according to the international standards ISO/IEC 27001:2022 (Information Security) and ISO 9001:2015 (Quality Management).

Expertise from corporate structures – Agility as an independent company

This success is particularly remarkable, as credativ GmbH has only been operating independently on the market in its current form since March 1, 2025. The speed of certification within just nine months after the spin-off from NetApp Deutschland GmbH is no coincidence: the time within the corporate structures of NetApp was used specifically to build up in-depth know-how in highly regulated processes and global security standards.

A strong signal for public authorities and major clients

The certification covers all services provided by credativ GmbH. In doing so, the company creates a seamless basis of trust, which is essential, in particular, for cooperation with

Statements on the certification

David Brauner, Managing Director of credativ GmbH, emphasizes the strategic importance:

“Since our restart into independence on March 1, we have had a clear goal: to seamlessly transfer the high standards that we know from our time in the corporate environment into our new structure. The certification is a promise to our customers in the public sector and in the enterprise sector that we not only understand the high requirements for compliance and security, but also master them at the highest level.”

Alexander Wirt, CTO of credativ GmbH, adds:

“Quality and security are just as deeply rooted in us as the connection to open source. We have used the valuable experience from our time at NetApp to put our internal processes on a certifiable footing from day one. For our customers, this means that they receive the usual open source excellence, combined with the security of a partner working according to the most modern ISO standards.”

The advantages at a glance:

- Corporate-proven knowledge: Transfer of the highest compliance standards into an agile service environment.

- Highest data security: Protection of sensitive information according to the latest standard ISO/IEC 27001:2022.

- Verified process quality: Consistent management according to ISO 9001:2015 across all service areas.

- Ideal partner for the public sector: Fulfillment of all regulatory requirements for public authorities and critical infrastructures.

The European PostgreSQL Conference (PGConf.EU) is one of the largest PostgreSQL events worldwide. This year it was held on 21–24 October in Riga, Latvia. Our company, credativ GmbH, was a bronze sponsor of the conference, and I had the privilege of representing credativ with my talk “Database in Distress: Testing and Repairing Different Types of Database Corruption.” In addition, I volunteered as a session host on Thursday and Friday. The conference itself covered a wide range of PostgreSQL topics, from cloud-native deployments to AI integration and from large-scale migrations to resiliency. Below are highlights from sessions I attended, organised by day.

My talk about database corruption

I presented my talk on Friday afternoon. In it I dove into real-world cases of PostgreSQL database corruption I encountered over the past two years. To investigate these issues, I built a Python tool that deliberately corrupts database pages and then examined the results using PostgreSQL’s pageinspect extension. During the talk I demonstrated various corruption scenarios and the errors they produce, explaining how to diagnose each case. A key point was that PostgreSQL 18 now enables data checksums by default at initdb. Checksums allow damaged pages to be detected and safely “zeroed out” (skipping corrupted data) during recovery. Without checksums, only pages with clearly corrupted headers can be automatically removed using the zero_damaged_pages = on setting. Other types of corruption require careful manual salvage. I concluded by suggesting improvements (in code or settings) to make recovery easier on clusters without checksums.
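The idea of deliberately corrupting a page and then detecting the damage can be illustrated with a small, hypothetical sketch; it is not the tool from the talk. It assumes PostgreSQL's default 8192-byte block size, overwrites bytes inside one page of a dummy relation file, and finds the damaged page by comparing per-page checksums. zlib.crc32, kept outside the file, is only a stand-in: PostgreSQL's data checksums use a different algorithm and are stored in each page header (pd_checksum). The helper names are hypothetical, and anything like this should only ever be run against a copy of a stopped cluster.

```python
import tempfile
import zlib
from pathlib import Path

BLCKSZ = 8192  # PostgreSQL's default page (block) size in bytes


def corrupt_page(path: Path, page_no: int, offset: int, data: bytes) -> None:
    """Overwrite bytes inside a single page of a copied relation file."""
    if offset < 0 or offset + len(data) > BLCKSZ:
        raise ValueError("corruption must stay inside one page")
    with open(path, "r+b") as f:
        f.seek(page_no * BLCKSZ + offset)
        f.write(data)


def page_checksums(path: Path) -> list[int]:
    """CRC32 per page: a stand-in, not PostgreSQL's checksum algorithm."""
    raw = path.read_bytes()
    return [zlib.crc32(raw[i:i + BLCKSZ]) for i in range(0, len(raw), BLCKSZ)]


def damaged_pages(path: Path, expected: list[int]) -> list[int]:
    """Page numbers whose current checksum differs from the recorded one."""
    current = page_checksums(path)
    return [n for n, (now, before) in enumerate(zip(current, expected)) if now != before]


def demo() -> list[int]:
    """Corrupt 16 bytes of page 1 in a 3-page dummy file and report damaged pages."""
    with tempfile.TemporaryDirectory() as tmp:
        rel = Path(tmp) / "relation"
        rel.write_bytes(bytes(3 * BLCKSZ))
        recorded = page_checksums(rel)
        corrupt_page(rel, page_no=1, offset=100, data=b"\xff" * 16)
        return damaged_pages(rel, recorded)
```

Calling demo() reports only page 1 as damaged, which mirrors why checksums make it possible to pinpoint and skip individual bad pages.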

Tuesday: Kubernetes and AI Summits

Tuesday began with two half-day Summits. The PostgreSQL on Kubernetes Summit explored running Postgres in cloud-native environments. Speakers compared Kubernetes operators (CloudNativePG, Crunchy, Zalando, etc.), backup/recovery in Kubernetes, scaling strategies, monitoring, and zero-downtime upgrades. They discussed operator architectures and multi-tenant DBaaS use cases. Attendees gained practical insight into trade-offs of different operators and how to run Kubernetes-based Postgres for high availability.

In the PostgreSQL & AI Summit, experts examined Postgres’s role in AI applications. Topics included vector search (e.g. pgvector), hybrid search, using Postgres as context storage for AI agents, conversational query interfaces, and even tuning Postgres with machine learning. Presenters shared best practices and integration strategies for building AI-driven solutions with Postgres. In short, the summit explored how PostgreSQL can serve AI workloads (and vice versa) and what new features or extensions are emerging for AI use cases.

Wednesday: Migrations, Modelling, and Performance

Joaquim Oliveira (European Space Agency) discussed moving astronomy datasets (from ESA’s Gaia and Euclid missions) off Greenplum. The team considered both scaling out with Citus and moving to EDB’s new Greenplum-based cloud warehouse. He covered the practical pros and cons of each path and the operational changes required to re-architect such exascale workloads. The key lesson was to plan the architecture, tooling, and administrative changes before undertaking a petabyte-scale migration.

Boriss Mejias (EDB) emphasised that data modelling is fundamental to software projects. Using a chess-tournament application as an example, he showed how to let PostgreSQL enforce data integrity. By carefully choosing data types and constraints, developers can embed much of the business logic directly in the schema. The talk demonstrated “letting PostgreSQL guarantee data integrity” and building application logic at the database layer.

Roberto Mello (Snowflake) reviewed the many optimizer and execution improvements in Postgres 18. For example, the planner now automatically eliminates unnecessary self-joins, converts IN (VALUES…) clauses into more efficient forms, and transforms OR clauses into arrays for faster index scans. It also speeds up set operations (INTERSECT, EXCEPT), window aggregates, and optimises SELECT DISTINCT and GROUP BY by reordering keys and ignoring redundant columns. Roberto compared query benchmarks across Postgres 16, 17, and 18 to highlight these gains.

Nelson Calero (Pythian) shared a “practical guide” for migrating 100+ PostgreSQL databases (from gigabytes to multi-terabytes) to the cloud. His team moved hundreds of on-prem VM databases to Google Cloud SQL. He discussed planning, downtime minimisation, instance sizing, tools, and post-migration tuning. In particular, he noted challenges like handling old version upgrades, inheritance schemas, PostGIS data, and service-account changes. Calero’s advice included choosing the right cloud instance types, optimising bulk data loads, and validating performance after migration.

Jan Wieremjewicz (Percona) recounted implementing Transparent Data Encryption (TDE) for Postgres via the pg_tde extension. He took the audience through the entire journey – from the initial idea, through patch proposals, to community feedback and design trade-offs. He explained why existing PostgreSQL hooks weren’t enough, what friction was encountered, and how customer feedback shaped the final design. This talk served as a “diary” of what it takes to deliver a core encryption feature through the PostgreSQL development process.

Stefan Fercot (Data Egret) demonstrated how to use Patroni (for high availability) together with pgBackRest (for backups). He walked through YAML configuration examples showing how to integrate pgBackRest into a Patroni-managed cluster. Stefan showed how to rebuild standby replicas from pgBackRest backups and perform point-in-time recovery (PITR) under Patroni’s control. The talk highlighted real-world operational wisdom: combining these tools provides automated, repeatable disaster recovery for Postgres clusters.

Thursday: Cloud, EXPLAIN, and Resiliency

Maximilian Stefanac and Philipp Thun (SAP SE) explained how SAP uses PostgreSQL within Cloud Foundry (SAP’s open-source PaaS). They discussed optimisations and scale challenges of running Postgres for SAP’s Business Technology Platform. Over the years, SAP’s Cloud Foundry team has deployed Postgres on AWS, Azure, Google Cloud, and Alibaba Cloud. Each provider’s offerings differ, so unifying automation and monitoring across clouds is a major challenge. The talk highlighted how SAP contributes Postgres performance improvements back to the community and what it takes to operate large-scale, cloud-neutral Postgres clusters.

In “EXPLAIN: Make It Make Sense,” Aivars Kalvāns (Ebury) helped developers interpret query plans. He emphasized that after identifying a slow query, you must understand why the planner chose a given plan and whether it is optimal. Aivars walked through EXPLAIN output and shared rules of thumb for spotting inefficiencies – for example, detecting missing indexes or costly operators. He illustrated common query anti-patterns he has seen in practice and showed how to rewrite them in a more database-friendly way. The session gave practical tips for decoding EXPLAIN and tuning queries.

Chris Ellis (Nexteam) highlighted built-in Postgres capabilities that simplify application development. Drawing on real-world use cases – such as event scheduling, task queues, search, geolocation, and handling heterogeneous data – he showed how features like range types, full-text search, and JSONB can reduce application complexity. For each use case, Chris demonstrated which Postgres feature or data type could solve the problem. This “tips & tricks” tour reinforced that leveraging Postgres’s rich feature set often means writing less custom code.

Andreas Geppert (Zürcher Kantonalbank) described a cross-cloud replication setup for disaster resilience. Faced with a requirement that at most 15 minutes of data could be lost if any one cloud provider failed, they could not use physical replication (since their cloud providers don’t support it). Instead, they built a multi-cloud solution using logical replication. The talk covered how they keep logical replicas up-to-date even as schemas change (noting that logical replication doesn’t automatically copy DDL). In short, logical replication enabled resilient, low-RPO operation across providers despite schema evolution.

Derk van Veen (Adyen) tackled the deeper rationale behind table partitioning. He emphasised the importance of finding the right partition key – the “leading figure” in your data – and then aligning partitions across all related tables. When partitions share a common key and aligned boundaries, you unlock multiple benefits: decent performance, simplified maintenance, built-in support for PII compliance, easy data cleanup, and even transparent data tiering. Derk warned that poorly planned partitions can hurt performance terribly. In his case, switching to properly aligned partitions (and enabling enable_partitionwise_join/_aggregate) yielded a 70× speedup on 100+ TB financial tables. All strategies he presented have been battle-tested in Adyen’s multi-100 TB production database.

Friday: Other Advanced Topics

Nicholas Meyer (Academia.edu) introduced thin cloning, a technique for giving developers real production data snapshots for debugging. Using tools like DBLab Engine or Amazon Aurora’s clone feature, thin cloning creates writable copies of live data inexpensively. This lets developers reproduce production issues exactly – including data-dependent bugs – by debugging against these clones of real data. Nicholas explained how Academia.edu uses thin clones to catch subtle bugs early by having dev and QA teams work with near-production data.

Dave Pitts (Adyen) explained why future Postgres applications may use both B-tree and LSM-tree (log-structured) indexes. He outlined the fundamental differences: B-trees excel at point lookups and balanced reads/writes, while LSM-trees optimise high write throughput and range scans. Dave discussed “gotchas” when switching workloads between index types. The talk clarified when each structure is advantageous, helping developers and DBAs choose the right index for their workload.

A panel led by Jimmy Angelakos addressed “How to Work with Other Postgres People”. The discussion focused on mental health, burnout, and neurodiversity in the PostgreSQL community. Panelists highlighted that unaddressed mental-health issues cause stress and turnover in open-source projects. They shared practical strategies for a more supportive culture: personal “README” guides to explain individual communication preferences, respectful and empathetic communication practices, and concrete conflict resolution techniques. The goal was to make the Postgres community more welcoming and resilient by understanding diverse needs and supporting contributors effectively.

Lukas Fittl (pganalyze) presented new tools for tracking query plan changes over time. He showed how to assign stable Plan IDs (analogous to query IDs) so that DBAs can monitor which queries use which plan shapes. Lukas introduced the new pg_stat_plans extension (leveraging Postgres 18’s features) for low-overhead collection of plan statistics. He explained how this extension works and compared it to older tools (the original pg_stat_plans, pg_store_plans, etc.) and cloud provider implementations. This makes it easier to detect when a query’s execution plan changes in production, aiding performance troubleshooting.

Ahsan Hadi (pgEdge) described pgEdge Enterprise PostgreSQL, a 100% open-source distributed Postgres platform. pgEdge Enterprise Postgres provides built-in high availability (using Patroni and read replicas) and the ability to scale across global regions. Starting from a single-node Postgres, users can grow to a multi-region cluster with geo-distributed replicas for extreme availability and low latency. Ahsan demonstrated how pgEdge is designed for organizations that need to scale from single instances to large distributed deployments, all under the standard Postgres license.

Conclusion

PGConf.EU 2025 was an excellent event for sharing knowledge and learning from the global PostgreSQL community. I was proud to represent credativ and to help as a volunteer, and I’m grateful for the many insights gained. The sessions above represent just a selection of the rich content covered at the conference. Overall, PostgreSQL’s strong community and rapid innovation continue to make these conferences highly valuable. I look forward to applying what I learned in my work and to attending future PGConf.EU events.

This December, we are launching the very first Open Source Virtualization Meeting, also known as the Open Source Virtualization Gathering!

Join us for an evening of open exchange on virtualization with technologies such as KVM, Proxmox, and XCP-ng.

On September 4, 2025, the third pgday Austria took place in the Apothecary Wing of Schönbrunn Palace in Vienna, following the previous events in 2021 and 2022.

153 participants had the opportunity to attend a total of 21 talks and visit 15 different sponsors, discussing all possible topics related to PostgreSQL and the community.

Also present this time was the Sheldrick Wildlife Trust, which is dedicated to rescuing elephants and rhinos. Attendees could learn about the project, make donations, and participate in a raffle.

The talks ranged from topics such as benchmarking and crash recovery to big data.

Our colleague also gave a talk, “Postgres with many data: To MAXINT and beyond”.

As a special highlight, at the end of the day, before the networking event, and in addition to the almost obligatory lightning talks, there was a “Celebrity DB Deathmatch” where various community representatives came together for a very entertaining stage performance to find the best database in different disciplines. To everyone’s (admittedly not great) surprise, PostgreSQL was indeed able to excel in every category.

Additionally, our presence with our own booth gave us the opportunity to have many very interesting conversations and discussions with various community members, as well as sponsors and visitors in general.

For the first time since our return to independence, the new managing director of credativ GmbH was also on site and could see things for himself.

All in all, it was a (still) somewhat smaller but, as always, very instructive and familiar event. We are already looking forward to the next one and thank the organizers and the entire team on site and behind the scenes.

Dear Open Source Community, dear partners and customers,

We are pleased to announce that credativ GmbH is once again a member of the Open Source Business Alliance (OSBA) in Germany. This return is very important to us and continues a long-standing tradition, because even before the acquisition by NetApp,

The OSBA

OSBA aims to strengthen the use of Open Source Software (OSS) in businesses and public administration. We share this goal with deep conviction. Our renewed membership is intended not only to promote networking within the community but, above all, to support the important work of the OSBA in intensifying the public dialogue about the benefits of Open Source.

With our membership, we reaffirm our commitment to an open, collaborative, and sovereign digital future. We look forward to further advancing the benefits of Open Source together with the OSBA and all members.

Schleswig-Holstein’s Approach

We see open-source solutions not just as a cost-efficient alternative, but primarily as a path to greater digital sovereignty. The state of Schleswig-Holstein is a shining example here: It demonstrates how public authorities can initiate change and reduce dependence on commercial software. This not only leads to savings but also to independence from the business models and political influences of foreign providers.

Open Source Competition

Another highlight in the open-source scene is this year’s OSBA Open Source Competition. We are particularly pleased that this significant competition is being held this year under the patronage of Federal Digital Minister Dr. Karsten Wildberger. This underscores the growing importance of Open Source at the political level. The accompanying sponsor is the Center for Digital Sovereignty (ZenDiS), which also emphasizes the relevance of the topic.

We are convinced that the competition will make an important contribution to promoting innovative open-source projects and look forward to the impulses it will generate.

Further information about the competition can be found here:

Key Takeaways

- credativ GmbH has rejoined the Open Source Business Alliance (OSBA) in Germany.

- The OSBA promotes the use of open-source software in businesses and public administration.

- Membership reaffirms the commitment to an open digital future and fosters dialogue about Open Source.

- Schleswig-Holstein demonstrates how open-source solutions can enhance digital sovereignty.

- The OSBA’s Open Source Competition is under the patronage of Federal Minister for Digital Affairs Dr. Karsten Wildberger.

Initial situation

Some time ago, Bitnami announced that it would be switching its public container repositories.

Bitnami is known for providing both Helm charts and container images for a wide range of applications. These include Helm charts and container images for Keycloak, the RabbitMQ cluster operator, and many more. Many of these charts and images are used by companies, private individuals, and open source projects.

Currently, the images provided by Bitnami are based on Debian. In the future, the images will be based on specially hardened distroless images.

A timeline of the changes, including FAQs, can be found on GitHub.

What exactly is changing?

According to Bitnami, the current bitnami repository on Docker Hub will be converted on August 28, 2025. From that date onwards, all images available up to that point will only be available in the Bitnami Legacy repository.

Some of the new secure images are already available at bitnamisecure. However, without a subscription, only a very small subset of images will be provided: currently there are 343 different repositories under bitnami, but only 44 under bitnamisecure (as of 2025-08-19). In addition, the free versions of the new secure images are only available by digest. The only tag available, “latest,” always points to the most recently provided image, so the version behind it is not immediately apparent.

Action Required?

If you use Helm charts from Bitnami with container images from the current repository, action is required. Currently used Bitnami Helm charts mostly reference the (still) current repository.

Depending on your environment, the effects of the change may not be noticeable until later: for example, when a container or pod is restarted after the cached image has been cleaned up, or when a pod is started on a node that does not have the image cached. If a container proxy is used to obtain the images, this may happen even later.

Required adjustments

If you want to ensure that the images currently in use can continue to be obtained, you should switch to the Bitnami Legacy Repository. This is already possible.

Affected Helm charts can be patched with adjusted values files, for example.
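As an illustration, the following sketch rewrites every image repository value that starts with bitnami/ in a Helm values tree so that it points to the legacy namespace. It assumes the usual Bitnami chart layout with separate image.registry and image.repository keys, and it assumes the legacy images are published under the bitnamilegacy namespace on Docker Hub; verify both against the chart and the Bitnami announcement before use. The function name to_legacy is hypothetical.

```python
import json
from typing import Any

OLD_PREFIX = "bitnami/"
# Assumption: the legacy images are published under this Docker Hub namespace.
NEW_PREFIX = "bitnamilegacy/"


def to_legacy(values: Any) -> Any:
    """Return a copy of a Helm values tree whose bitnami/* image repositories
    point to the legacy namespace (hypothetical helper)."""
    if isinstance(values, dict):
        result = {}
        for key, val in values.items():
            if key == "repository" and isinstance(val, str) and val.startswith(OLD_PREFIX):
                result[key] = NEW_PREFIX + val[len(OLD_PREFIX):]
            else:
                result[key] = to_legacy(val)
        return result
    if isinstance(values, list):
        return [to_legacy(item) for item in values]
    return values  # scalars are returned unchanged


if __name__ == "__main__":
    # Hypothetical values excerpt following the usual Bitnami chart layout.
    chart_values = {"image": {"registry": "docker.io", "repository": "bitnami/keycloak"}}
    print(json.dumps(to_legacy(chart_values), indent=2))
```

Since JSON is valid YAML, the result could be written to a file and passed to helm upgrade with -f. Recent Bitnami charts may also refuse substituted images unless global.security.allowInsecureImages is set to true; check the chart's own values first.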

Unfortunately, the adjustments mentioned above are only a quick fix. If you want to use secure and updated images in the long term, you will have to make the switch.

This may mean switching to an alternative Helm chart or container image, accepting the new conditions of the bitnamisecure repository, or paying for the convenience you have enjoyed so far.

What are the alternatives?

For companies that are not prepared to pay the new license fees, there are various alternatives:

- Build your own container images: Companies can create their own container images based on Dockerfiles and official base images.

- Open source alternatives: There are numerous open source projects that offer functionality similar to Bitnami's. Directories such as Artifact Hub (the successor to the former Helm Hub) help to find them.

- Community charts: Many projects offer their own Helm charts, which are maintained by the community.

References

- https://news.broadcom.com/app-dev/broadcom-introduces-bitnami-secure-images-for-production-ready-containerized-applications

- https://github.com/bitnami/charts/issues/35164

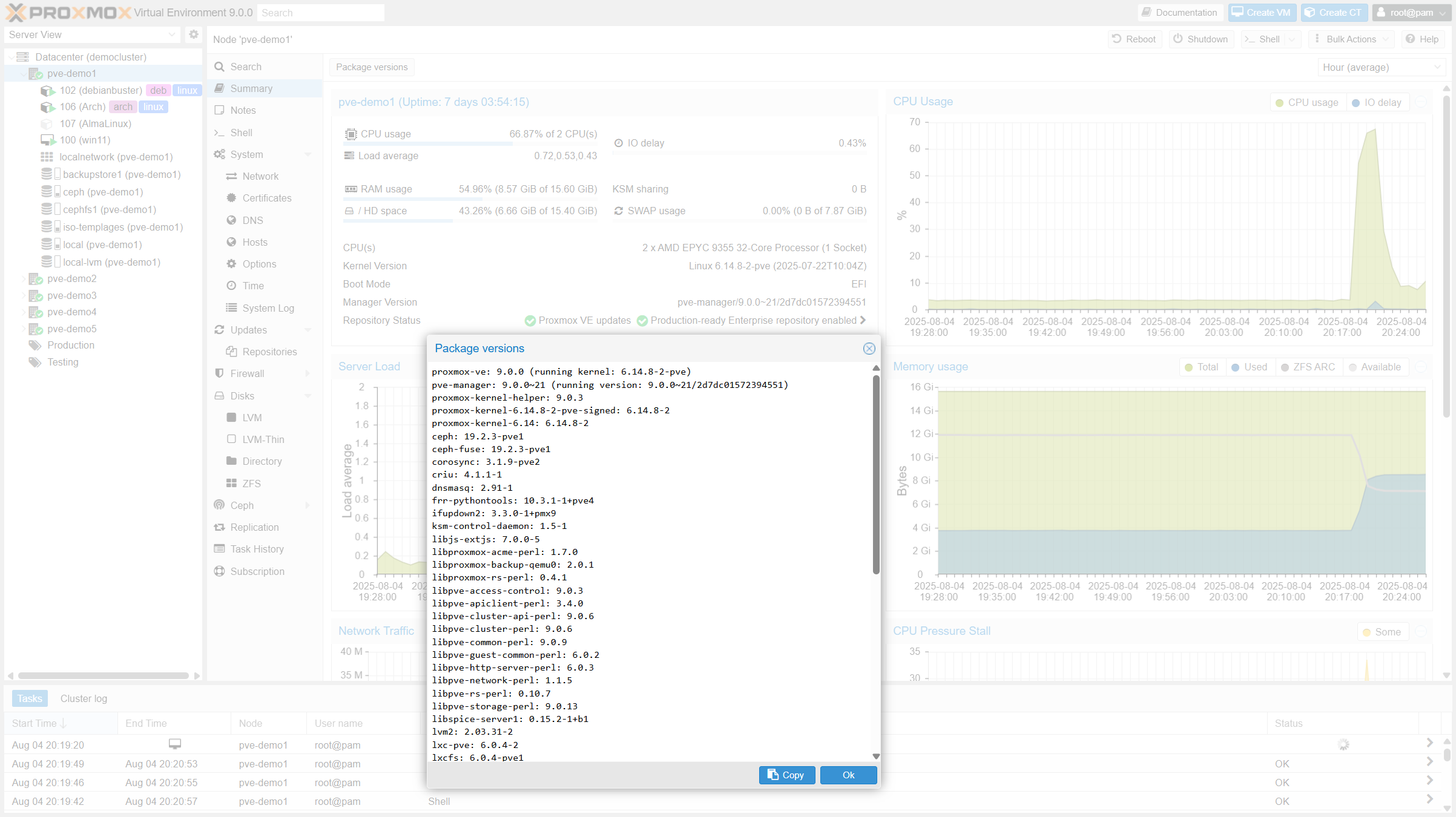

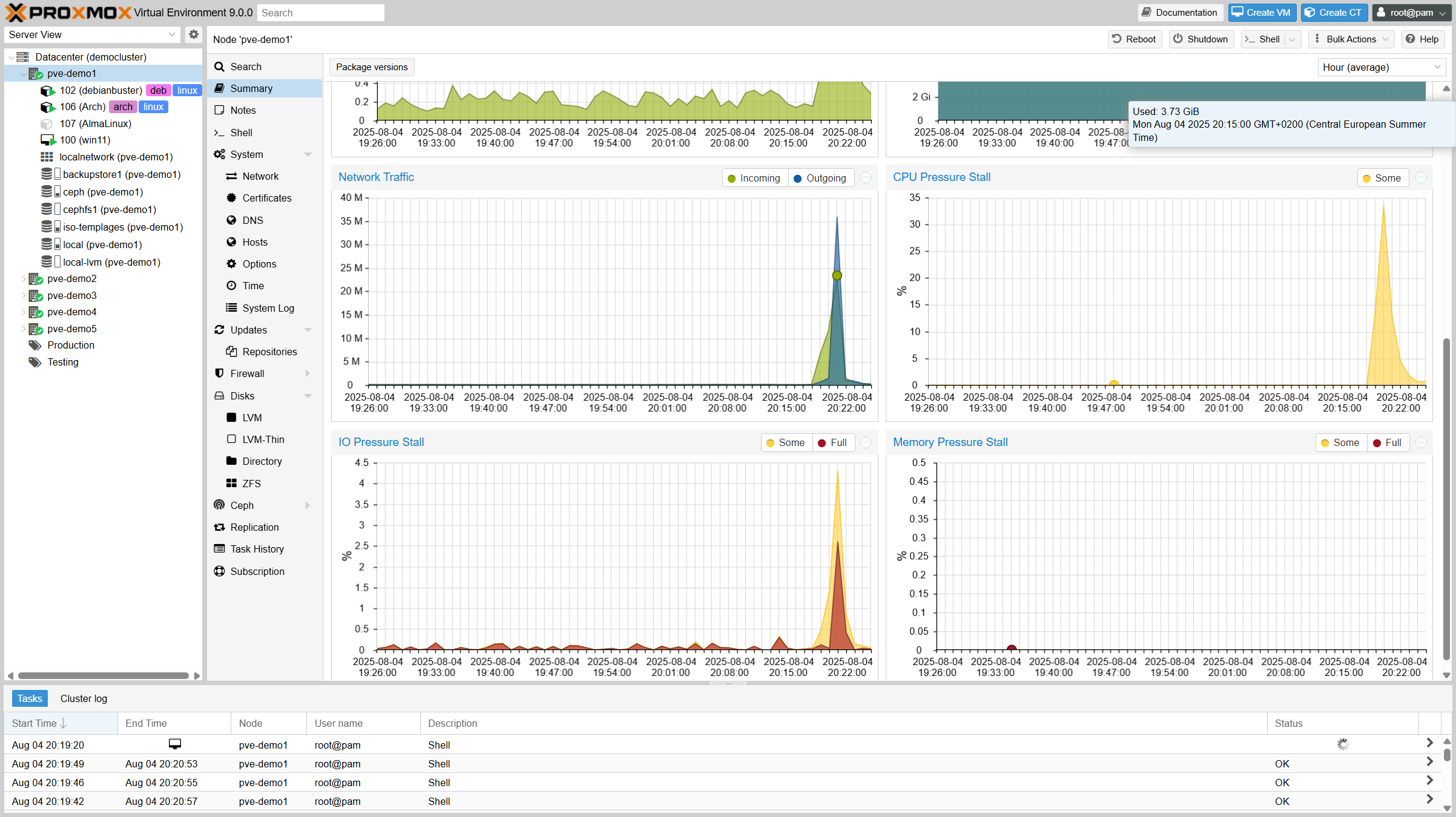

The release of Proxmox Virtual Environment 9.0 marks a significant step forward for the popular open-source virtualisation platform. With a range of improvements in performance, flexibility and user-friendliness, this version stands out from its predecessors and is increasingly geared towards the requirements of businesses.

The most important new features at a glance:

- Basis: Debian 13 "Trixie" & Linux Kernel 6.14

The foundation of Proxmox VE 9.0 is the new Debian 13 "Trixie". In combination with the latest Linux kernel 6.14, users benefit from improved hardware compatibility, increased security and overall better performance.

- VM snapshots for LVM shared storage

One of the most anticipated features is native snapshot support for shared LVM storage. This is particularly important for environments that use iSCSI or Fibre Channel SANs, as snapshots can now be created as so-called "volume chains". This enables flexible, hardware-independent backup solutions. The snapshot functionality is based on QCow2 and is also available for other storage types.

- SDN stack with "fabrics"

The software-defined networking (SDN) stack has been expanded to include the new concept of "fabrics". This makes it easier to create and manage complex, fault-tolerant and scalable network topologies. The new version also supports the OpenFabric and OSPF routing protocols, which simplifies the setup of EVPN networks.

- High availability (HA) with affinity rules

New affinity rules make managing high-availability clusters more flexible. Administrators can now define whether VMs or containers should remain on the same node (positive affinity) or be distributed across different nodes (negative affinity) to increase reliability. However, for behaviour familiar from VMware DRS, we still recommend ProxLB as the tool of choice.

- Modern and responsive mobile user interface

The mobile web interface has been completely redesigned in Rust using the Yew framework. It offers significantly improved usability, faster loading times and allows basic maintenance tasks to be performed on the go.

- Improvements to ZFS

There is also good news for ZFS users: version 9.0 allows new disks to be added to existing RAIDZ pools with minimal downtime.

- Expansion of metrics

In the new version, the host metrics have been revised and expanded.
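The positive and negative affinity rules described above can be pictured as constraints on how guests are placed on nodes. The following Python sketch checks whether a given placement satisfies such rules. It is a simplified model for illustration only, not the Proxmox VE HA manager or its rule syntax; the class, function, guest and node names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class AffinityRule:
    guests: FrozenSet[str]  # VM/container IDs covered by the rule
    positive: bool          # True: keep together; False: keep apart


def violations(placement: Dict[str, str], rules: List[AffinityRule]) -> List[AffinityRule]:
    """Return the rules violated by a placement mapping guest -> node.

    Guests missing from the placement (e.g. stopped ones) are ignored.
    """
    broken = []
    for rule in rules:
        nodes = [placement[g] for g in rule.guests if g in placement]
        if rule.positive and len(set(nodes)) > 1:
            broken.append(rule)  # guests that should share a node are split up
        elif not rule.positive and len(set(nodes)) < len(nodes):
            broken.append(rule)  # at least two guests share a node
    return broken


if __name__ == "__main__":
    placement = {"vm100": "node1", "vm101": "node1", "vm200": "node1", "vm201": "node2"}
    rules = [
        AffinityRule(frozenset({"vm100", "vm101"}), positive=True),   # keep together
        AffinityRule(frozenset({"vm200", "vm201"}), positive=False),  # keep apart
    ]
    print(violations(placement, rules))  # → []
```

A real HA manager also has to decide where to move guests when a node fails; this sketch only shows the constraint that such a decision must respect.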

In summary, Proxmox VE 9.0 is a solid evolution of the platform. Its new features and modern foundation make it an even more powerful and reliable solution for businesses and home users who value open source technology.

Further information on upgrading to the new version can also be found here: https://pve.proxmox.com/wiki/Upgrade_from_8_to_9

Our team will also be happy to assist you with upgrading your nodes.

credativ is an authorised reseller for Proxmox VE. In particular, we can help you with the design, setup and operation of automated cluster environments. Migration from existing commercial solutions is high on our list of priorities. We also offer our customers 24/7 support for Proxmox VE.

The two screenshots and the Proxmox logo are from https://www.proxmox.com/de/ueber-uns/details-unternehmen/medienkit.

Introduction

Last Saturday, August 9, the Debian project released the latest version of the Linux distribution “Debian”. Version 13, codenamed “Trixie”, succeeds “Bookworm” and contains many new features and improvements. Since some credativ employees are also active members of the Debian project, the new release is naturally a special reason for us to take a look at some of its highlights.

On Thursday, 26 June and Friday, 27 June 2025, my colleague Patrick Lauer and I had the amazing opportunity to attend Swiss PGDay 2025, held at the OST Eastern Switzerland University of Applied Sciences in Rapperswil. This two-day PostgreSQL conference featured two parallel tracks of presentations in English and German, bringing together users and experts primarily from across Switzerland. Our company, credativ, was among the supporters of this year’s conference.

During the event, Patrick delivered an engaging session titled “Postgres with many data: To MAXINT and beyond,” which built on past discussions about massive-scale Postgres usage. He highlighted the practical issues that arise when handling extremely large datasets in PostgreSQL – for instance, how even a simple SELECT COUNT(*) can become painfully slow, and how backups and restores can take days on very large datasets. He also shared strategies to manage performance effectively at these scales.

I presented a significantly updated version of my talk, “Building a Data Lakehouse with PostgreSQL: Dive into Formats, Tools, Techniques, and Strategies.” It covered modern data formats and frameworks such as Apache Iceberg, addressing key challenges in lakehouse architectures – from governance, privacy, and compliance, to data quality checks and AI/ML use cases. The talk emphasized PostgreSQL’s capability to play a central role in today’s data lakehouse and AI landscape. At the close of the conference, I delivered a brief lightning talk showcasing our new open-source migration tool, “credativ-pg-migrator.”

(c) photos by Gülçin Yıldırım Jelinek

The conference schedule was packed with many high-quality, insightful talks. We would particularly like to highlight:

* Bruce Momjian – “How Open Source and Democracy Drive Postgres”: In his keynote, Bruce Momjian outlined how PostgreSQL’s open-source development model and democratic governance have powered its success. He explained the differences between open-source and proprietary models, reviewed PostgreSQL’s governance history, and illustrated how democratic, open processes result in robust software and a promising future for Postgres.

* Gülçin Yıldırım Jelinek – “Anatomy of Table-Level Locks in PostgreSQL”: The session covered the fundamentals of PostgreSQL’s table-level locking mechanisms. It explained how different lock modes are acquired and queued during schema changes, helping attendees understand how to manage lock conflicts, minimize downtime, and avoid deadlocks during high-concurrency DDL operations.

* Aarno Aukia – “Operating PostgreSQL at Scale: Lessons from Hundreds of Instances in Regulated Private Clouds”: the speaker shared lessons from running extensive Postgres environments in highly regulated industries. He discussed architectural patterns, automation strategies, and “day-2 operations” practices that VSHN uses to meet stringent availability, compliance, and audit requirements, including secure multi-tenancy, declarative deployments, backups, monitoring, and lifecycle management in mission-critical cloud-native setups.

* Bertrand Hartwig-Peillon – “pgAssistant”: The author introduced pgAssistant, an open-source tool designed to help developers optimize PostgreSQL schemas and queries before production deployment. He demonstrated how pgAssistant combines deterministic analysis with an AI-driven approach to detect schema inconsistencies and suggest optimizations, effectively automating best practices and performance tuning within development workflows.

* Gianni Ciolli – “The Why and What of WAL”: In great Italian style, Gianni Ciolli provided a concise history and overview of PostgreSQL’s Write-Ahead Log (WAL). He explained WAL’s central role in PostgreSQL for crash safety, backups, and replication, showcasing examples of WAL-enabled features like fast crash recovery, efficient hot backups, physical replication, and logical decoding.

* Daniel Krefl – “Hacking pgvector for performance”: The speaker presented an enhanced version of the pgvector extension for massive data processing, optimized by maintaining the vector index outside PostgreSQL memory and offloading computations, including GPU integration. He detailed the process of moving pgvector’s core logic externally for improved speed, demonstrating notable performance gains in the context of the EU AERO project. He also talked about the distributed PostgreSQL variants Postgres-XC, Postgres-XL and TBase, which are unfortunately stuck on the old PostgreSQL version 10, and how he ported changes from these projects to version 16.

* Luigi Nardi – “A benchmark study on the impact of PostgreSQL server parameter tuning”: Luigi Nardi presented comprehensive benchmark results on tuning PostgreSQL configuration parameters. Highlighting that many users keep the default settings, he demonstrated how proper tuning can achieve significant performance improvements across various workloads (OLTP, OLAP, etc.), providing actionable insights tailored to specific environments.

* Renzo Dani – “From Oracle to PostgreSQL: A HARD Journey and an Open-Source Awakening”: The author recounted his experiences migrating a complex enterprise application from Oracle to PostgreSQL, addressing significant challenges such as implicit type casting, function overloading differences, JDBC driver issues, and SQL validation problems. He also highlighted the benefits, including faster CI pipelines, more flexible deployments, and innovation opportunities provided by open-source Postgres, along with practical advice on migration tools, testing strategies, and managing trade-offs.
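The lock conflicts discussed in the table-level locking talk can be made concrete with the conflict table for table-level lock modes documented in the PostgreSQL manual. The following Python sketch encodes that table and adds a deliberately simplified queueing rule: a new request waits if it conflicts with a granted lock or with a request already queued ahead of it. It ignores many details of PostgreSQL's real lock manager, and the function names are hypothetical.

```python
from typing import Dict, FrozenSet, Iterable

MODES = [
    "ACCESS SHARE", "ROW SHARE", "ROW EXCLUSIVE", "SHARE UPDATE EXCLUSIVE",
    "SHARE", "SHARE ROW EXCLUSIVE", "EXCLUSIVE", "ACCESS EXCLUSIVE",
]

# Which modes each table-level lock mode conflicts with (symmetric relation).
CONFLICTS: Dict[str, FrozenSet[str]] = {
    "ACCESS SHARE": frozenset({"ACCESS EXCLUSIVE"}),
    "ROW SHARE": frozenset({"EXCLUSIVE", "ACCESS EXCLUSIVE"}),
    "ROW EXCLUSIVE": frozenset(
        {"SHARE", "SHARE ROW EXCLUSIVE", "EXCLUSIVE", "ACCESS EXCLUSIVE"}),
    "SHARE UPDATE EXCLUSIVE": frozenset(
        {"SHARE UPDATE EXCLUSIVE", "SHARE", "SHARE ROW EXCLUSIVE",
         "EXCLUSIVE", "ACCESS EXCLUSIVE"}),
    "SHARE": frozenset(
        {"ROW EXCLUSIVE", "SHARE UPDATE EXCLUSIVE", "SHARE ROW EXCLUSIVE",
         "EXCLUSIVE", "ACCESS EXCLUSIVE"}),
    "SHARE ROW EXCLUSIVE": frozenset(
        {"ROW EXCLUSIVE", "SHARE UPDATE EXCLUSIVE", "SHARE",
         "SHARE ROW EXCLUSIVE", "EXCLUSIVE", "ACCESS EXCLUSIVE"}),
    "EXCLUSIVE": frozenset(set(MODES) - {"ACCESS SHARE"}),
    "ACCESS EXCLUSIVE": frozenset(MODES),
}


def conflicts(a: str, b: str) -> bool:
    """True if lock modes a and b cannot be held at the same time."""
    return b in CONFLICTS[a]


def must_wait(requested: str, granted: Iterable[str], waiting_ahead: Iterable[str]) -> bool:
    """Simplified model: wait on a conflict with a granted lock or an earlier waiter."""
    return (any(conflicts(requested, m) for m in granted)
            or any(conflicts(requested, m) for m in waiting_ahead))


if __name__ == "__main__":
    # A long-running SELECT holds ACCESS SHARE; ALTER TABLE wants ACCESS EXCLUSIVE.
    print(must_wait("ACCESS EXCLUSIVE", ["ACCESS SHARE"], []))  # → True
    # A new SELECT now queues behind the waiting ALTER TABLE.
    print(must_wait("ACCESS SHARE", ["ACCESS SHARE"], ["ACCESS EXCLUSIVE"]))  # → True
```

The second check shows the classic pitfall during schema changes: while an `ALTER TABLE` (many of its forms take ACCESS EXCLUSIVE) waits behind a long-running query, even plain `SELECT`s queue up behind it.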

(c) photo by Swiss PostgreSQL User Group

At the end of the first day, all participants enjoyed a networking dinner. We both want to sincerely thank the Swiss PGDay organizers (Swiss PostgreSQL User Group) for an amazing event. Swiss PGDay 2025 was a memorable and valuable experience, offering great learning and networking opportunities. We are also very grateful to credativ for enabling our participation, and we look forward to future editions of this excellent conference.

Effective March 1, 2025, the Mönchengladbach-based open-source specialist credativ IT Services GmbH will once again operate as an independent company in the market. In May 2022, credativ GmbH was acquired by NetApp and integrated into NetApp Deutschland GmbH on February 1, 2023. This step allowed the company to draw on extensive experience and a broader resource base. However, after intensive collaboration within the storage and cloud group, it has become clear that credativ, through its regained independence, can offer the best conditions to address customer needs even more effectively. The transition is supported by all 46 employees.

“We have decided to take this step to focus on our core business areas and create the best possible conditions for further growth. For our customers, this means maximum flexibility. We thank NetApp Management for this exceptional opportunity,” says David Brauner, Managing Director of credativ IT Services GmbH.

“The change is a testament to the confidence we have in the credativ team and their ability to lead the business towards a prosperous future,” commented Begoña Jara, Vice President of NetApp Deutschland GmbH.

What Does This Change Mean for credativ’s Customers?

As a medium-sized company, the open-source service provider can rely on even closer collaboration and more direct communication with its customers. An agile structure is intended to enable faster and more individualized decisions, thus allowing for more flexible responses to requests and requirements. Naturally, collaboration with the various NetApp teams and their partner organizations will continue as before.

Since 1999, credativ has been active in the open-source sector as a service provider with a strong focus on IT infrastructure, virtualization, and cloud technologies. credativ also has a strong team focused on open-source-based databases such as PostgreSQL and related technologies. In the coming weeks, the new company will be renamed credativ GmbH.

Update:

On March 19, 2025, credativ IT Services GmbH was renamed credativ GmbH. The commercial register entry HRB 23003 remains valid. However, we kindly ask all customers not to use any bank details of the former credativ GmbH that may still be stored. That company has also been renamed by its owners and has no direct relationship with the new credativ GmbH.