Efficient Proxmox Cluster Deployment through Automation with Ansible

Manually setting up and managing servers is usually time-consuming, error-prone, and difficult to scale. This becomes especially evident during large-scale rollouts, when building complex infrastructures, or during the migration from other virtualization environments. In such cases, traditional manual processes quickly reach their limits. Consistent automation offers an effective and sustainable solution to these challenges.

To enable fully automated deployment of Proxmox clusters, our team member, known in the open-source community under the alias gyptazy, has developed a dedicated Ansible module called proxmox_cluster. This module handles all the steps necessary to initialize a Proxmox cluster and to add further nodes to it. It has been officially included in the upstream Ansible Community Proxmox collection (community.proxmox) and can be installed via Ansible Galaxy starting with version 1.1.0 of the collection. As a result, the manual effort required for cluster deployment is significantly reduced. Further insights can be found in his blog post “How My BoxyBSD Project Boosted the Proxmox Ecosystem”.

By adopting this solution, not only can valuable time be saved, but a solid foundation for scalable and low-maintenance infrastructure is also established. Unlike fragile task-based approaches that often rely on Ansible’s shell or command modules, this solution leverages the full potential of the Proxmox API through a dedicated module. As a result, it can be executed in various scopes and does not require SSH access to the target systems.

This automated approach makes it possible to deploy complex setups efficiently while laying the groundwork for stable and future-proof IT environments. Such environments can be extended at a later stage and are built according to a consistent and repeatable structure.

Benefits

Using the proxmox_cluster module for Proxmox cluster deployment brings several key advantages to modern IT environments. The focus lies on secure, flexible, and scalable interaction with the Proxmox API, improved error handling, and simplified integration across various use cases:

- Use of the native Proxmox API
- Full support for the Proxmox authentication system
- API token authentication support
- No SSH access required
- Usable in multiple scopes:
  - From a dedicated deployment host
  - From a local system
  - Within the context of the target system itself
- Improved error handling through API abstraction

Ansible Proxmox Module: proxmox_cluster

The newly added proxmox_cluster module in Ansible significantly simplifies the automated provisioning of Proxmox VE clusters. With just a single task, it enables the seamless creation of a complete cluster, reducing complexity and manual effort to a minimum.

Creating a Cluster

Creating a cluster now requires only a single task in Ansible using the proxmox_cluster module:

- name: Create a Proxmox VE Cluster
  community.proxmox.proxmox_cluster:
    state: present
    api_host: proxmoxhost
    api_user: root@pam
    api_password: password123
    api_ssl_verify: false
    link0: 10.10.1.1
    link1: 10.10.2.1
    cluster_name: "devcluster"

Once this task has run, the cluster exists and additional Proxmox VE nodes can join it.

Joining a Cluster

Additional nodes can now also join the cluster using a single task. When combined with the use of a dynamic inventory, it becomes easy to iterate over a list of nodes from a defined group and add them to the cluster within a loop. This approach enables the rapid deployment of larger Proxmox clusters in an efficient and scalable manner.

- name: Join a Proxmox VE Cluster
  community.proxmox.proxmox_cluster:
    state: present
    api_host: proxmoxhost
    api_user: root@pam
    api_password: password123
    master_ip: "{{ primary_node }}"
    fingerprint: "{{ cluster_fingerprint }}"
    cluster_name: "devcluster"

Cluster Join Information

In order for a node to join a Proxmox cluster, the cluster’s join information is generally required. To avoid defining this information manually for each individual cluster, this step can be automated as well. For this purpose, a new module called proxmox_cluster_join_info has been introduced. It retrieves the necessary data via the Proxmox API and makes it available for further use in the automation process.

- name: List existing Proxmox VE cluster join information
  community.proxmox.proxmox_cluster_join_info:
    api_host: proxmox1
    api_user: root@pam
    api_password: "{{ password | default(omit) }}"
    api_token_id: "{{ token_id | default(omit) }}"
    api_token_secret: "{{ token_secret | default(omit) }}"
  register: proxmox_cluster_join

Conclusion

While automation in the context of virtualization technologies often focuses on provisioning guest systems or virtual machines (VMs), this approach demonstrates that automation can be applied at a much deeper level of the underlying infrastructure. It is even possible to fully automate scenarios in which nodes are first deployed from a customer-specific image with Proxmox VE preinstalled and the cluster is then created automatically.

As an official Proxmox partner, we are happy to support you in implementing a comprehensive automation strategy tailored to your environment and based on Proxmox products. You can contact us at any time!

Introduction

Puppet is a software configuration management solution to manage IT infrastructure. One of the first things to be learnt about Puppet is its domain-specific language – the Puppet-DSL – and the concepts that come with it.

Users can organize their code in classes and modules and use pre-defined resource types to manage resources like files, packages, services and others.

The most commonly used types are part of the Puppet core and implemented in Ruby. Composite resource types can be defined in the Puppet-DSL from already known types by Puppet users themselves, or imported as part of an external Puppet module maintained by external module developers.

In practice, Puppet users can stay within the Puppet-DSL for a long time, even when they work with Puppet on a regular basis.

The first time I had a glimpse into this topic was when Debian Stable was shipping Puppet 5.5, which was not too long ago. The Puppet 5.5 documentation includes chapters on custom type and custom provider development, but to me they felt incomplete and lacked self-contained examples. Apparently I was not the only one who felt that way, even though Puppet’s gem documentation gives a good overview of what is possible in principle.

Gary Larizza’s blog post on the topic is more than ten years old. Recently, I had another look at the documentation on that topic for Puppet 7, as this is the Puppet version in the current Debian Stable.

The Puppet 5.5 approach to type and provider development is now called the low-level method, and its documentation has not changed significantly. However, from Puppet 6 onwards, a new method to create custom types and providers is recommended: the so-called Resource-API, whose documentation is a major improvement over that of the low-level method. The Resource-API is not a full replacement, though, and has several documented limitations.

Nevertheless, for the remainder of this blog post, we will re-prototype a small portion of the built-in file type’s functionality, namely the ensure and content properties, using both the low-level method and the Resource-API.

Preparations

The following preparations are not necessary in an agent-server setup. For this demo, we use Bundler to obtain a puppet executable.

demo@85c63b50bfa3:~$ cat > Gemfile <<EOF
source 'https://rubygems.org'
gem 'puppet', '>= 6'
EOF
demo@85c63b50bfa3:~$ bundle install
Fetching gem metadata from https://rubygems.org/........
Resolving dependencies...
...
Installing puppet 8.10.0
Bundle complete! 1 Gemfile dependency, 17 gems now installed.
Bundled gems are installed into `./.vendor`

demo@85c63b50bfa3:~$ cat > file_builtin.pp <<'EOF'

$file = '/home/demo/puppet-file-builtin'

file {$file: content => 'This is madness'}

EOF

demo@85c63b50bfa3:~$ bin/puppet apply file_builtin.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.01 seconds

Notice: /Stage[main]/Main/File[/home/demo/puppet-file-builtin]/ensure: defined content as '{sha256}0549defd0a7d6d840e3a69b82566505924cacbe2a79392970ec28cddc763949e'

Notice: Applied catalog in 0.04 seconds

demo@85c63b50bfa3:~$ sha256sum /home/demo/puppet-file-builtin

0549defd0a7d6d840e3a69b82566505924cacbe2a79392970ec28cddc763949e /home/demo/puppet-file-builtin

Take note of the state change information printed by Puppet: it reports a SHA256 checksum of the new content rather than the content itself.

Low-level prototype

Custom types and providers that are not installed via a Gem need to be part of some Puppet module, so they can be copied to Puppet agents via the pluginsync mechanism.

A common location for Puppet modules is the modules directory inside a Puppet environment. For this demo, we create a module named demo.
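Puppet’s autoloader looks for a type in lib/puppet/type/<type>.rb and for its providers in lib/puppet/provider/<type>/<provider>.rb inside a module, which is where the files of this demo go. As a small convenience, the layout could be scaffolded with plain Ruby; scaffold_type is a hypothetical helper, not part of Puppet.

```ruby
require 'fileutils'
require 'tmpdir'

# Hypothetical helper (not part of Puppet): create the directories in
# which Puppet's autoloader looks for a custom type and one of its
# providers, and return the paths of the two Ruby files to write.
def scaffold_type(modulepath, module_name, type_name, provider_name)
  lib = File.join(modulepath, module_name, 'lib', 'puppet')
  type_file = File.join(lib, 'type', "#{type_name}.rb")
  provider_file = File.join(lib, 'provider', type_name, "#{provider_name}.rb")
  [type_file, provider_file].each { |file| FileUtils.mkdir_p(File.dirname(file)) }
  { type: type_file, provider: provider_file }
end

Dir.mktmpdir do |dir|
  paths = scaffold_type(File.join(dir, 'modules'), 'demo', 'file_llmethod', 'ruby')
  puts paths[:type]     # ends with modules/demo/lib/puppet/type/file_llmethod.rb
  puts paths[:provider] # ends with modules/demo/lib/puppet/provider/file_llmethod/ruby.rb
end
```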

Basic functionality

Our first attempt is the following type definition for a new type we will call file_llmethod. It has no documentation or validation of input values.

# modules/demo/lib/puppet/type/file_llmethod.rb
Puppet::Type.newtype(:file_llmethod) do
  newparam(:path, namevar: true) {}
  newproperty(:content) {}
  newproperty(:ensure) do
    newvalues(:present, :absent)
    defaultto(:present)
  end
end

We have declared a path parameter that serves as the namevar for this type – there cannot be other file_llmethod instances managing the same path. The ensure property is restricted to two values and defaults to present.

The following provider implementation consists of a getter and a setter for each of the two properties content and ensure.

# modules/demo/lib/puppet/provider/file_llmethod/ruby.rb
Puppet::Type.type(:file_llmethod).provide(:ruby) do
  def ensure
    File.exist?(@resource[:path]) ? :present : :absent
  end
  def ensure=(value)
    if value == :present
      # reuse setter
      self.content=(@resource[:content])
    else
      File.unlink(@resource[:path])
    end
  end
  def content
    File.read(@resource[:path])
  end
  def content=(value)
    File.write(@resource[:path], value)
  end
end

This gives us the following:

demo@85c63b50bfa3:~$ cat > file_llmethod_create.pp <<'EOF'

$file = '/home/demo/puppet-file-lowlevel-method-create'

file {$file: ensure => absent} ->

file_llmethod {$file: content => 'This is Sparta!'}

EOF

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_create.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.01 seconds

Notice: /Stage[main]/Main/File_llmethod[/home/demo/puppet-file-lowlevel-method-create]/ensure: defined 'ensure' as 'present'

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_create.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.01 seconds

Notice: /Stage[main]/Main/File[/home/demo/puppet-file-lowlevel-method-create]/ensure: removed

Notice: /Stage[main]/Main/File_llmethod[/home/demo/puppet-file-lowlevel-method-create]/ensure: defined 'ensure' as 'present'

demo@85c63b50bfa3:~$ cat > file_llmethod_change.pp <<'EOF'

$file = '/home/demo/puppet-file-lowlevel-method-change'

file {$file: content => 'This is madness'} ->

file_llmethod {$file: content => 'This is Sparta!'}

EOF

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_change.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds

Notice: /Stage[main]/Main/File[/home/demo/puppet-file-lowlevel-method-change]/ensure: defined content as '{sha256}0549defd0a7d6d840e3a69b82566505924cacbe2a79392970ec28cddc763949e'

Notice: /Stage[main]/Main/File_llmethod[/home/demo/puppet-file-lowlevel-method-change]/content: content changed 'This is madness' to 'This is Sparta!'

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_change.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds

Notice: /Stage[main]/Main/File[/home/demo/puppet-file-lowlevel-method-change]/content: content changed '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2' to '{sha256}0549defd0a7d6d840e3a69b82566505924cacbe2a79392970ec28cddc763949e'

Notice: /Stage[main]/Main/File_llmethod[/home/demo/puppet-file-lowlevel-method-change]/content: content changed 'This is madness' to 'This is Sparta!'

Our custom type already kind of works, even though we have not implemented any explicit comparison of is- and should-state. Puppet does this for us, based on the Puppet catalog and the return values of the property getters. Puppet also invokes our setters only when needed.
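To make this behaviour concrete, here is a much simplified model in plain Ruby. It is not Puppet’s implementation, and FakeProvider and sync_property are hypothetical names. It only illustrates the idea: the getter provides the is-state, and the setter runs only if the is-state differs from the should-state.

```ruby
# Simplified model of the is/should comparison Puppet performs for us.
# FakeProvider and sync_property are hypothetical, not Puppet APIs.
class FakeProvider
  attr_reader :calls

  def initialize(content)
    @content = content
    @calls = []
  end

  def content
    @calls << :get
    @content
  end

  def content=(value)
    @calls << :set
    @content = value
  end
end

# Read the is-state via the getter and call the setter only if it
# differs from the should-state. Returns true if a change was made.
def sync_property(provider, name, should)
  is = provider.public_send(name)
  return false if is == should

  provider.public_send("#{name}=", should)
  true
end

provider = FakeProvider.new('This is madness')
first = sync_property(provider, :content, 'This is Sparta!')  # setter is called
second = sync_property(provider, :content, 'This is Sparta!') # already in sync
```

The second call only reads the current value, which matches what the second puppet apply run above shows for properties that are already in sync.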

We can also see that the ensure state change notice, defined 'ensure' as 'present', does not reflect the desired content in any way, while the content state change notice shows the content in plain text. Both show that the SHA256 checksum in the output of the file_builtin.pp example is a deliberate feature of the built-in file type, not something we get for free.

Validating input

As a next step we add validation for path and content.

# modules/demo/lib/puppet/type/file_llmethod.rb
Puppet::Type.newtype(:file_llmethod) do
  newparam(:path, namevar: true) do
    validate do |value|
      fail "#{value} is not a String" unless value.is_a?(String)
      fail "#{value} is not an absolute path" unless File.absolute_path?(value)
    end
  end
  newproperty(:content) do
    validate do |value|
      fail "#{value} is not a String" unless value.is_a?(String)
    end
  end
  newproperty(:ensure) do
    newvalues(:present, :absent)
    defaultto(:present)
  end
end

Failed validations look like this:

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules --exec 'file_llmethod {"./relative/path": }'

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds

Error: Parameter path failed on File_llmethod[./relative/path]: ./relative/path is not an absolute path (line: 1)

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules --exec 'file_llmethod {"/absolute/path": content => 42}'

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds

Error: Parameter content failed on File_llmethod[/absolute/path]: 42 is not a String (line: 1)
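The same checks can be exercised without Puppet. This sketch mirrors the validate blocks above with hypothetical helper names and raises ArgumentError, whereas Puppet reports a failing validate block as shown in the errors above.

```ruby
# Plain-Ruby versions of the validate blocks above (hypothetical helpers).
def validate_path(value)
  raise ArgumentError, "#{value} is not a String" unless value.is_a?(String)
  raise ArgumentError, "#{value} is not an absolute path" unless File.absolute_path?(value)

  value
end

def validate_content(value)
  raise ArgumentError, "#{value} is not a String" unless value.is_a?(String)

  value
end

# Run a validation and return its error message, or nil if it passed.
def validation_error
  yield
  nil
rescue ArgumentError => e
  e.message
end

puts validation_error { validate_path('./relative/path') } # ./relative/path is not an absolute path
puts validation_error { validate_content(42) }              # 42 is not a String
```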

Content Checksums

We override change_to_s so that state changes include content checksums:

# modules/demo/lib/puppet/type/file_llmethod.rb
require 'digest'
Puppet::Type.newtype(:file_llmethod) do
  newparam(:path, namevar: true) do
    validate do |value|
      fail "#{value} is not a String" unless value.is_a?(String)
      fail "#{value} is not an absolute path" unless File.absolute_path?(value)
    end
  end
  newproperty(:content) do
    validate do |value|
      fail "#{value} is not a String" unless value.is_a?(String)
    end
    define_method(:change_to_s) do |currentvalue, newvalue|
      old = "{sha256}#{Digest::SHA256.hexdigest(currentvalue)}"
      new = "{sha256}#{Digest::SHA256.hexdigest(newvalue)}"
      "content changed '#{old}' to '#{new}'"
    end
  end
  newproperty(:ensure) do
    define_method(:change_to_s) do |currentvalue, newvalue|
      if currentvalue == :absent
        should = @resource.property(:content).should
        digest = "{sha256}#{Digest::SHA256.hexdigest(should)}"
        "defined content as '#{digest}'"
      else
        super(currentvalue, newvalue)
      end
    end
    newvalues(:present, :absent)
    defaultto(:present)
  end
end

The above type definition yields:

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_create.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds

Notice: /Stage[main]/Main/File[/home/demo/puppet-file-lowlevel-method-create]/ensure: removed

Notice: /Stage[main]/Main/File_llmethod[/home/demo/puppet-file-lowlevel-method-create]/ensure: defined content as '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2'

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_change.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds

Notice: /Stage[main]/Main/File[/home/demo/puppet-file-lowlevel-method-change]/content: content changed '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2' to '{sha256}0549defd0a7d6d840e3a69b82566505924cacbe2a79392970ec28cddc763949e'

Notice: /Stage[main]/Main/File_llmethod[/home/demo/puppet-file-lowlevel-method-change]/content: content changed '{sha256}0549defd0a7d6d840e3a69b82566505924cacbe2a79392970ec28cddc763949e' to '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2'
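The change_to_s override boils down to string formatting around Digest::SHA256 from Ruby’s standard library. A plain-Ruby sketch with hypothetical helper names that builds a notice in the same format as above:

```ruby
require 'digest'

# Format content as "{sha256}<hex>", like the change_to_s override above.
def sha256_label(content)
  "{sha256}#{Digest::SHA256.hexdigest(content)}"
end

# Build the same kind of notice as the overridden change_to_s.
def content_change_message(currentvalue, newvalue)
  "content changed '#{sha256_label(currentvalue)}' to '#{sha256_label(newvalue)}'"
end

puts content_change_message('This is madness', 'This is Sparta!')
```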

Improving memory footprint

So far so good. While our current implementation apparently works, it has at least one major flaw. If the managed file already exists, the provider stores the file’s whole content in memory.

demo@85c63b50bfa3:~$ cat > file_llmethod_change_big.pp <<'EOF'

$file = '/home/demo/puppet-file-lowlevel-method-change_big'

file_llmethod {$file: content => 'This is Sparta!'}

EOF

demo@85c63b50bfa3:~$ rm -f /home/demo/puppet-file-lowlevel-method-change_big

demo@85c63b50bfa3:~$ ulimit -Sv 200000

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_change_big.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds

Notice: /Stage[main]/Main/File_llmethod[/home/demo/puppet-file-lowlevel-method-change_big]/ensure: defined content as '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2'

Notice: Applied catalog in 0.02 seconds

demo@85c63b50bfa3:~$ dd if=/dev/zero of=/home/demo/puppet-file-lowlevel-method-change_big seek=8G bs=1 count=1

1+0 records in

1+0 records out

1 byte copied, 8.3047e-05 s, 12.0 kB/s

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_change_big.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds

Error: Could not run: failed to allocate memory

Instead, the implementation should only store a checksum, so that Puppet can decide based on checksums whether our content= setter needs to be invoked.

This also means that the content from the Puppet catalog needs to be checksummed by munge before it is processed by Puppet’s internal comparison routine. Luckily, we still have access to the original value via shouldorig.

# modules/demo/lib/puppet/type/file_llmethod.rb
require 'digest'
Puppet::Type.newtype(:file_llmethod) do
  newparam(:path, namevar: true) do
    validate do |value|
      fail "#{value} is not a String" unless value.is_a?(String)
      fail "#{value} is not an absolute path" unless File.absolute_path?(value)
    end
  end
  newproperty(:content) do
    validate do |value|
      fail "#{value} is not a String" unless value.is_a?(String)
    end
    munge do |value|
      "{sha256}#{Digest::SHA256.hexdigest(value)}"
    end
    # No need to override change_to_s with munging
  end
  newproperty(:ensure) do
    define_method(:change_to_s) do |currentvalue, newvalue|
      if currentvalue == :absent
        should = @resource.property(:content).should
        "defined content as '#{should}'"
      else
        super(currentvalue, newvalue)
      end
    end
    newvalues(:present, :absent)
    defaultto(:present)
  end
end

# modules/demo/lib/puppet/provider/file_llmethod/ruby.rb
Puppet::Type.type(:file_llmethod).provide(:ruby) do
  ...
  def content
    File.open(@resource[:path], 'r') do |file|
      sha = Digest::SHA256.new
      while (chunk = file.read(2**16))
        sha << chunk
      end
      "{sha256}#{sha.hexdigest}"
    end
  end
  def content=(value)
    # value is munged, but we need to write the original
    File.write(@resource[:path], @resource.parameter(:content).shouldorig[0])
  end
end

Now we can manage big files:

demo@85c63b50bfa3:~$ ulimit -Sv 200000

demo@85c63b50bfa3:~$ dd if=/dev/zero of=/home/demo/puppet-file-lowlevel-method-change_big seek=8G bs=1 count=1

1+0 records in

1+0 records out

1 byte copied, 9.596e-05 s, 10.4 kB/s

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_change_big.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds

Notice: /Stage[main]/Main/File_llmethod[/home/demo/puppet-file-lowlevel-method-change_big]/content: content changed '{sha256}ef17a425c57a0e21d14bec2001d8fa762767b97145b9fe47c5d4f2fda323697b' to '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2'
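The key difference to the first provider is that only one 64 KiB chunk is held in memory at a time. A standalone sketch of the chunked digest (streaming_sha256 is a hypothetical name), checked against Digest::SHA256.file from Ruby’s standard library, which also reads the file incrementally:

```ruby
require 'digest'
require 'tempfile'

CHUNK_SIZE = 2**16 # 64 KiB per read, as in the provider's content getter

# Return "{sha256}<hex>" for a file while holding at most one chunk
# of its content in memory.
def streaming_sha256(path)
  sha = Digest::SHA256.new
  File.open(path, 'rb') do |file|
    while (chunk = file.read(CHUNK_SIZE))
      sha << chunk
    end
  end
  "{sha256}#{sha.hexdigest}"
end

Tempfile.create('big') do |file|
  file.write('x' * (3 * CHUNK_SIZE + 1)) # content spanning four chunks
  file.flush
  streamed = streaming_sha256(file.path)
  puts streamed == "{sha256}#{Digest::SHA256.file(file.path).hexdigest}" # true
end
```

The sketch opens the file in binary mode ('rb'). Since IO#read with a length argument returns raw bytes anyway, the provider’s 'r' should behave the same on Linux.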

Ensure it the Puppet way

There is still something not right. Maybe you have noticed that our provider’s content getter attempts to open a file unconditionally, and yet the file_llmethod_create.pp run has not produced an error. It seems that an ensure transition from absent to present short-circuits the content getter, even though we have not expressed a wish to do so.

It turns out that an ensure property gets special treatment by Puppet. If we had attempted to use a makeitso property instead of ensure, there would be no short-circuiting and the content getter would raise an exception.
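Our reading of Puppet::Type#retrieve is that the ensure property is retrieved first and, if it reports :absent, all other properties are recorded as :absent without calling their getters. A simplified plain-Ruby model of that behaviour follows; AbsentFileProvider and retrieve_state are hypothetical names, and a property called makeitso would get no such treatment.

```ruby
# Simplified model of ensure short-circuiting the other getters during
# retrieval. AbsentFileProvider and retrieve_state are hypothetical names.
class AbsentFileProvider
  def ensure
    :absent
  end

  # Would fail for a missing file, just like File.open in our provider.
  def content
    raise Errno::ENOENT, 'content getter called for an absent file'
  end
end

def retrieve_state(provider, property_names)
  state = { ensure: provider.ensure }
  property_names.each do |name|
    next if name == :ensure

    # An absent resource reports :absent for every other property.
    state[name] = state[:ensure] == :absent ? :absent : provider.public_send(name)
  end
  state
end

state = retrieve_state(AbsentFileProvider.new, %i[ensure content])
puts state[:content] # prints "absent"; the content getter was never called
```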

We will not fix the content getter though. If Puppet has special treatment for ensure, we should use Puppet’s intended mechanism for it, and declare the type ensurable:

# modules/demo/lib/puppet/type/file_llmethod.rb
require 'digest'
Puppet::Type.newtype(:file_llmethod) do
  ensurable
  newparam(:path, namevar: true) do
    validate do |value|
      fail "#{value} is not a String" unless value.is_a?(String)
      fail "#{value} is not an absolute path" unless File.absolute_path?(value)
    end
  end
  newproperty(:content) do
    validate do |value|
      fail "#{value} is not a String" unless value.is_a?(String)
    end
    munge do |value|
      "{sha256}#{Digest::SHA256.hexdigest(value)}"
    end
  end
end

With ensurable, the provider needs to implement three new methods, exists?, create and destroy, but we can drop the ensure accessors:

# modules/demo/lib/puppet/provider/file_llmethod/ruby.rb
Puppet::Type.type(:file_llmethod).provide(:ruby) do
  def exists?
    File.exist?(@resource[:name])
  end
  def create
    self.content=(:dummy)
  end
  def destroy
    File.unlink(@resource[:name])
  end
  def content
    File.open(@resource[:path], 'r') do |file|
      sha = Digest::SHA256.new
      while (chunk = file.read(2**16))
        sha << chunk
      end
      "{sha256}#{sha.hexdigest}"
    end
  end
  def content=(value)
    # value is munged, but we need to write the original
    File.write(@resource[:path], @resource.parameter(:content).shouldorig[0])
  end
end
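Roughly speaking, ensurable derives the current ensure value from exists? and maps the desired values present and absent to create and destroy. The following model only illustrates that contract; RecordingProvider and sync_ensure are hypothetical names, not Puppet code.

```ruby
# Hypothetical model of the ensurable contract (not Puppet's code):
# retrieve -> exists?, sync to :present -> create, sync to :absent -> destroy.
class RecordingProvider
  attr_reader :calls

  def initialize(exists)
    @exists = exists
    @calls = []
  end

  def exists?
    @calls << :exists?
    @exists
  end

  def create
    @calls << :create
    @exists = true
  end

  def destroy
    @calls << :destroy
    @exists = false
  end
end

# Bring the resource to the desired ensure value and return that value.
def sync_ensure(provider, should)
  is = provider.exists? ? :present : :absent
  return is if is == should

  should == :present ? provider.create : provider.destroy
  should
end

recorder = RecordingProvider.new(false)
sync_ensure(recorder, :present) # calls create
sync_ensure(recorder, :present) # already present, only exists? is called
```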

However, now we have lost the SHA256 checksum on file creation:

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_create.pp
Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds
Notice: /Stage[main]/Main/File[/home/demo/puppet-file-lowlevel-method-create]/ensure: removed
Notice: /Stage[main]/Main/File_llmethod[/home/demo/puppet-file-lowlevel-method-create]/ensure: created

To get it back, we replace ensurable with an adapted implementation of it that includes our previous change_to_s override:

newproperty(:ensure, :parent => Puppet::Property::Ensure) do
  defaultvalues
  define_method(:change_to_s) do |currentvalue, newvalue|
    if currentvalue == :absent
      should = @resource.property(:content).should
      "defined content as '#{should}'"
    else
      super(currentvalue, newvalue)
    end
  end
end

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_llmethod_create.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.02 seconds

Notice: /Stage[main]/Main/File[/home/demo/puppet-file-lowlevel-method-create]/ensure: removed

Notice: /Stage[main]/Main/File_llmethod[/home/demo/puppet-file-lowlevel-method-create]/ensure: defined content as '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2'

Our final low-level prototype is thus as follows.

Final low-level prototype

# modules/demo/lib/puppet/type/file_llmethod.rb
# frozen_string_literal: true
require 'digest'
Puppet::Type.newtype(:file_llmethod) do
  newparam(:path, namevar: true) do
    validate do |value|
      fail "#{value} is not a String" unless value.is_a?(String)
      fail "#{value} is not an absolute path" unless File.absolute_path?(value)
    end
  end
  newproperty(:content) do
    validate do |value|
      fail "#{value} is not a String" unless value.is_a?(String)
    end
    munge do |value|
      "{sha256}#{Digest::SHA256.hexdigest(value)}"
    end
  end
  newproperty(:ensure, :parent => Puppet::Property::Ensure) do
    defaultvalues
    define_method(:change_to_s) do |currentvalue, newvalue|
      if currentvalue == :absent
        should = @resource.property(:content).should
        "defined content as '#{should}'"
      else
        super(currentvalue, newvalue)
      end
    end
  end
end

# modules/demo/lib/puppet/provider/file_llmethod/ruby.rb
# frozen_string_literal: true
Puppet::Type.type(:file_llmethod).provide(:ruby) do
  def exists?
    File.exist?(@resource[:name])
  end
  def create
    # content= ignores its argument and writes the original catalog value
    self.content=(:dummy)
  end
  def destroy
    File.unlink(@resource[:name])
  end
  def content
    File.open(@resource[:path], 'r') do |file|
      sha = Digest::SHA256.new
      while (chunk = file.read(2**16))
        sha << chunk
      end
      "{sha256}#{sha.hexdigest}"
    end
  end
  def content=(_value)
    # value is munged, but we need to write the original
    File.write(@resource[:path], @resource.parameter(:content).shouldorig[0])
  end
end

Resource-API prototype

According to the Resource-API documentation, we need to define our new file_rsapi type by calling Puppet::ResourceApi.register_type with several parameters, including the desired attributes, even ensure.

# modules/demo/lib/puppet/type/file_rsapi.rb
require 'puppet/resource_api'
Puppet::ResourceApi.register_type(
  name: 'file_rsapi',
  attributes: {
    content: {
      desc: 'description of content parameter',
      type: 'String'
    },
    ensure: {
      default: 'present',
      desc: 'description of ensure parameter',
      type: 'Enum[present, absent]'
    },
    path: {
      behaviour: :namevar,
      desc: 'description of path parameter',
      type: 'Pattern[/\A\/([^\n\/\0]+\/*)*\z/]'
    },
  },
  desc: 'description of file_rsapi'
)

The path attribute uses the built-in Pattern data type. Stdlib::Absolutepath would have been more convenient, but external data types are not yet possible with the Resource-API.
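For comparison with the File.absolute_path? check of the low-level prototype, the regular expression inside the Pattern type can be tried in plain Ruby. ABSOLUTE_PATH and pattern_absolute? are names chosen for this sketch; the regexp is the one from the type definition, written as a %r{} literal.

```ruby
# Regexp from the Pattern[...] type of the path attribute, written as a
# %r{} literal so that "/" needs no escaping.
ABSOLUTE_PATH = %r{\A/([^\n/\0]+/*)*\z}

def pattern_absolute?(path)
  ABSOLUTE_PATH.match?(path)
end

['/', '/home/demo/puppet-file-rsapi-create', './relative/path', "/foo\nbar"].each do |path|
  puts "#{path.inspect.ljust(40)} Pattern: #{pattern_absolute?(path).to_s.ljust(5)} " \
       "File.absolute_path?: #{File.absolute_path?(path)}"
end
```

Unlike File.absolute_path?, which on Linux only looks at the leading slash, the pattern also rejects paths containing newlines or NUL bytes.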

In comparison with our low-level prototype, the above type definition has no SHA256-munging and SHA256-output counterparts. The canonicalize provider feature looks similar to munging, but we skip it for now.

The Resource-API documentation tells us to implement a get and a set method in our provider, stating:

The get method reports the current state of the managed resources. It returns an enumerable of all existing resources. Each resource is a hash with attribute names as keys, and their respective values as values.

This requirement is the first bummer, as we definitely do not want to read every existing file, including its content, into memory. We can simply ignore this requirement; how would the Resource-API know anyway?

However, the documented signature is def get(context) {...}, where context carries no information about the resource we want to manage.

This would have been a show-stopper if the simple_get_filter provider feature did not exist, which changes the signature to def get(context, names = nil) {...}.

Our first version of file_rsapi is thus the following.

Basic functionality

# modules/demo/lib/puppet/type/file_rsapi.rb
require 'puppet/resource_api'
Puppet::ResourceApi.register_type(
  name: 'file_rsapi',
  features: %w[simple_get_filter],
  attributes: {
    content: {
      desc: 'description of content parameter',
      type: 'String'
    },
    ensure: {
      default: 'present',
      desc: 'description of ensure parameter',
      type: 'Enum[present, absent]'
    },
    path: {
      behaviour: :namevar,
      desc: 'description of path parameter',
      type: 'Pattern[/\A\/([^\n\/\0]+\/*)*\z/]'
    },
  },
  desc: 'description of file_rsapi'
)

# modules/demo/lib/puppet/provider/file_rsapi/file_rsapi.rb
require 'digest'
class Puppet::Provider::FileRsapi::FileRsapi
  def get(context, names)
    (names or []).map do |name|
      File.exist?(name) ? {
        path: name,
        ensure: 'present',
        content: filedigest(name),
      } : nil
    end.compact # remove non-existing resources
  end
  def set(context, changes)
    changes.each do |path, change|
      if change[:should][:ensure] == 'present'
        File.write(path, change[:should][:content])
      elsif File.exist?(path)
        File.delete(path)
      end
    end
  end
  def filedigest(path)
    File.open(path, 'r') do |file|
      sha = Digest::SHA256.new
      while (chunk = file.read(2**16))
        sha << chunk
      end
      "{sha256}#{sha.hexdigest}"
    end
  end
end

The desired content is written to the file correctly, but once again there is no SHA256 checksum on creation. There are also unnecessary writes, because the checksum returned by get never matches the cleartext from the catalog:

demo@85c63b50bfa3:~$ cat > file_rsapi_create.pp <<'EOF'

$file = '/home/demo/puppet-file-rsapi-create'

file {$file: ensure => absent} ->

file_rsapi {$file: content => 'This is Sparta!'}

EOF

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_rsapi_create.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.03 seconds

Notice: /Stage[main]/Main/File[/home/demo/puppet-file-rsapi-create]/ensure: removed

Notice: /Stage[main]/Main/File_rsapi[/home/demo/puppet-file-rsapi-create]/ensure: defined 'ensure' as 'present'

Notice: Applied catalog in 0.02 seconds

demo@85c63b50bfa3:~$ cat > file_rsapi_change.pp <<'EOF'

$file = '/home/demo/puppet-file-rsapi-change'

file {$file: content => 'This is madness'} ->

file_rsapi {$file: content => 'This is Sparta!'}

EOF

demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_rsapi_change.pp

Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.03 seconds

Notice: /Stage[main]/Main/File[/home/demo/puppet-file-rsapi-change]/content: content changed '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2' to '{sha256}0549defd0a7d6d840e3a69b82566505924cacbe2a79392970ec28cddc763949e'

Notice: /Stage[main]/Main/File_rsapi[/home/demo/puppet-file-rsapi-change]/content: content changed '{sha256}0549defd0a7d6d840e3a69b82566505924cacbe2a79392970ec28cddc763949e' to 'This is Sparta!'

Notice: Applied catalog in 0.03 seconds
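The mismatch can be reproduced outside of Puppet. This sketch uses a hypothetical standalone get_state function with the same logic as get above: the is-state holds a digest while the catalog holds cleartext, so the two never compare equal and set keeps being called.

```ruby
require 'digest'
require 'tmpdir'

# Hypothetical standalone version of the get logic above: it reports the
# file content as a "{sha256}..." digest, never as cleartext.
def get_state(names)
  (names || []).filter_map do |name|
    next unless File.exist?(name)

    { path: name, ensure: 'present', content: "{sha256}#{Digest::SHA256.file(name).hexdigest}" }
  end
end

Dir.mktmpdir do |dir|
  path = File.join(dir, 'puppet-file-rsapi-change')
  File.write(path, 'This is Sparta!')
  is = get_state([path]).first[:content]
  should = 'This is Sparta!' # cleartext from the catalog
  puts is == should # false: digest and cleartext never match
end
```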

Hence we enable and implement the canonicalize provider feature.

Canonicalize

According to the documentation, canonicalize is applied to the results of get as well as to the catalog properties. On the one hand, we do not want to read the file’s content into memory; on the other hand, we must not checksum the already checksummed value returned by get a second time.
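Why a naive canonicalize is a problem can be shown in a few lines of plain Ruby. naive_canonicalize is a hypothetical helper that digests every content value it sees; applied to both sides, it digests the is-state from get a second time, so is and should still differ.

```ruby
require 'digest'

def digest_label(text)
  "{sha256}#{Digest::SHA256.hexdigest(text)}"
end

# Naive canonicalize: digests every content value it is given,
# no matter whether it came from the catalog or from get.
def naive_canonicalize(resources)
  resources.map { |resource| resource.merge(content: digest_label(resource[:content])) }
end

catalog = [{ path: '/tmp/demo', content: 'This is Sparta!' }]
# get already reports the digest of the file's content
from_get = [{ path: '/tmp/demo', content: digest_label('This is Sparta!') }]

should = naive_canonicalize(catalog).first[:content]
is = naive_canonicalize(from_get).first[:content]
puts should == is # false: the is-state has been digested twice
```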

A simple approach would be to check whether the canonicalize call happens after the get call:

def canonicalize(context, resources)
  if @stage == :get
    # do nothing, is-state canonicalization already performed by get()
  else
    # catalog canonicalization
    ...
  end
end
def get(context, paths)
  @stage = :get
  ...
end

While this works with the current implementation of the Resource-API, there is no guarantee about the order of canonicalize calls. Instead, we subclass String and handle checksumming internally. We also report state changes through the appropriate context methods.

Our final Resource-API-based prototype implementation is:

# modules/demo/lib/puppet/type/file_rsapi.rb
# frozen_string_literal: true
require 'puppet/resource_api'
Puppet::ResourceApi.register_type(
  name: 'file_rsapi',
  features: %w[simple_get_filter canonicalize],
  attributes: {
    content: {
      desc: 'description of content parameter',
      type: 'String'
    },
    ensure: {
      default: 'present',
      desc: 'description of ensure parameter',
      type: 'Enum[present, absent]'
    },
    path: {
      behaviour: :namevar,
      desc: 'description of path parameter',
      type: 'Pattern[/\A\/([^\n\/\0]+\/*)*\z/]'
    }
  },
  desc: 'description of file_rsapi'
)

# modules/demo/lib/puppet/provider/file_rsapi/file_rsapi.rb
# frozen_string_literal: true
require 'digest'
require 'pathname'
class Puppet::Provider::FileRsapi::FileRsapi
  class CanonicalString < String
    attr_reader :original
    def class
      # Mask as String for YAML.dump to mitigate
      # Error: Transaction store file /var/cache/puppet/state/transactionstore.yaml
      # is corrupt ((/var/cache/puppet/state/transactionstore.yaml): Tried to
      # load unspecified class: Puppet::Provider::FileRsapi::FileRsapi::CanonicalString)
      String
    end
    def self.from(obj)
      return obj if obj.is_a?(self)
      return new(filedigest(obj)) if obj.is_a?(Pathname)
      new("{sha256}#{Digest::SHA256.hexdigest(obj)}", obj)
    end
    def self.filedigest(path)
      File.open(path, 'r') do |file|
        sha = Digest::SHA256.new
        while (chunk = file.read(2**16))
          sha << chunk
        end
        "{sha256}#{sha.hexdigest}"
      end
    end
    def initialize(canonical, original = nil)
      @original = original
      super(canonical)
    end
  end
  def canonicalize(_context, resources)
    resources.each do |resource|
      next if resource[:ensure] == 'absent'
      resource[:content] = CanonicalString.from(resource[:content])
    end
    resources
  end
  def get(_context, names)
    (names or []).map do |name|
      next unless File.exist?(name)
      {
        content: CanonicalString.from(Pathname.new(name)),
        ensure: 'present',
        path: name
      }
    end.compact # remove non-existing resources
  end
  def set(context, changes)
    changes.each do |path, change|
      if change[:should][:ensure] == 'present'
        File.write(path, change[:should][:content].original)
        if change[:is][:ensure] == 'present'
          # The only other possible change is due to content,
          # but content change transition info is covered implicitly
        else
          context.created("#{path} with content '#{change[:should][:content]}'")
        end
      elsif File.exist?(path)
        File.delete(path)
        context.deleted(path)
      end
    end
  end
end

It gives us:

demo@85c63b50bfa3:~$ cat > file_rsapi_create.pp <<'EOF'
$file = '/home/demo/puppet-file-rsapi-create'
file {$file: ensure => absent} ->
file_rsapi {$file: content => 'This is Sparta!'}
EOF
demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_rsapi_create.pp
Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.03 seconds
Notice: /Stage[main]/Main/File[/home/demo/puppet-file-rsapi-create]/ensure: removed
Notice: /Stage[main]/Main/File_rsapi[/home/demo/puppet-file-rsapi-create]/ensure: defined 'ensure' as 'present'
Notice: file_rsapi: Created: /home/demo/puppet-file-rsapi-create with content '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2'

demo@85c63b50bfa3:~$ cat > file_rsapi_change.pp <<'EOF'
$file = '/home/demo/puppet-file-rsapi-change'
file {$file: content => 'This is madness'} ->
file_rsapi {$file: content => 'This is Sparta!'}
EOF
demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_rsapi_change.pp
Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.03 seconds
Notice: /Stage[main]/Main/File[/home/demo/puppet-file-rsapi-change]/content: content changed '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2' to '{sha256}0549defd0a7d6d840e3a69b82566505924cacbe2a79392970ec28cddc763949e'
Notice: /Stage[main]/Main/File_rsapi[/home/demo/puppet-file-rsapi-change]/content: content changed '{sha256}0549defd0a7d6d840e3a69b82566505924cacbe2a79392970ec28cddc763949e' to '{sha256}823cbb079548be98b892725b133df610d0bff46b33e38b72d269306d32b73df2'

demo@85c63b50bfa3:~$ cat > file_rsapi_remove.pp <<'EOF'
$file = '/home/demo/puppet-file-rsapi-remove'
file {$file: ensure => present} ->
file_rsapi {$file: ensure => absent}
EOF
demo@85c63b50bfa3:~$ bin/puppet apply --modulepath modules file_rsapi_remove.pp
Notice: Compiled catalog for 85c63b50bfa3 in environment production in 0.03 seconds
Notice: /Stage[main]/Main/File[/home/demo/puppet-file-rsapi-remove]/ensure: created
Notice: /Stage[main]/Main/File_rsapi[/home/demo/puppet-file-rsapi-remove]/ensure: undefined 'ensure' from 'present'
Notice: file_rsapi: Deleted: /home/demo/puppet-file-rsapi-remove

Thoughts

The apparent need for a CanonicalString masking as String makes it look like we are missing something. If the Resource-API only checked data types after canonicalization, we could make get return something simpler than CanonicalString to signal an already canonicalized value.

The Resource-API's default requirement that get returns all existing resources simplifies development if you planned to implement it that way anyway. With the low-level type and provider API, the equivalent is usually a combination of prefetch and instances.

Introduction

Redis is a widely used, high-performance, in-memory key-value database that can also serve as a cache and message broker. Its performance and versatility have made it a go-to choice for many. Many cloud providers offer Redis-based solutions:

- Amazon Web Services (AWS) – Amazon ElastiCache for Redis

- Microsoft Azure – Azure Cache for Redis

- Google Cloud Platform (GCP) – Google Cloud Memorystore for Redis

However, due to recent changes in the licensing model of Redis, its prominence and usage are changing. Redis was initially developed under the open-source BSD license, allowing developers to freely use, modify and distribute the source code for both commercial and non-commercial purposes. As a result, Redis quickly gained popularity in the developer community.

However, Redis has recently switched to a dual source-available license: from now on, it will be available under RSALv2 (Redis Source Available License Version 2) or SSPLv1 (Server Side Public License Version 1), and commercial use requires individual agreements, potentially increasing costs for cloud service providers. For a detailed overview of these changes, refer to the Redis licensing page. With the Redis Community Edition, the source code remains freely available to developers, customers, and partners of the company. However, cloud service providers and others who want to use Redis as part of commercial offerings will have to make individual agreements with the provider.

Due to these recent changes in Redis's licensing model, many developers and organizations are re-evaluating their choice of in-memory key-value database. Valkey, an open-source fork of Redis, retains its high performance and versatility while allowing unrestricted use by both developers and commercial entities. The project was forked under the umbrella of the Linux Foundation, and contributors are now supporting Valkey. More information can be found here and here. Its commitment to open-source principles has gained support from major cloud providers, including Amazon Web Services (AWS), which announced: "AWS is committed to supporting open source Valkey for the long term". More information can be found here. So now may be the right time to switch your infrastructure from Redis to Valkey.

In this article, we will set up a Valkey instance with TLS and outline the steps to migrate your data from Redis seamlessly.

Overview of possible migration approaches

In general, there are several approaches to migrate:

- Reuse the database file

In this approach, Redis is shut down so that the RDB file on disk is up to date, and Valkey is started with this file in its data directory.

- Use REPLICAOF to connect Valkey to the Redis instance

Register the new Valkey instance as a replica of the Redis master to stream the data. The Valkey instance and its network must be able to reach the Redis service.

- Automated data migration to Valkey

The migration is scripted and run on a machine that can reach both the Redis and the Valkey databases.

In this blog article, we assume that direct access to the file system of the Redis server in the cloud is not possible, so the database file cannot be reused, and that the Valkey and Redis services are in different networks and cannot reach each other, so a replica cannot be set up. As a result, we choose the third option and run an automated data migration script on a different machine, which can connect to both servers and transfer the data.

Setup of Valkey

If you are using a cloud service, please consult its instructions on how to set up a Valkey instance. Since Valkey is a new project, only a few distributions provide ready-to-use packages, for example Red Hat Enterprise Linux 8 and 9 via Extra Packages for Enterprise Linux (EPEL). In this blog post, we use an on-premises Debian 12 server to host Valkey 7.2.6 with TLS. Please consult your distribution's guides to install Valkey, or use the manual provided on GitHub. The migration itself will be done by a Python 3 script using TLS.

Start the server and establish a client connection:

In this blog article, we use a server with the TLS parameters listed below. We specify all TLS parameters used, including port 0, which disables the non-TLS port completely:

$ valkey-server --tls-port 6379 --port 0 --tls-cert-file ./tls/redis.crt --tls-key-file ./tls/redis.key --tls-ca-cert-file ./tls/ca.crt

[Valkey ASCII-art startup banner: Valkey 7.2.6 (579cca5f/0) 64 bit, Running in standalone mode, Port: 6379, PID: 436767, https://valkey.io]

436767:M 27 Aug 2024 16:08:56.058 * Server initialized
436767:M 27 Aug 2024 16:08:56.058 * Loading RDB produced by valkey version 7.2.6
[...]
436767:M 27 Aug 2024 16:08:56.058 * Ready to accept connections tls

Now it is time to test the connection with a client using TLS:

$ valkey-cli --tls --cert ./tls/redis.crt --key ./tls/redis.key --cacert ./tls/ca.crt -p 6379
127.0.0.1:6379> INFO SERVER
# Server
server_name:valkey
valkey_version:7.2.6
[...]

Automated data migration to Valkey

Finally, we migrate the data in this example using a Python 3 script. The script establishes connections to both the Redis source and the Valkey target databases, fetches all keys from the Redis database, and creates or updates each key-value pair in the Valkey database. This approach is not an off-the-shelf tool; it is built on the redis-py library, which provides a list of examples. Because it is plain Python, the process can be extended to filter out unwanted data, adapt values to the new environment, or add sanity checks. The script used here reports its progress during the migration:

#!/usr/bin/env python3
import redis

# Connect to the Redis source database, which is password protected, via IP and port
redis_client = redis.StrictRedis(host='172.17.0.3', port=6379, password='secret', db=0)

# Connect to the Valkey target database, which is using TLS
ssl_certfile = "./tls/client.crt"
ssl_keyfile = "./tls/client.key"
ssl_ca_certs = "./tls/ca.crt"

valkey_client = redis.Redis(
    host="192.168.0.3",
    port=6379,
    ssl=True,
    ssl_certfile=ssl_certfile,
    ssl_keyfile=ssl_keyfile,
    ssl_cert_reqs="required",
    ssl_ca_certs=ssl_ca_certs,
)

# Fetch all keys from the Redis database
# (KEYS walks the whole keyspace in one blocking call on the source server)
keys = redis_client.keys('*')
print("Found", len(keys), "Keys in Source!")

# Migrate each key-value pair to the Valkey database
# (GET/SET only handles string values: other types such as hashes or lists
# raise a WRONGTYPE error, and expiry times are not copied)
for counter, key in enumerate(keys):
    value = redis_client.get(key)
    valkey_client.set(key, value)
    print("Status: ", round((counter + 1) / len(keys) * 100, 1), "%", end='\r')

print()

To start the process, execute the script:

$ python3 redis_to_tls_valkey.py
Found 569383 Keys in Source!
Status: 100.0 %
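If the source contains data types other than strings, or keys with an expiry time, the GET/SET loop is not sufficient, and KEYS can stall a busy source server. A possible alternative, sketched below under stated assumptions, walks the keyspace incrementally with SCAN and copies each key with DUMP/RESTORE, which preserves the data type and the remaining TTL. The function name `migrate_keys` is hypothetical; the clients are assumed to be redis-py `redis.Redis` objects like the ones in the script above. RESTORE only accepts a DUMP payload whose serialization format the target understands, so this assumes compatible versions (for example a Redis 7.2 source, from which Valkey 7.2 was forked); with a newer source you would have to fall back to type-specific copying.

```python
def migrate_keys(source, target, batch_size: int = 1000) -> int:
    """Copy every key from source to target with DUMP/RESTORE.

    source and target are assumed to be redis-py clients (redis.Redis);
    any object offering scan_iter(), dump(), pttl() and restore() works.
    Returns the number of keys copied.
    """
    copied = 0
    # SCAN iterates the keyspace in small steps instead of blocking like KEYS *
    for key in source.scan_iter(count=batch_size):
        payload = source.dump(key)  # serialized value, works for every data type
        if payload is None:
            continue  # key expired or was deleted after SCAN returned it
        ttl_ms = source.pttl(key)  # -1: no expiry, -2: key no longer exists
        if ttl_ms == -2:
            continue
        # RESTORE treats a TTL of 0 as "no expiry"; replace=True overwrites existing keys
        target.restore(key, max(ttl_ms, 0), payload, replace=True)
        copied += 1
    return copied
```

With the two clients from the script above, the call would be `migrate_keys(redis_client, valkey_client)`. Each key costs three round trips to the source and one to the target, so for very large datasets batching the commands in a redis-py pipeline may be worth considering.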

As a last step, configure your application to connect to the new Valkey server.
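Ideally before that switch, a quick spot check can catch obvious gaps in the migrated data. The following sketch (the function name `verify_migration` is hypothetical; the clients are assumed to be redis-py `redis.Redis` objects) compares the key counts via DBSIZE and checks a number of randomly chosen source keys (RANDOMKEY) for existence and data type in the target. It is only meaningful once writes to the Redis source have stopped, and it is a sample, not a full comparison.

```python
def verify_migration(source, target, samples: int = 100) -> list:
    """Return a list of problems; an empty list means the spot check passed."""
    problems = []
    src_size, dst_size = source.dbsize(), target.dbsize()
    if src_size != dst_size:
        problems.append(f"key count differs: source={src_size}, target={dst_size}")
    for _ in range(samples):
        key = source.randomkey()  # None if the source database is empty
        if key is None:
            break
        if not target.exists(key):
            problems.append(f"missing in target: {key!r}")
        elif source.type(key) != target.type(key):
            problems.append(f"type mismatch for {key!r}")
    return problems
```

With the clients from the migration script, `verify_migration(redis_client, valkey_client)` returns an empty list if no discrepancy was found.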

Conclusion

Since the change of Redis's license, the new Valkey project has been gaining more and more traction. Migrating to Valkey ensures continued access to a robust, open-source in-memory database without the licensing restrictions of Redis. Whether you run your infrastructure on-premises or in the cloud, this guide provides the steps needed for a successful migration. Migrating from a cloud instance to a new environment can be cumbersome when there is no direct file access or the networks are isolated. Given these circumstances, we used a Python script, which offers a flexible way to implement the various steps needed to master the task.

If you find this guide helpful and need support migrating your databases, feel free to contact us. We are happy to support you on-premises or in cloud environments.

Mastering Cloud Infrastructure with Pulumi: Introduction

In today’s rapidly changing landscape of cloud computing, managing infrastructure as code (IaC) has become essential for developers and IT professionals. Pulumi, an open-source IaC tool, brings a fresh perspective to the table by enabling infrastructure management using popular programming languages like JavaScript, TypeScript, Python, Go, and C#. This approach offers a unique blend of flexibility and power, allowing developers to leverage their existing coding skills to build, deploy, and manage cloud infrastructure. In this post, we’ll explore the world of Pulumi and see how it pairs with Amazon FSx for NetApp ONTAP—a robust solution for scalable and efficient cloud storage.

Pulumi – The Theory

Why Pulumi?

Pulumi distinguishes itself among IaC tools for several compelling reasons:

- Use Familiar Programming Languages: Unlike traditional IaC tools that rely on domain-specific languages (DSLs), Pulumi allows you to use familiar programming languages. This means no need to learn new syntax, and you can incorporate sophisticated logic, conditionals, and loops directly in your infrastructure code.

- Seamless Integration with Development Workflows: Pulumi integrates effortlessly with existing development workflows and tools, making it a natural fit for modern software projects. Whether you’re managing a simple web app or a complex, multi-cloud architecture, Pulumi provides the flexibility to scale without sacrificing ease of use.

Challenges with Pulumi

Like any tool, Pulumi comes with its own set of challenges:

- Learning Curve: While Pulumi leverages general-purpose languages, developers need to be proficient in the language they choose, such as Python or TypeScript. This can be a hurdle for those unfamiliar with these languages.

- Growing Ecosystem: As a relatively new tool, Pulumi’s ecosystem is still expanding. It might not yet match the extensive plugin libraries of older IaC tools, but its vibrant and rapidly growing community is a promising sign of things to come.

State Management in Pulumi: Ensuring Consistency Across Deployments

Effective infrastructure management hinges on proper state handling. Pulumi tracks the state of your infrastructure, which lets it determine exactly what needs to be created, updated, or deleted during a deployment. Pulumi offers several options for state storage:

- Local State: Stored directly on your local file system. This option is ideal for individual projects or simple setups.

- Remote State: By default, Pulumi stores state remotely on the Pulumi Service (a cloud-hosted platform provided by Pulumi), but it also allows you to configure storage on AWS S3, Azure Blob Storage, or Google Cloud Storage. This is particularly useful in team environments where collaboration is essential.

Managing state effectively is crucial for maintaining consistency across deployments, especially in scenarios where multiple team members are working on the same infrastructure.

Other IaC Tools: Comparing Pulumi to Traditional IaC Tools

When comparing Pulumi to other Infrastructure as Code (IaC) tools, several drawbacks of traditional approaches become evident:

- Domain-Specific Language (DSL) Limitations: Many IaC tools depend on DSLs, such as Terraform’s HCL, requiring users to learn a specialized language specific to the tool.

- YAML/JSON Constraints: Tools that rely on YAML or JSON can be both restrictive and verbose, complicating the management of more complex configurations.

- Steep Learning Curve: The necessity to master DSLs or particular configuration formats adds to the learning curve, especially for newcomers to IaC.

- Limited Logical Capabilities: DSLs often lack support for advanced logic constructs such as loops, conditionals, and reusability. This limitation can lead to repetitive code that is challenging to maintain.

- Narrow Ecosystem: Some IaC tools have a smaller ecosystem, offering fewer plugins, modules, and community-driven resources.

- Challenges with Code Reusability: The inability to reuse code across different projects or components can hinder efficiency and scalability in infrastructure management.

- Testing Complexity: Testing infrastructure configurations written in DSLs can be challenging, making it difficult to ensure the reliability and robustness of the infrastructure code.
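To illustrate the point about loops and reusable logic from the Pulumi side, the following sketch derives one subnet CIDR per Availability Zone from a VPC range using Python's standard `ipaddress` module. The function `plan_subnets` and its parameters are hypothetical, and the sketch deliberately stops short of calling Pulumi so that it runs without AWS credentials; in a Pulumi program, each resulting dict could be passed to `aws.ec2.Subnet`.

```python
import ipaddress
from itertools import islice


def plan_subnets(vpc_cidr: str, zones: list, new_prefix: int = 24, offset: int = 0) -> list:
    """Assign one /new_prefix block of the VPC range to each Availability Zone."""
    all_blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    blocks = islice(all_blocks, offset, None)  # skip the first `offset` blocks
    plan = [
        {"name": f"subnet-{zone}", "availability_zone": zone, "cidr_block": str(block)}
        for zone, block in zip(zones, blocks)
    ]
    if len(plan) != len(zones):
        raise ValueError(f"{vpc_cidr} has too few /{new_prefix} blocks for {len(zones)} zones")
    return plan


for spec in plan_subnets("10.0.0.0/16", ["eu-central-1a", "eu-central-1b"], offset=3):
    # In a Pulumi program: aws.ec2.Subnet(spec["name"], vpc_id=vpc.id,
    #     cidr_block=spec["cidr_block"], availability_zone=spec["availability_zone"])
    print(spec)
```

Adding a third zone is then a change to a list rather than a copy of a whole resource block, and the CIDR arithmetic is done by a library instead of by hand.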

Pulumi – In Practice

Introduction

In this section, we'll dive into a practical example to better understand Pulumi's capabilities. We'll also explore how to set up a project using Pulumi with AWS and automate it using GitHub Actions for CI/CD.

Prerequisites

Before diving into using Pulumi with AWS and automating your infrastructure management through GitHub Actions, ensure you have the following prerequisites in place:

- Pulumi CLI: Begin by installing the Pulumi CLI by following the official installation instructions. After installation, verify that Pulumi is correctly set up and accessible in your system’s PATH by running a quick version check.

- AWS CLI: Install the AWS CLI, which is essential for interacting with AWS services. Configure the AWS CLI with your AWS credentials to ensure you have access to the necessary AWS resources. Ensure your AWS account is equipped with the required permissions, especially for IAM, EC2, S3, and any other AWS services you plan to manage with Pulumi.

- AWS IAM User/Role for GitHub Actions: Create a dedicated IAM user or role in AWS specifically for use in your GitHub Actions workflows. This user or role should have permissions necessary to manage the resources in your Pulumi stack. Store the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY securely as secrets in your GitHub repository.

- Pulumi Account: Set up a Pulumi account if you haven’t already. Generate a Pulumi access token and store it as a secret in your GitHub repository to facilitate secure automation.

- Python and Pip: Install Python (version 3.7 or higher is recommended) along with Pip, which are necessary for Pulumi’s Python SDK. Once Python is installed, proceed to install Pulumi’s Python SDK along with any required AWS packages to enable infrastructure management through Python.

- GitHub Account: Ensure you have an active GitHub account to host your code and manage your repository. Create a GitHub repository where you’ll store your Pulumi project and related automation workflows. Store critical secrets like AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and your Pulumi access token securely in the GitHub repository’s secrets section.

- GitHub Runners: Utilize GitHub-hosted runners to execute your GitHub Actions workflows, or set up self-hosted runners if your project requires them. Confirm that the runners have all necessary tools installed, including Pulumi, AWS CLI, Python, and any other dependencies your Pulumi project might need.

Project Structure

When working with Infrastructure as Code (IaC) using Pulumi, maintaining an organized project structure is essential. A clear and well-defined directory structure not only streamlines the development process but also improves collaboration and deployment efficiency. In this post, we’ll explore a typical directory structure for a Pulumi project and explain the significance of each component.

Overview of a Typical Pulumi Project Directory

A standard Pulumi project might be organized as follows:

/project-root
├── .github
│   └── workflows
│       └── workflow.yml    # GitHub Actions workflow for CI/CD
├── __main__.py             # Entry point for the Pulumi program
├── infra.py                # Infrastructure code
├── Pulumi.dev.yaml         # Pulumi configuration for the development environment
├── Pulumi.prod.yaml        # Pulumi configuration for the production environment
├── Pulumi.yaml             # Pulumi configuration (common or default settings)
├── requirements.txt        # Python dependencies
└── test_infra.py           # Tests for infrastructure code

NetApp FSx on AWS

Introduction

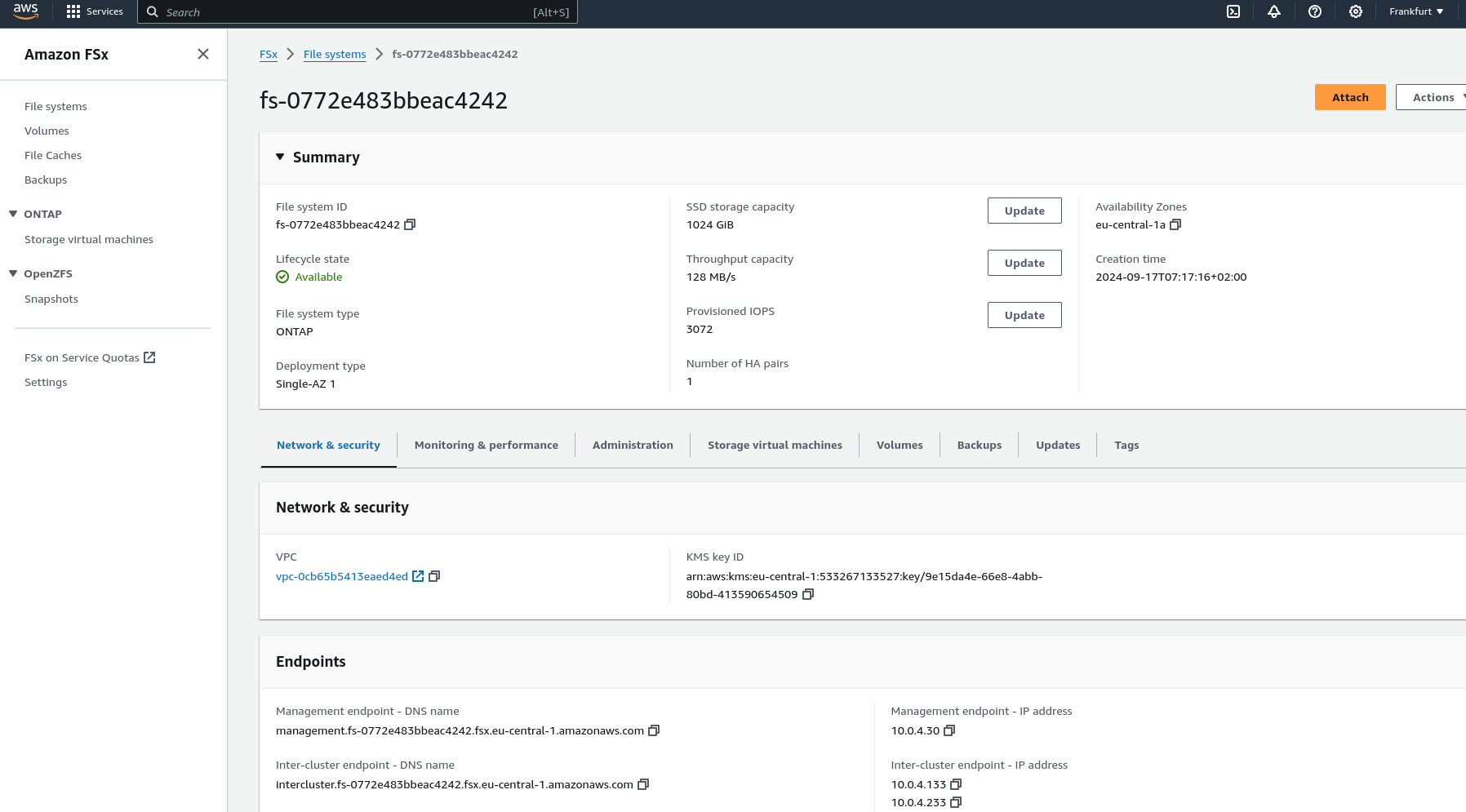

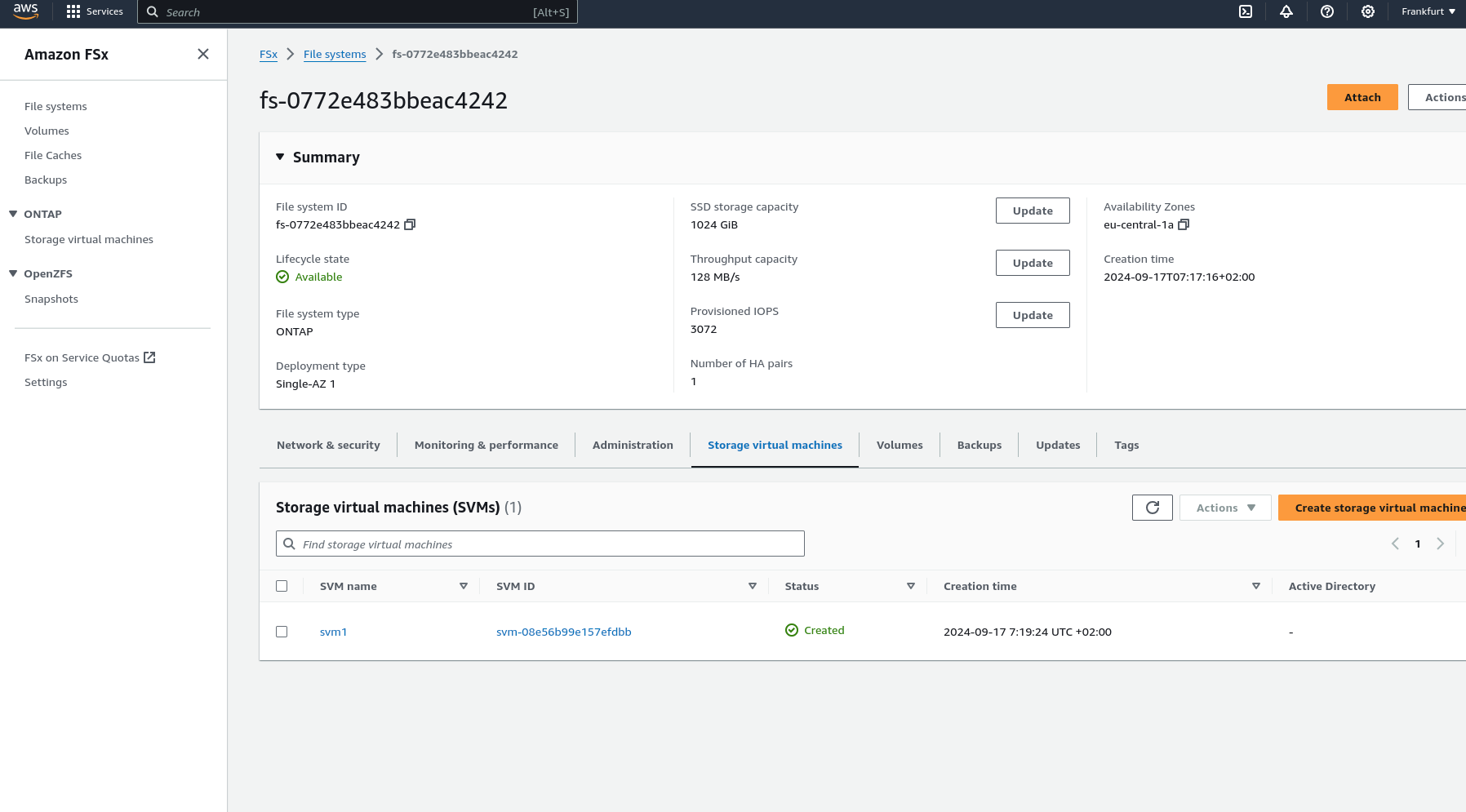

Amazon FSx for NetApp ONTAP offers a fully managed, scalable storage solution built on the NetApp ONTAP file system. It provides high-performance, highly available shared storage that seamlessly integrates with your AWS environment. Leveraging the advanced data management capabilities of ONTAP, FSx for NetApp ONTAP is ideal for applications needing robust storage features and compatibility with existing NetApp systems.

Key Features

- High Performance: FSx for ONTAP delivers low-latency storage designed to handle demanding, high-throughput workloads.

- Scalability: Capable of scaling to support petabytes of storage, making it suitable for both small and large-scale applications.

- Advanced Data Management: Leverages ONTAP’s comprehensive data management features, including snapshots, cloning, and disaster recovery.

- Multi-Protocol Access: Supports NFS and SMB protocols, providing flexible access options for a variety of clients.

- Cost-Effectiveness: Implements tiering policies to automatically move less frequently accessed data to lower-cost storage, helping optimize storage expenses.

What It’s About

In the next sections, we’ll walk through the specifics of setting up each component using Pulumi code, illustrating how to create a VPC, configure subnets, set up a security group, and deploy an FSx for NetApp ONTAP file system, all while leveraging the robust features provided by both Pulumi and AWS.

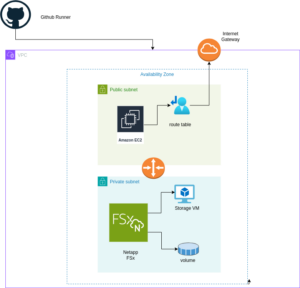

Architecture Overview

A visual representation of the architecture we’ll deploy using Pulumi: Single AZ Deployment with FSx and EC2

The diagram above illustrates the architecture for deploying an FSx for NetApp ONTAP file system within a single Availability Zone. The setup includes a VPC with public and private subnets, an Internet Gateway for outbound traffic, and a Security Group controlling access to the FSx file system and the EC2 instance. The EC2 instance is configured to mount the FSx volume using NFS, enabling seamless access to storage.

Setting up Pulumi

Follow these steps to set up Pulumi and integrate it with AWS:

Install Pulumi: Begin by installing Pulumi using the following command:

curl -fsSL https://get.pulumi.com | sh

Install AWS CLI: If you haven't installed it yet, install the AWS CLI to manage AWS services:

pip install awscli

Configure AWS CLI: Configure the AWS CLI with your credentials:

aws configure

Create a New Pulumi Project: Initialize a new Pulumi project with AWS and Python:

pulumi new aws-python

Configure Your Pulumi Stack: Set the AWS region for your Pulumi stack:

pulumi config set aws:region eu-central-1

Deploy Your Stack: Deploy your infrastructure using Pulumi:

pulumi preview ; pulumi up

Example: VPC, Subnets, and FSx for NetApp ONTAP

Let’s dive into an example Pulumi project that sets up a Virtual Private Cloud (VPC), subnets, a security group, an Amazon FSx for NetApp ONTAP file system, and an EC2 instance.

Pulumi Code Example: VPC, Subnets, and FSx for NetApp ONTAP

The first step is to define all the parameters required to set up the infrastructure. You can use the following example to define these parameters in the Pulumi.dev.yaml file.

This Pulumi.dev.yaml file contains the configuration settings for the dev stack of the Pulumi project. It specifies various parameters for the deployment environment, including the AWS region, the Availability Zone, and the SSH key name. It also defines the CIDR blocks for the subnets. These settings are used to configure and deploy cloud infrastructure resources in the specified AWS region.

config:
  aws:region: eu-central-1
  demo:availabilityZone: eu-central-1a
  demo:keyName: XYZ
  demo:subnet1CIDER: 10.0.3.0/24
  demo:subnet2CIDER: 10.0.4.0/24
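Since the subnet CIDR blocks come from configuration rather than code, a small pre-flight check can catch typos before `pulumi up` talks to AWS. The following is a minimal sketch using only Python's `ipaddress` module; the function `validate_subnets` is hypothetical. In infra.py, it could be called right after the `demo_config.require(...)` lines, together with the VPC range `10.0.0.0/16` that infra.py hard-codes.

```python
import ipaddress


def validate_subnets(vpc_cidr: str, subnet_cidrs: dict) -> None:
    """Raise ValueError if a subnet lies outside the VPC or two subnets overlap."""
    vpc = ipaddress.ip_network(vpc_cidr)
    networks = {key: ipaddress.ip_network(cidr) for key, cidr in subnet_cidrs.items()}
    for key, net in networks.items():
        if not net.subnet_of(vpc):
            raise ValueError(f"{key} ({net}) is not inside the VPC range {vpc}")
    keys = list(networks)
    for i, first in enumerate(keys):
        for second in keys[i + 1:]:
            if networks[first].overlaps(networks[second]):
                raise ValueError(f"{first} ({networks[first]}) overlaps {second} ({networks[second]})")


# Values from the configuration example above; no exception means the layout is valid
validate_subnets("10.0.0.0/16", {"subnet1CIDER": "10.0.3.0/24", "subnet2CIDER": "10.0.4.0/24"})
print("subnet layout OK")
```

AWS would reject an invalid layout as well, but only after parts of the stack may already have been created; checking up front keeps the failure local and the error message specific.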

The following code snippet should be placed in the infra.py file. It details the setup of the VPC, subnets, security group, and FSx for NetApp ONTAP file system. Each step in the code is explained through inline comments.

import os

import pulumi
import pulumi_aws as aws
import pulumi_command as command

# Retrieve configuration values from Pulumi configuration files
aws_config = pulumi.Config("aws")
region = aws_config.require("region")  # The AWS region where resources will be deployed

demo_config = pulumi.Config("demo")
availability_zone = demo_config.require("availabilityZone")  # Availability Zone for the deployment
subnet1_cidr = demo_config.require("subnet1CIDER")  # CIDR block for the public subnet
subnet2_cidr = demo_config.require("subnet2CIDER")  # CIDR block for the private subnet
key_name = demo_config.require("keyName")  # Name of the SSH key pair for EC2 instance access

# Create a new VPC with DNS support enabled
vpc = aws.ec2.Vpc(
    "fsxVpc",
    cidr_block="10.0.0.0/16",  # VPC CIDR block
    enable_dns_support=True,  # Enable DNS support in the VPC
    enable_dns_hostnames=True,  # Enable DNS hostnames in the VPC
)

# Create an Internet Gateway to allow internet access from the VPC
internet_gateway = aws.ec2.InternetGateway(
    "vpcInternetGateway",
    vpc_id=vpc.id,  # Attach the Internet Gateway to the VPC
)

# Create a public route table for routing internet traffic via the Internet Gateway
public_route_table = aws.ec2.RouteTable(
    "publicRouteTable",
    vpc_id=vpc.id,
    routes=[aws.ec2.RouteTableRouteArgs(
        cidr_block="0.0.0.0/0",  # Route all traffic (0.0.0.0/0) to the Internet Gateway
        gateway_id=internet_gateway.id,
    )],
)

# Create a single public subnet in the specified Availability Zone
public_subnet = aws.ec2.Subnet(
    "publicSubnet",
    vpc_id=vpc.id,
    cidr_block=subnet1_cidr,  # CIDR block for the public subnet
    availability_zone=availability_zone,  # The specified Availability Zone
    map_public_ip_on_launch=True,  # Assign public IPs to instances launched in this subnet
)

# Create a single private subnet in the same Availability Zone
private_subnet = aws.ec2.Subnet(
    "privateSubnet",
    vpc_id=vpc.id,
    cidr_block=subnet2_cidr,  # CIDR block for the private subnet
    availability_zone=availability_zone,  # The same Availability Zone
)

# Associate the public subnet with the public route table to enable internet access
public_route_table_association = aws.ec2.RouteTableAssociation(
    "publicRouteTableAssociation",
    subnet_id=public_subnet.id,
    route_table_id=public_route_table.id,
)

# Create a security group to control inbound and outbound traffic for the FSx file system
security_group = aws.ec2.SecurityGroup(
    "fsxSecurityGroup",
    vpc_id=vpc.id,
    description="Allow NFS traffic",  # Description of the security group
    ingress=[
        aws.ec2.SecurityGroupIngressArgs(
            protocol="tcp",
            from_port=2049,  # NFS protocol port
            to_port=2049,
            cidr_blocks=["0.0.0.0/0"],  # Allow NFS traffic from anywhere
        ),
        aws.ec2.SecurityGroupIngressArgs(
            protocol="tcp",
            from_port=111,  # RPCBind port for NFS
            to_port=111,
            cidr_blocks=["0.0.0.0/0"],  # Allow RPCBind traffic from anywhere
        ),
        aws.ec2.SecurityGroupIngressArgs(
            protocol="udp",
            from_port=111,  # RPCBind port for NFS over UDP
            to_port=111,
            cidr_blocks=["0.0.0.0/0"],  # Allow RPCBind traffic over UDP from anywhere
        ),
        aws.ec2.SecurityGroupIngressArgs(
            protocol="tcp",
            from_port=22,  # SSH port for EC2 instance access
            to_port=22,
            cidr_blocks=["0.0.0.0/0"],  # Allow SSH traffic from anywhere
        ),
    ],
    egress=[
        aws.ec2.SecurityGroupEgressArgs(
            protocol="-1",  # Allow all outbound traffic
            from_port=0,
            to_port=0,
            cidr_blocks=["0.0.0.0/0"],  # Allow all outbound traffic to anywhere
        ),
    ],
)

# Create the FSx for NetApp ONTAP file system in the private subnet
file_system = aws.fsx.OntapFileSystem(
    "fsxFileSystem",
    subnet_ids=[private_subnet.id],  # Deploy the FSx file system in the private subnet
    preferred_subnet_id=private_subnet.id,  # Preferred subnet for the FSx file system
    security_group_ids=[security_group.id],  # Attach the security group to the FSx file system
    deployment_type="SINGLE_AZ_1",  # Single Availability Zone deployment
    throughput_capacity=128,  # Throughput capacity in MB/s
    storage_capacity=1024,  # Storage capacity in GB
)

# Create a Storage Virtual Machine (SVM) within the FSx file system
storage_virtual_machine = aws.fsx.OntapStorageVirtualMachine(
    "storageVirtualMachine",
    file_system_id=file_system.id,  # Associate the SVM with the FSx file system
    name="svm1",  # Name of the SVM
    root_volume_security_style="UNIX",  # Security style for the root volume
)

# Create a volume within the Storage Virtual Machine (SVM)
volume = aws.fsx.OntapVolume(
    "fsxVolume",
    storage_virtual_machine_id=storage_virtual_machine.id,  # Associate the volume with the SVM
    name="vol1",  # Name of the volume
    junction_path="/vol1",  # Junction path for mounting
    size_in_megabytes=10240,  # Size of the volume in MB
    storage_efficiency_enabled=True,  # Enable storage efficiency features
    tiering_policy=aws.fsx.OntapVolumeTieringPolicyArgs(
        name="SNAPSHOT_ONLY",  # Tiering policy for the volume
    ),
    security_style="UNIX",  # Security style for the volume
)

# Extract the DNS name from the list of SVM endpoints
dns_name = storage_virtual_machine.endpoints.apply(lambda e: e[0]['nfs'][0]['dns_name'])

# Get the latest Amazon Linux 2 AMI for the EC2 instance
ami = aws.ec2.get_ami(
    most_recent=True,
    owners=["amazon"],
    filters=[{"name": "name", "values": ["amzn2-ami-hvm-*-x86_64-gp2"]}],  # Filter for Amazon Linux 2 AMI
)

# Create an EC2 instance in the public subnet
ec2_instance = aws.ec2.Instance(
    "fsxEc2Instance",
    instance_type="t3.micro",  # Instance type for the EC2 instance
    vpc_security_group_ids=[security_group.id],  # Attach the security group to the EC2 instance
    subnet_id=public_subnet.id,  # Deploy the EC2 instance in the public subnet
    ami=ami.id,  # Use the latest Amazon Linux 2 AMI
    key_name=key_name,  # SSH key pair for accessing the EC2 instance
    tags={"Name": "FSx EC2 Instance"},  # Tag for the EC2 instance
)

# User data script to install the NFS client and mount the FSx volume on the EC2 instance
user_data_script = dns_name.apply(lambda dns: f"""#!/bin/bash
sudo yum update -y
sudo yum install -y nfs-utils
sudo mkdir -p /mnt/fsx
if ! mountpoint -q /mnt/fsx; then
    sudo mount -t nfs {dns}:/vol1 /mnt/fsx
fi
""")

# Retrieve the private key for SSH access from environment variables while running with GitHub Actions
# (never print it: the value would end up in the CI logs)
private_key_content = os.getenv("PRIVATE_KEY")

# Run the mount script on the EC2 instance once the FSx volume exists.
# Passing Outputs directly instead of creating the resource inside apply()
# keeps the command visible in `pulumi preview`.
mount_fsx = command.remote.Command(
    "mountFsxFileSystem",
    connection=command.remote.ConnectionArgs(
        host=ec2_instance.public_ip,
        user="ec2-user",
        private_key=private_key_content,
    ),
    create=user_data_script,
    opts=pulumi.ResourceOptions(depends_on=[volume]),
)
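The mount script is currently built inline inside `dns_name.apply(...)`, which makes it hard to check without Pulumi mocks. One option, sketched here, is to move the f-string into a plain function that `apply` calls; `render_mount_script` and its default arguments are hypothetical names chosen for this example, and the behavior matches the inline script (same packages, same junction path, same mount point).

```python
def render_mount_script(dns_name: str, junction_path: str = "/vol1", mount_point: str = "/mnt/fsx") -> str:
    """Build the bash script that installs the NFS client and mounts the FSx volume."""
    return f"""#!/bin/bash
sudo yum update -y
sudo yum install -y nfs-utils
sudo mkdir -p {mount_point}
if ! mountpoint -q {mount_point}; then
    sudo mount -t nfs {dns_name}:{junction_path} {mount_point}
fi
"""


# In infra.py, the inline lambda would become:
# user_data_script = dns_name.apply(render_mount_script)
print(render_mount_script("svm-example.fsx.example.com"))
```

Because the function takes a plain string, a unit test can assert on the generated mount command directly, and a second volume only needs different arguments.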

Pytest with Pulumi

# Importing necessary libraries
import pulumi
import pulumi_aws as aws
from typing import Any, Dict, List

# Setting up configuration values for AWS region and various parameters
# (the keys must match the ones infra.py reads with require())
pulumi.runtime.set_config('aws:region', 'eu-central-1')
pulumi.runtime.set_config('demo:availabilityZone', 'eu-central-1a')
pulumi.runtime.set_config('demo:availabilityZone2', 'eu-central-1b')
pulumi.runtime.set_config('demo:subnet1CIDER', '10.0.3.0/24')
pulumi.runtime.set_config('demo:subnet2CIDER', '10.0.4.0/24')
pulumi.runtime.set_config('demo:keyName', 'XYZ')  # Change based on your own key

# Creating a class MyMocks to mock Pulumi's resources for testing
class MyMocks(pulumi.runtime.Mocks):
    def new_resource(self, args: pulumi.runtime.MockResourceArgs) -> List[Any]:
        # Initialize outputs with the resource's inputs
        outputs = args.inputs

        # Mocking specific resources based on their type
        if args.typ == "aws:ec2/instance:Instance":
            # Mocking an EC2 instance with some default values
            outputs = {
                **args.inputs,  # Start with the given inputs
                "ami": "ami-0eb1f3cdeeb8eed2a",  # Mock AMI ID
                "availability_zone": "eu-central-1a",  # Mock availability zone
                "publicIp": "203.0.113.12",  # Mock public IP
                "publicDns": "ec2-203-0-113-12.compute-1.amazonaws.com",  # Mock public DNS
                "user_data": "mock user data script",  # Mock user data
                "tags": {"Name": "test"},  # Mock tags
            }
        elif args.typ == "aws:ec2/securityGroup:SecurityGroup":
            # Mocking a Security Group with default ingress rules
            outputs = {
                **args.inputs,
                "ingress": [
                    {"from_port": 80, "cidr_blocks": ["0.0.0.0/0"]},  # Allow HTTP traffic from anywhere
                    {"from_port": 22, "cidr_blocks": ["192.168.0.0/16"]},  # Allow SSH traffic from a specific CIDR block
                ],
            }

        # Returning a mocked resource ID and the output values
        return [args.name + '_id', outputs]

    def call(self, args: pulumi.runtime.MockCallArgs) -> Dict[str, Any]:
        # Mocking a call to get an AMI
        if args.token == "aws:ec2/getAmi:getAmi":
            return {
                "architecture": "x86_64",  # Mock architecture
                "id": "ami-0eb1f3cdeeb8eed2a",  # Mock AMI ID
            }
        # Return an empty dictionary if no specific mock is needed
        return {}

# Setting the custom mocks for Pulumi
pulumi.runtime.set_mocks(MyMocks())

# Import the infrastructure to be tested

import infra

# Define a test function to validate the AMI ID of the EC2 instance

@pulumi.runtime.test

def test_instance_ami():

def check_ami(ami_id: str) -> None:

print(f"AMI ID received: {ami_id}")

# Assertion to ensure the AMI ID is the expected one

assert ami_id == "ami-0eb1f3cdeeb8eed2a", 'EC2 instance must have the correct AMI ID'

# Running the test to check the AMI ID

pulumi.runtime.run_in_stack(lambda: infra.ec2_instance.ami.apply(check_ami))

# Define a test function to validate the availability zone of the EC2 instance

@pulumi.runtime.test

def test_instance_az():

def check_az(availability_zone: str) -> None:

print(f"Availability Zone received: {availability_zone}")

# Assertion to ensure the instance is in the correct availability zone

assert availability_zone == "eu-central-1a", 'EC2 instance must be in the correct availability zone'

# Running the test to check the availability zone

pulumi.runtime.run_in_stack(lambda: infra.ec2_instance.availability_zone.apply(check_az))

# Define a test function to validate the tags of the EC2 instance

@pulumi.runtime.test

def test_instance_tags():

def check_tags(tags: Dict[str, Any]) -> None:

print(f"Tags received: {tags}")

# Assertions to ensure the instance has tags and a 'Name' tag

assert tags, 'EC2 instance must have tags'

assert 'Name' in tags, 'EC2 instance must have a Name tag'

# Running the test to check the tags

pulumi.runtime.run_in_stack(lambda: infra.ec2_instance.tags.apply(check_tags))

# Define a test function to validate the user data script of the EC2 instance

@pulumi.runtime.test

def test_instance_userdata():

def check_user_data(user_data_script: str) -> None:

print(f"User data received: {user_data_script}")

# Assertion to ensure the instance has user data configured

assert user_data_script is not None, 'EC2 instance must have user_data_script configured'

# Running the test to check the user data script

pulumi.runtime.run_in_stack(lambda: infra.ec2_instance.user_data.apply(check_user_data))
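The `MyMocks` class also mocks a security group with two ingress rules, yet none of the tests inspect them. A minimal sketch of a policy check for those rules, assuming each rule is a dictionary with the `from_port` and `cidr_blocks` keys used in the mock; the function name `ssh_open_to_world` is hypothetical. Because it is plain Python, it can be unit-tested without the Pulumi engine. Wiring it into a `@pulumi.runtime.test` function depends on how `infra` exposes the security group, which the code above does not show.

```python
import ipaddress
from typing import Any, Dict, List

SSH_PORT = 22


def ssh_open_to_world(ingress: List[Dict[str, Any]]) -> bool:
    """Return True if any rule exposes port 22 to every address (a /0 network).

    Assumes the rule shape of the mocked security group: a single 'from_port'
    and a list of 'cidr_blocks'. Port ranges (from_port/to_port) are not
    handled in this sketch.
    """
    for rule in ingress:
        if rule.get("from_port") != SSH_PORT:
            continue
        for cidr in rule.get("cidr_blocks", []):
            # A prefix length of 0 means 0.0.0.0/0 (IPv4) or ::/0 (IPv6).
            # strict=False tolerates host bits being set in the CIDR string.
            if ipaddress.ip_network(cidr, strict=False).prefixlen == 0:
                return True
    return False


# The same ingress rules as in the mock above
mocked_ingress = [
    {"from_port": 80, "cidr_blocks": ["0.0.0.0/0"]},
    {"from_port": 22, "cidr_blocks": ["192.168.0.0/16"]},
]
print(ssh_open_to_world(mocked_ingress))  # False: SSH is limited to 192.168.0.0/16
```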

GitHub Actions

Introduction

GitHub Actions is a powerful automation tool integrated into GitHub that lets developers automate their workflows, including testing, building, and deploying code. Pulumi, on the other hand, is an Infrastructure as Code (IaC) tool that allows you to manage cloud resources using familiar programming languages. In this post, we’ll explore why GitHub Actions is worth using and what role it plays when combined with Pulumi.

Why Use GitHub Actions and Its Importance

GitHub Actions automates workflows directly within your GitHub repository and offers several key benefits, especially when combined with Pulumi:

- Integrated CI/CD: GitHub Actions seamlessly integrates Continuous Integration and Continuous Deployment (CI/CD) directly into your GitHub repository. This automation enhances consistency in testing, building, and deploying code, reducing the risk of manual errors.

- Custom Workflows: It allows you to create custom workflows for different stages of your software development lifecycle, such as code linting, running unit tests, or managing complex deployment processes. This flexibility ensures your automation aligns with your specific needs.

- Event-Driven Automation: You can trigger GitHub Actions with events like pushes, pull requests, or issue creation. This event-driven approach ensures that tasks are automated precisely when needed, streamlining your workflow.

- Reusable Code: GitHub Actions supports reusable “actions” that can be shared across multiple workflows or repositories. This promotes code reuse and maintains consistency in automation processes.

- Built-in Marketplace: The GitHub Marketplace offers a wide range of pre-built actions from the community, making it easy to integrate third-party services or implement common tasks without writing custom code.

- Enhanced Collaboration: By using GitHub’s pull request and review workflows, teams can discuss and approve changes before deployment. This process reduces risks and improves collaboration on infrastructure changes.

- Automated Deployment: GitHub Actions automates the deployment of infrastructure code, using Pulumi to apply changes. This automation reduces the risk of manual errors and ensures a consistent deployment process.

- Testing: Running tests before deploying with GitHub Actions helps confirm that your infrastructure code works correctly, catching potential issues early and ensuring stability.

- Configuration Management: The workflow sets up the configuration that Pulumi and AWS need, such as credentials and the target region, ensuring your environment is correctly configured for deployments.

- Preview and Apply Changes: GitHub Actions allows you to preview changes before applying them, helping you understand the impact of modifications and minimizing the risk of unintended changes.

- Cleanup: You can optionally destroy the stack after testing or deployment, helping control costs and maintain a clean environment.

Execution

To execute the GitHub Actions workflow:

- Placement: Save the workflow YAML file in your repository’s .github/workflows directory. This setup ensures that GitHub Actions will automatically detect and execute the workflow whenever there’s a push to the main branch of your repository.

- Workflow Actions: The workflow file performs several critical actions:

  - Environment Setup: Configures the necessary environment for running the workflow.

  - Dependency Installation: Installs the required dependencies, including the Pulumi CLI and other Python packages.

  - Testing: Runs your tests to verify that your infrastructure code functions as expected.

  - Preview and Apply Changes: Uses Pulumi to preview and apply any changes to your infrastructure.

  - Cleanup: Optionally destroys the stack after tests or deployment to manage costs and maintain a clean environment.

By incorporating this workflow, you ensure that your Pulumi infrastructure is continuously integrated and deployed with proper validation, significantly improving the reliability and efficiency of your infrastructure management process.
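To reproduce the same sequence locally before pushing, the steps can be expressed as an ordered list of commands. A minimal sketch using Python's `subprocess` module; `build_pipeline` and `run_pipeline` are hypothetical names, the commands mirror those in the workflow below (`pytest`, `pulumi preview`, `pulumi up --yes`, `pulumi destroy --yes`), and it assumes the Pulumi CLI is installed and already logged in. With `dry_run=True` it only prints the commands.

```python
import shlex
import subprocess
from typing import List


def build_pipeline(stack: str = "dev", destroy: bool = True) -> List[List[str]]:
    """Return the workflow's commands in execution order."""
    commands = [
        ["pulumi", "stack", "select", stack],
        ["pytest"],
        ["pulumi", "preview", "--stack", stack],
        ["pulumi", "up", "--yes", "--stack", stack],
        ["pulumi", "stack", "output", "--stack", stack],
    ]
    if destroy:
        # Optional cleanup step, as in the workflow, to avoid lingering costs.
        commands.append(["pulumi", "destroy", "--yes", "--stack", stack])
    return commands


def run_pipeline(commands: List[List[str]], dry_run: bool = True) -> None:
    """Run each command in order and stop at the first failure."""
    for cmd in commands:
        print("$", shlex.join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit code,
            # so 'pulumi up' never runs after failing tests.
            subprocess.run(cmd, check=True)


run_pipeline(build_pipeline(stack="dev"), dry_run=True)
```

Ordering the tests before `pulumi preview` and `pulumi up` follows the same idea as the CI workflow: a failing test stops the run before any infrastructure is changed.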

Example: Deploy infrastructure with Pulumi

name: Pulumi Deployment

on:

push:

branches:

- main

env:

# Environment variables for AWS credentials and private key.

AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}

AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

AWS_DEFAULT_REGION: ${{ secrets.AWS_DEFAULT_REGION }}

PRIVATE_KEY: ${{ secrets.PRIVATE_KEY }}

jobs:

pulumi-deploy:

runs-on: ubuntu-latest

environment: dev

steps:

- name: Checkout code

uses: actions/checkout@v3

# Check out the repository code to the runner.

- name: Configure AWS credentials

uses: aws-actions/configure-aws-credentials@v3

with:

aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}

aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

aws-region: eu-central-1

# Set up AWS credentials for use in subsequent actions.

- name: Set up SSH key

run: |

mkdir -p ~/.ssh

echo "${{ secrets.SSH_PRIVATE_KEY }}" > ~/.ssh/XYZ.pem

chmod 600 ~/.ssh/XYZ.pem

# Create an SSH directory, add the private SSH key, and set permissions.

- name: Set up Python

uses: actions/setup-python@v4

with:

python-version: '3.9'

# Set up Python 3.9 environment for running Python-based tasks.

- name: Set up Node.js

uses: actions/setup-node@v3

with:

node-version: '14'

# Set up Node.js 14 environment for running Node.js-based tasks.

- name: Install project dependencies

run: npm install

working-directory: .

# Install Node.js project dependencies specified in `package.json`.

- name: Install Pulumi

run: npm install -g pulumi

# Install the Pulumi CLI globally.

- name: Install dependencies

run: |

python -m pip install --upgrade pip

pip install -r requirements.txt

working-directory: .

# Upgrade pip and install Python dependencies from `requirements.txt`.

- name: Login to Pulumi

run: pulumi login

env:

PULUMI_ACCESS_TOKEN: ${{ secrets.PULUMI_ACCESS_TOKEN }}

# Log in to Pulumi using the access token stored in secrets.

- name: Set Pulumi configuration for tests

run: pulumi config set aws:region eu-central-1 --stack dev

# Set Pulumi configuration to specify AWS region for the `dev` stack.

- name: Pulumi stack select

run: pulumi stack select dev

working-directory: .

# Select the `dev` stack for Pulumi operations.

- name: Run tests

run: |

pulumi config set aws:region eu-central-1

pytest

working-directory: .

# Set AWS region configuration and run tests using pytest.

- name: Preview Pulumi changes

run: pulumi preview --stack dev

working-directory: .

# Preview the changes that Pulumi will apply to the `dev` stack.

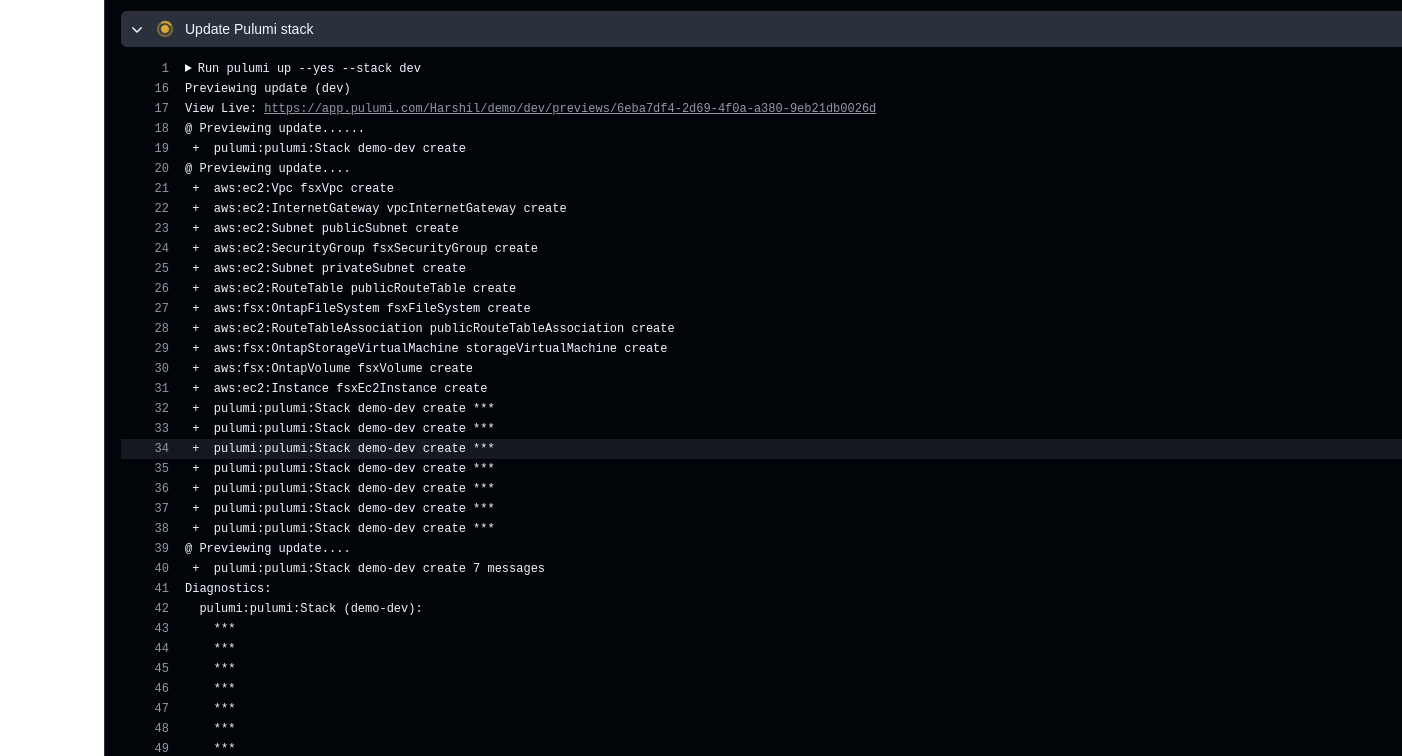

- name: Update Pulumi stack

run: pulumi up --yes --stack dev

working-directory: .

# Apply the changes to the `dev` stack with Pulumi.

- name: Pulumi stack output

run: pulumi stack output

working-directory: .

# Retrieve and display outputs from the Pulumi stack.

- name: Cleanup Pulumi stack

run: pulumi destroy --yes --stack dev

working-directory: .

# Destroy the `dev` stack to clean up resources.

- name: Pulumi stack output (after destroy)

run: pulumi stack output

working-directory: .

# Retrieve and display outputs from the Pulumi stack after destruction.

- name: Logout from Pulumi

run: pulumi logout

# Log out from the Pulumi session.Output:

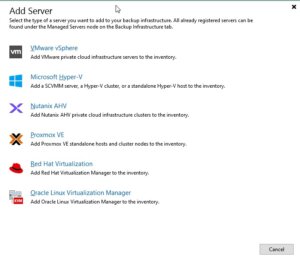

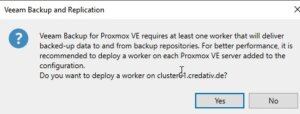

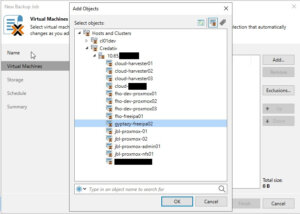

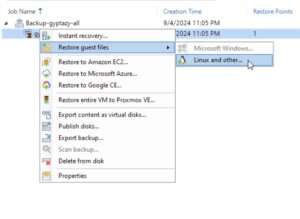

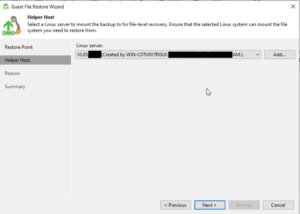
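The `Pulumi stack output` step only prints the outputs to the job log. If a later step needs them, for example a public IP for a smoke test, `pulumi stack output --json` emits all outputs as a single JSON object. A minimal sketch for consuming it from Python; `read_stack_outputs` and `require_outputs` are hypothetical helpers, and the output names `public_ip` and `public_dns` are assumptions, since the actual names depend on the `pulumi.export` calls in your program.

```python
import json
import subprocess
from typing import Any, Dict, Iterable


def read_stack_outputs(stack: str = "dev") -> Dict[str, Any]:
    """Return all stack outputs as a dict via `pulumi stack output --json`."""
    result = subprocess.run(
        ["pulumi", "stack", "output", "--json", "--stack", stack],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


def require_outputs(outputs: Dict[str, Any], names: Iterable[str]) -> None:
    """Raise if any expected output is missing, e.g. after a failed 'pulumi up'."""
    missing = [name for name in names if name not in outputs]
    if missing:
        raise KeyError(f"Missing stack outputs: {', '.join(missing)}")


# Example with a captured JSON document instead of a live stack
# (hypothetical output names, values taken from the mocks above):
sample = json.loads(
    '{"public_ip": "203.0.113.12", '
    '"public_dns": "ec2-203-0-113-12.compute-1.amazonaws.com"}'
)
require_outputs(sample, ["public_ip", "public_dns"])
print(sample["public_ip"])
```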

Veeam & Proxmox VE

Veeam has made a strategic move by integrating the open-source virtualization solution Proxmox VE (Virtual Environment) into its portfolio. Signaling its commitment to the evolving needs of the open-source community and the open-source virtualization market, this integration positions Veeam as a forward-thinking player in the industry, ready to support the rising tide of open-source solutions. The combination of Veeam’s data protection solutions with the flexibility of Proxmox VE’s platform offers enterprises a compelling alternative that promises cost savings and enhanced data security.

With Proxmox VE, one of the most important and most frequently requested open-source hypervisors is now natively supported, and this could well mark a turning point in the virtualization market!

Opportunities for Open-Source Virtualization