There are two ways to authenticate as a client to Icinga2: with a username and password, or with client certificates. For automated queries against the Icinga2 API, client certificates are not only the more secure option but also much more practical to implement on the client side.

Unfortunately, the official Icinga2 documentation does not describe the exact certificate creation process. Here is a short guide:

After installing Icinga2 the API feature has to be activated first:

icinga2 feature enable api

The next step is to configure the Icinga2 node as master. The easiest way to do this is with the “node wizard” program:

icinga2 node wizard

Icinga2 creates the necessary CA certificates, which are later used to sign the client certificates. Now the client certificate can be created:

icinga2 pki new-cert --cn --key .key --csr .csr

The parameter cn stands for the so-called common-name. This is the name used in the Icinga2 user configuration to assign the user certificate to the user. Usually the common name is the FQDN. In this scenario, however, this name is freely selectable. All other names can also be freely chosen, but it is recommended to use a name that suggests that the three files belong together.

Now the certificate has to be signed by the Icinga2 CA:

icinga2 pki sign-csr --csr .csr --cert .crt

Finally, the API user must be created in the file “api-user.conf”, which is located in a subfolder of the Icinga2 configuration directory:

object ApiUser {

client_cn =

permissions = []

}

For a detailed explanation of how permissions are assigned to the user, it is worth taking a look at the documentation.

Last but not least, Icinga2 has to be restarted. The user can then access the Icinga2 API without entering a username and password, provided the client certificate and key are passed along with the query.
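The certificate steps above can be collected in a small helper. The following is only a sketch: the function name and the client name "monitoring-client" are hypothetical, and the function merely prints the two pki commands from this guide for a chosen base name so they can be reviewed before being run on the Icinga2 master.

```shell
#!/usr/bin/env bash
# Sketch: print the certificate workflow for one API client.
# "monitoring-client" is a hypothetical name; choose your own.

# Print the two pki commands from this guide for a given base name.
# Nothing is executed here; the output is meant to be reviewed first.
icinga_client_cert_commands() {
    local name="$1"
    printf 'icinga2 pki new-cert --cn %s --key %s.key --csr %s.csr\n' "$name" "$name" "$name"
    printf 'icinga2 pki sign-csr --csr %s.csr --cert %s.crt\n' "$name" "$name"
}

icinga_client_cert_commands "monitoring-client"
```

With the resulting files, a query could then look roughly like `curl --cert monitoring-client.crt --key monitoring-client.key --cacert ca.crt https://localhost:5665/v1/status`, assuming the default API port 5665 and a copy of the Icinga2 CA certificate in ca.crt.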

You can read up on the services we provide for Icinga2 right here.

This post was originally written by Bernd Borowski.

One would think that microcode updates are basically unproblematic on modern Linux distributions. This is fundamentally correct. Nevertheless, there are always edge cases in which distribution developers may have missed something.

Ubuntu 18.04 LTS “Bionic Beaver” in combination with the XEN Hypervisor is a good example of this when it comes to processor microcode updates.

Ubuntu delivers updated microcode packages for both AMD and Intel. However, these are apparently not applied to the processor.

XEN Microkernel

The reason for this is not too obvious. In XEN, the host system is already paravirtualized and cannot directly influence the CPU for security reasons. Accordingly, manual attempts to change the current microcode fail.

Therefore, the XEN microkernel has to take care of the microcode patching. Instructed correctly, it will do so at boot time.

Customize command line in Grub

For the XEN kernel to patch the microcode of the CPU, it must have access to the microcode files at boot time, and it must also be instructed to apply them. We can achieve the latter through the Grub boot loader configuration by setting a parameter in the kernel command line.

In the case of Ubuntu 18.04 LTS, the Grub configuration files can be found under /etc/default/grub.d/.

There you should find the file xen.cfg. This is of course only the case if the XEN Hypervisor package is installed. Open the config file in your editor and look for the variable GRUB_CMDLINE_XEN_DEFAULT. Add the parameter ucode=scan. If the variable was empty before, the line in xen.cfg should then look like this:

GRUB_CMDLINE_XEN_DEFAULT="ucode=scan"

Customize Initramfs

In addition to this instruction, the microkernel of the XEN hypervisor also needs access to the respective microcode files and, if applicable, to the ‘Intel Microcode Tool’.

While the microcode packages are usually already installed correctly, the Intel tool may have to be installed via sudo apt-get install iucode-tool. Care must also be taken to ensure that the microcode files end up in the initial ramdisk. Ubuntu already ships matching scripts for this purpose.

In the default state, the system tries to select the microcode applicable to the CPU for the InitramFS. Unfortunately, this does not always succeed, so you might have to help here.

With the command sudo lsinitrd /boot/initrd.img-4.15.0-46-generic you can, for example, check which contents are stored in the InitramFS named initrd.img-4.15.0-46-generic. If an Intel system shows AMD microcode there but nothing from Intel, the automatic processor detection went wrong when the initial ramdisk was created.

To get this right, look at the files amd64-microcode and intel-microcode in the directory /etc/default. Each of these two config files has an INITRAMFS variable, AMD64UCODE_INITRAMFS and IUCODE_TOOL_INITRAMFS respectively. The valid values are “no”, “auto” and “early”; the default is “auto”. With “auto”, the system attempts the automatic detection mentioned above. If that does not work, set the value to early in the file matching the manufacturer of your CPU, and to no in the other file. If the manufacturer is Intel, you can additionally set the following variable in the file intel-microcode:

IUCODE_TOOL_SCANCPUS=yes

This causes the scripts to perform advanced CPU detection, so that only the microcode files matching the installed Intel CPU are included in the InitramFS. This helps to avoid an oversized initial ramdisk.

Finalize changes

Both the changes to the grub config and the adjustments to the InitramFS must also be finalized. This is done via

sudo update-initramfs -u
sudo update-grub

A subsequent restart of the hypervisor will then let the XEN microkernel apply the microcode patches provided in the InitramFS to the CPU.
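To compare the state before and after the reboot, it can help to note the microcode revision the system reports. The sketch below assumes an x86 Linux system whose /proc/cpuinfo contains a "microcode" field; whether the host system under XEN reflects the revision applied by the hypervisor there is an assumption that should be checked on your own system. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list the distinct microcode revisions found in a cpuinfo file.
# Defaults to /proc/cpuinfo; another path can be passed, e.g. a saved copy.
microcode_revisions() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # Lines look like "microcode<TAB>: 0xde"; print each revision once.
    awk -F': *' '/^microcode[ \t]*:/ { print $2 }' "$cpuinfo" | sort -u
}

# Only query the live system if the file is readable.
if [ -r /proc/cpuinfo ]; then
    microcode_revisions
fi
```

Running it once before and once after the reboot and comparing the two outputs shows whether the reported revision has changed.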

Is it worth the effort?

Adjustments to the microcode of the processors are important. CPU manufacturers use them to fix defects in the “hardware” they sell. These fixes can be very important to maintain the integrity or security of your server system, as we saw last year when the Spectre and Meltdown bugs were disclosed. Of course, microcode updates can also have a downside, since the fixes for “Spectre” and “Meltdown” come with performance losses. It is therefore necessary to weigh risk against reward when deciding whether to apply the microcode updates. Views on this differ considerably and have to be considered in the context of what the system is used for.

A virtualization host that runs third-party virtual machines has entirely different security requirements than a hypervisor that sits deep inside the internal infrastructure and only runs trusted VMs. Between these two extremes there are, of course, many shades to deal with.

In this article we will look at the highly available operation of PostgreSQL® in a Kubernetes environment, a topic that is certainly of particular interest to many of our PostgreSQL® users.

Together with our partner company MayaData, we will demonstrate below the application possibilities and advantages of the extremely powerful open source project – OpenEBS.

OpenEBS is a freely available storage management system, whose development is supported and backed by MayaData.

We would like to thank Murat Karslioglu from MayaData and our colleague Adrian Vondendriesch for this interesting and helpful article, which also appeared on OpenEBS.io.

PostgreSQL® anywhere — via Kubernetes with some help from OpenEBS and credativ engineering

by Murat Karslioglu, OpenEBS and Adrian Vondendriesch, credativ

Introduction

If you are already running Kubernetes on some form of cloud whether on-premises or as a service, you understand the ease-of-use, scalability and monitoring benefits of Kubernetes — and you may well be looking at how to apply those benefits to the operation of your databases.

PostgreSQL® remains a preferred relational database, and although setting up a highly available Postgres cluster from scratch might be challenging at first, we are seeing patterns emerging that allow PostgreSQL® to run as a first class citizen within Kubernetes, improving availability, reducing management time and overhead, and limiting cloud or data center lock-in.

There are many ways to run high availability with PostgreSQL®; for a list, see the PostgreSQL® Documentation. Some common cloud-native Postgres cluster deployment projects include Crunchy Data’s, Sorint.lab’s Stolon and Zalando’s Patroni/Spilo. Thus far we are seeing Zalando’s operator as a preferred solution in part because it seems to be simpler to understand and we’ve seen it operate well.

Some quick background on your authors:

- OpenEBS is a broadly deployed OpenSource storage and storage management project sponsored by MayaData.

- credativ is a leading open source support and engineering company with particular depth in PostgreSQL®.

In this blog, we’d like to briefly cover how using cloud-native or “container attached” storage can help in the deployment and ongoing operations of PostgreSQL® on Kubernetes. This is the first of a series of blogs we are considering — this one focuses more on why users are adopting this pattern and future ones will dive more into the specifics of how they are doing so.

At the end you can see how to use a Storage Class and a preferred operator to deploy PostgreSQL® with OpenEBS underneath.

If you are curious about what container attached storage, or CAS, is, you can read more from the Cloud Native Computing Foundation (CNCF) here.

Conceptually you can think of CAS as being the decomposition of previously monolithic storage software into containerized microservices that themselves run on Kubernetes. This gives you all the advantages that already led you to run Kubernetes, now applied to the storage and data management layer as well. Of special note is that, like Kubernetes, OpenEBS runs anywhere, so the same advantages apply whether on-premises or on any of the many hosted Kubernetes services.

PostgreSQL® plus OpenEBS


Install OpenEBS

- If OpenEBS is not installed in your K8s cluster, this can be done from here. If OpenEBS is already installed, go to the next step.

- Connect to MayaOnline (Optional): Connecting the Kubernetes cluster to MayaOnline provides good visibility of storage resources. MayaOnline has various support options for enterprise customers.

Configure cStor Pool

- If cStor Pool is not configured in your OpenEBS cluster, this can be done from here. As PostgreSQL® is a StatefulSet application, it requires a single storage replication factor. If you prefer additional redundancy you can always increase the replica count to 3.

During cStor Pool creation, make sure that the maxPools parameter is set to >=3. If a cStor pool is already configured, go to the next step. Sample YAML named openebs-config.yaml for configuring cStor Pool is provided in the Configuration details below.

openebs-config.yaml

#Use the following YAMLs to create a cStor Storage Pool.
# and associated storage class.
apiVersion: openebs.io/v1alpha1
kind: StoragePoolClaim
metadata:
  name: cstor-disk
spec:
  name: cstor-disk
  type: disk
  poolSpec:
    poolType: striped
  # NOTE - Appropriate disks need to be fetched using `kubectl get disks`
  #
  # `Disk` is a custom resource supported by OpenEBS with `node-disk-manager`
  # as the disk operator
  # Replace the following with actual disk CRs from your cluster `kubectl get disks`
  # Uncomment the below lines after updating the actual disk names.
  disks:
    diskList:
    # Replace the following with actual disk CRs from your cluster from `kubectl get disks`
    # - disk-184d99015253054c48c4aa3f17d137b1
    # - disk-2f6bced7ba9b2be230ca5138fd0b07f1
    # - disk-806d3e77dd2e38f188fdaf9c46020bdc
    # - disk-8b6fb58d0c4e0ff3ed74a5183556424d
    # - disk-bad1863742ce905e67978d082a721d61
    # - disk-d172a48ad8b0fb536b9984609b7ee653
---

Create Storage Class

- You must configure a StorageClass to provision cStor volume on a cStor pool. In this solution, we are using a StorageClass to consume the cStor Pool which is created using external disks attached on the Nodes. The storage pool is created using the steps provided in the Configure StoragePool section. In this solution, PostgreSQL® is a deployment. Since it requires replication at the storage level the cStor volume replicaCount is 3. Sample YAML named openebs-sc-pg.yaml to consume cStor pool with cStorVolume Replica count as 3 is provided in the configuration details below.

openebs-sc-pg.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-postgres
  annotations:
    openebs.io/cas-type: cstor
    cas.openebs.io/config: |
      - name: StoragePoolClaim
        value: "cstor-disk"
      - name: ReplicaCount
        value: "3"
provisioner: openebs.io/provisioner-iscsi
reclaimPolicy: Delete
---

Launch and test Postgres Operator

- Clone Zalando’s Postgres Operator.

git clone https://github.com/zalando/postgres-operator.git
cd postgres-operator

Use the OpenEBS storage class

- Edit manifest file and add openebs-postgres as the storage class.

nano manifests/minimal-postgres-manifest.yaml

After adding the storage class, it should look like the example below:

apiVersion: "acid.zalan.do/v1"
kind: postgresql
metadata:
  name: acid-minimal-cluster
  namespace: default
spec:
  teamId: "ACID"
  volume:
    size: 1Gi
    storageClass: openebs-postgres
  numberOfInstances: 2
  users:
    # database owner
    zalando:
    - superuser
    - createdb
    # role for application foo
    foo_user: []
  #databases: name->owner
  databases:
    foo: zalando
  postgresql:
    version: "10"
    parameters:
      shared_buffers: "32MB"
      max_connections: "10"
      log_statement: "all"

Start the Operator

- Run the command below to start the operator

kubectl create -f manifests/configmap.yaml  # configuration
kubectl create -f manifests/operator-service-account-rbac.yaml  # identity and permissions
kubectl create -f manifests/postgres-operator.yaml  # deployment

Create a Postgres cluster on OpenEBS

Optional: The operator can run in a namespace other than default. For example, to use the test namespace, run the following before deploying the operator’s manifests:

kubectl create namespace test
kubectl config set-context $(kubectl config current-context) --namespace=test

1. Run the command below to deploy from the example manifest:

kubectl create -f manifests/minimal-postgres-manifest.yaml

2. It only takes a few seconds to get the persistent volume (PV) for the pgdata-acid-minimal-cluster-0 up. Check PVs created by the operator using the kubectl get pv command:

$ kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                                   STORAGECLASS       REASON   AGE
pvc-8852ceef-48fe-11e9-9897-06b524f7f6ea   1Gi        RWO            Delete           Bound    default/pgdata-acid-minimal-cluster-0   openebs-postgres            8m44s
pvc-bfdf7ebe-48fe-11e9-9897-06b524f7f6ea   1Gi        RWO            Delete           Bound    default/pgdata-acid-minimal-cluster-1   openebs-postgres            7m14s

Connect to the Postgres master and test

1. If it is not already installed, install the psql client:

sudo apt-get install postgresql-client

2. Run the command below and note the hostname and host port.

kubectl get service --namespace default | grep acid-minimal-cluster

3. Run the commands below to connect to your PostgreSQL® DB and test. Replace the [HostPort] below with the port number from the output of the above command:

export PGHOST=$(kubectl get svc -n default -l application=spilo,spilo-role=master -o jsonpath="{.items[0].spec.clusterIP}")

export PGPORT=[HostPort]

export PGPASSWORD=$(kubectl get secret -n default postgres.acid-minimal-cluster.credentials -o 'jsonpath={.data.password}' | base64 -d)

psql -U postgres -c 'create table foo (id int)'

Congrats, you now have the Postgres-Operator and your first test database up and running with the help of cloud-native OpenEBS storage.
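Instead of copying the port by hand in step 2, it could also be read from the service listing. The following is a sketch under assumptions: the function name is hypothetical, and it relies on the default column layout of `kubectl get service` (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE) with a single port on the service.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get service` output on stdin and print the port
# of the service whose NAME column matches the first argument exactly.
# Assumes the PORT(S) column is the fifth field, e.g. "5432/TCP".
service_port() {
    local name="$1"
    awk -v name="$name" '$1 == name { split($5, port, "/"); print port[1] }'
}

# Usage against a running cluster:
#   export PGPORT=$(kubectl get service --namespace default | service_port acid-minimal-cluster)
```

Matching on the exact name avoids picking up related services whose names merely start with acid-minimal-cluster, which a plain grep would also return.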

Partnership and future direction

As this blog indicates, the teams at MayaData / OpenEBS and credativ are increasingly working together to help organizations running PostgreSQL® and other stateful workloads. In future blogs, we’ll provide more hands-on tips.

We are looking for feedback and suggestions on where to take this collaboration. Please provide feedback below or find us on Twitter or on the OpenEBS slack community.

Patroni is a PostgreSQL high availability solution with a focus on containers and Kubernetes. Until recently, the available Debian packages had to be configured manually and did not integrate well with the rest of the distribution. For the upcoming Debian 10 “Buster” release, the Patroni packages have been integrated into Debian’s standard PostgreSQL framework by credativ. They now allow for an easy setup of Patroni clusters on Debian or Ubuntu.

Patroni employs a “Distributed Consensus Store” (DCS) like Etcd, Consul or Zookeeper in order to reliably run a leader election and orchestrate automatic failover. It further allows for scheduled switchovers and easy cluster-wide changes to the configuration. Finally, it provides a REST interface that can be used together with HAProxy in order to build a load balancing solution. Due to these advantages Patroni has gradually replaced Pacemaker as the go-to open-source project for PostgreSQL high availability.

However, many of our customers run PostgreSQL on Debian or Ubuntu systems, and so far Patroni did not integrate well into those. For example, it did not use the postgresql-common framework and its instances were not displayed in pg_lsclusters output as usual.

Integration into Debian

In collaboration with Patroni lead developer Alexander Kukushkin from Zalando, the Debian Patroni package has been integrated into the postgresql-common framework to a large extent over the last months. This was achieved through changes both in Patroni itself and in additional programs in the Debian package. The current version 1.5.5 of Patroni contains all these changes and is now available in Debian “Buster” (testing) for setting up Patroni clusters.

The packages are also available on apt.postgresql.org and thus installable on Debian 9 “Stretch” and Ubuntu 18.04 “Bionic Beaver” LTS for any PostgreSQL version from 9.4 to 11.

The most important part of the integration is the automatic generation of a suitable Patroni configuration with the pg_createconfig_patroni command. It is run similarly to pg_createcluster, with the desired PostgreSQL major version and the instance name as parameters:

pg_createconfig_patroni 11 test

This invocation creates a file /etc/patroni/11-test.yml, using the DCS configuration from /etc/patroni/dcs.yml which has to be adjusted according to the local setup. The rest of the configuration is taken from the template /etc/patroni/config.yml.in which is usable in itself but can be customized by the user according to their needs. Afterwards the Patroni instance is started via systemd similar to regular PostgreSQL instances:

systemctl start patroni@11-test

A simple 3-node Patroni cluster can be created and started with the following few commands, where the nodes pg1, pg2 and pg3 are considered to be hostnames and the local file dcs.yml contains the DCS configuration:

for i in pg1 pg2 pg3; do ssh $i 'apt -y install postgresql-common'; done

for i in pg1 pg2 pg3; do ssh $i 'sed -i "s/^#create_main_cluster = true/create_main_cluster = false/" /etc/postgresql-common/createcluster.conf'; done

for i in pg1 pg2 pg3; do ssh $i 'apt -y install patroni postgresql'; done

for i in pg1 pg2 pg3; do scp ./dcs.yml $i:/etc/patroni; done

for i in pg1 pg2 pg3; do ssh $i 'pg_createconfig_patroni 11 test && systemctl start patroni@11-test'; done

Afterwards, you can get the state of the Patroni cluster via

ssh pg1 'patronictl -c /etc/patroni/11-test.yml list'

+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.111 | Leader | running | 1  |           |
| 11-test | pg2    | 10.0.3.41  |        | stopped |    | unknown   |
| 11-test | pg3    | 10.0.3.46  |        | stopped |    | unknown   |
+---------+--------+------------+--------+---------+----+-----------+

Leader election has happened and pg1 has become the primary. It created its instance with the Debian-specific pg_createcluster_patroni program that runs pg_createcluster in the background. Then the two other nodes clone from the leader using the pg_clonecluster_patroni program which sets up an instance using pg_createcluster and then runs pg_basebackup from the primary. After that, all nodes are up and running:

+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.111 | Leader | running | 1  | 0         |
| 11-test | pg2    | 10.0.3.41  |        | running | 1  | 0         |
| 11-test | pg3    | 10.0.3.46  |        | running | 1  | 0         |
+---------+--------+------------+--------+---------+----+-----------+

The well-known Debian postgresql-common commands work as well:

ssh pg1 'pg_lsclusters'
Ver Cluster Port Status Owner    Data directory              Log file
11  test    5432 online postgres /var/lib/postgresql/11/test /var/log/postgresql/postgresql-11-test.log
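For scripting, the current leader can be picked out of the patronictl table shown earlier. This is a minimal sketch that assumes the column layout printed by the Patroni version used here (Cluster, Member, Host, Role, ...); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read `patronictl list` table output on stdin and print the
# member whose Role column is "Leader".
# Splitting on "|" makes Member the third and Role the fifth field.
patroni_leader() {
    awk -F'|' '{
        member = $3; role = $5
        gsub(/[ \t]/, "", member)   # strip the padding around the cell
        gsub(/[ \t]/, "", role)
        if (role == "Leader") print member
    }'
}

# Usage against a running cluster:
#   ssh pg1 'patronictl -c /etc/patroni/11-test.yml list' | patroni_leader
```

During a failover there may briefly be no leader at all, in which case the function prints nothing, so callers should handle empty output.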

Failover Behaviour

If the primary is abruptly shut down, its leader token will expire after a while and Patroni will eventually initiate failover and a new leader election:

+---------+--------+-----------+------+---------+----+-----------+
| Cluster | Member | Host      | Role | State   | TL | Lag in MB |
+---------+--------+-----------+------+---------+----+-----------+
| 11-test | pg2    | 10.0.3.41 |      | running | 1  | 0         |
| 11-test | pg3    | 10.0.3.46 |      | running | 1  | 0         |
+---------+--------+-----------+------+---------+----+-----------+

[...]

+---------+--------+-----------+--------+---------+----+-----------+
| Cluster | Member | Host      | Role   | State   | TL | Lag in MB |
+---------+--------+-----------+--------+---------+----+-----------+
| 11-test | pg2    | 10.0.3.41 | Leader | running | 2  | 0         |
| 11-test | pg3    | 10.0.3.46 |        | running | 1  | 0         |
+---------+--------+-----------+--------+---------+----+-----------+

[...]

+---------+--------+-----------+--------+---------+----+-----------+
| Cluster | Member | Host      | Role   | State   | TL | Lag in MB |
+---------+--------+-----------+--------+---------+----+-----------+
| 11-test | pg2    | 10.0.3.41 | Leader | running | 2  | 0         |
| 11-test | pg3    | 10.0.3.46 |        | running | 2  | 0         |
+---------+--------+-----------+--------+---------+----+-----------+

The old primary will rejoin the cluster as standby once it is restarted:

+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.111 |        | running |    | unknown   |
| 11-test | pg2    | 10.0.3.41  | Leader | running | 2  | 0         |
| 11-test | pg3    | 10.0.3.46  |        | running | 2  | 0         |
+---------+--------+------------+--------+---------+----+-----------+

[...]

+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.111 |        | running | 2  | 0         |
| 11-test | pg2    | 10.0.3.41  | Leader | running | 2  | 0         |
| 11-test | pg3    | 10.0.3.46  |        | running | 2  | 0         |
+---------+--------+------------+--------+---------+----+-----------+

If a clean rejoin is not possible due to additional transactions on the old timeline the old primary gets re-cloned from the current leader. In case the data is too large for a quick re-clone, pg_rewind can be used. In this case a password needs to be set for the postgres user and regular database connections (as opposed to replication connections) need to be allowed between the cluster nodes.

Creation of additional Instances

It is also possible to create further clusters with pg_createconfig_patroni. One can either assign a PostgreSQL port explicitly via the --port option, or let pg_createconfig_patroni assign the next free port, as is known from pg_createcluster:

for i in pg1 pg2 pg3; do ssh $i 'pg_createconfig_patroni 11 test2 && systemctl start patroni@11-test2'; done

ssh pg1 'patronictl -c /etc/patroni/11-test2.yml list'

+----------+--------+-----------------+--------+---------+----+-----------+
| Cluster  | Member | Host            | Role   | State   | TL | Lag in MB |
+----------+--------+-----------------+--------+---------+----+-----------+
| 11-test2 | pg1    | 10.0.3.111:5433 | Leader | running | 1  | 0         |
| 11-test2 | pg2    | 10.0.3.41:5433  |        | running | 1  | 0         |
| 11-test2 | pg3    | 10.0.3.46:5433  |        | running | 1  | 0         |
+----------+--------+-----------------+--------+---------+----+-----------+

Ansible Playbook

In order to easily deploy a 3-node Patroni cluster, we have created an Ansible playbook on GitHub. It automates the installation and configuration of PostgreSQL and Patroni on the three nodes, as well as the DCS server on a fourth node.

Questions and Help

Do you have any questions or need help? Feel free to write to info@credativ.com.

The migration of IT landscapes to cloud environments is common today. However, the question often remains how to monitor these newly created infrastructures and react to failures. For traditional infrastructure, monitoring tools such as Icinga are used. These tools are often deployed using configuration management methods, such as Ansible or Puppet.

In cloud environments, this is somewhat different. On the one hand, virtual machines are certainly used here, where these methods would be applicable. On the other hand, modern cloud environments also thrive on abstracting away from the virtual machine and offering services, for example as SaaS, in a decoupled manner. But how does one monitor such services?

Manual configuration of an Icinga2 monitoring system is not advisable, as these cloud environments, in particular, are characterized by high dynamism. The risk of not monitoring a service that was quickly added but later becomes important is high.

The Icinga2 and IcingaWeb2 project responded to these requirements for dynamic monitoring environments some time ago with the Director plugin. The Director plugin, using so-called importer modules, is capable of dynamically reading resources contained in various environments, such as “VMware vSphere” or “Amazon AWS”. These can then be automatically integrated into classic Icinga monitoring via rules.

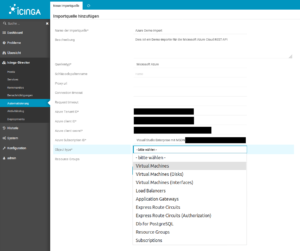

Sponsored by DPD Deutschland GmbH, credativ GmbH is developing an extension module for the IcingaWeb2 Director, which allows dynamic integration of resources from the “Microsoft Azure Cloud”. This module queries the Microsoft Azure REST API and returns various resource types, which can then be automatically added to your monitoring via the Director. The module is already in use by our customers and will be further developed according to demand.

Ten resource types are already supported

Currently, the Azure Importer Module supports the following resource types:

- Virtual Machines

- Virtual Disks

- Network Interfaces for Virtual Machines

- Load Balancers

- Application Gateways

- Microsoft.DBforPostgreSQL® servers (SaaS)

- Express Route Circuits

- Authorizations for Express Route Circuits

- Resource Groups

- Subscriptions

The module is now available as an open source solution

The respective tested versions are available here for download as a release.

Do you require support with monitoring?

Key Takeaways

- The migration of IT landscapes to cloud environments requires effective monitoring.

- The Icinga Director plugin enables the automatic integration of resources from various cloud environments.

- credativ GmbH is developing an Azure Importer Module for IcingaWeb2 that dynamically registers Microsoft Azure resources.

- The module already supports ten different resource types and is available as open source under the MIT license.

- Existing customers of credativ GmbH can quickly request assistance for monitoring support.

The message broker RabbitMQ is highly popular in business environments. Our customers often use the software to bridge different systems, departments, or data pools. Similarly, a message broker like RabbitMQ can be used to absorb short-term load peaks and pass them on to backend systems in smoothed form. Operating the message broker, which is written in Erlang, is straightforward for most system administrators. In fact, the system is very stable in normal operation. With some of our customers, I see systems where the core component RabbitMQ operates largely maintenance-free.

This is a good thing!

Typically, a message broker is a very central component of an IT landscape, which means that unplanned downtime for such a component should be avoided at all costs. Planned downtime for such a component often requires coordination with many departments or business units and is therefore only feasible with considerable effort.

Based on Erlang/OTP, RabbitMQ provides the tools to operate durably and stably. Due to its Erlang/OTP foundation, RabbitMQ is also very easy to use in cluster operation, which can increase availability. To set up a cluster, not much more is needed than a shared secret (the so-called Erlang cookie) and appropriately configured names for the individual nodes. Service discovery systems can be used, but fundamentally, names resolvable via DNS or host files are sufficient.

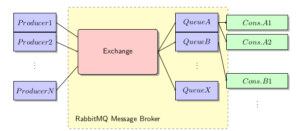

RabbitMQ Concept

In RabbitMQ, producers communicate directly with an exchange. One or more queues are connected to an exchange, from which consumers, in turn, read messages. RabbitMQ, as a message broker, ensures that messages are routed according to specifications and distributed to the queues. It ensures that no message from a queue is consumed twice, and that persistent messages under its responsibility are not lost.

In cluster operation, it is irrelevant whether the queue actually resides on the node to which the producer or consumer is connected. The internal routing organizes this independently.

Queues can be persisted to disk or used exclusively “in-memory”. The latter are naturally deleted by a restart of RabbitMQ. The same applies to content within queues. These can also be delivered by the producer “in-memory” or persistently.

Furthermore, various exchange types can be configured, and priorities and Time-to-Live features can be set, which further expand the possibilities of RabbitMQ’s use.

Problem Areas in Daily SysAdmin Operations

Provided that appropriate sizing was considered when selecting the system for operating a RabbitMQ node – especially memory for the maximum message backlog to be compensated – there is little maintenance work involved in operating RabbitMQ. The system administrator may also be involved with the management and distribution of queues, etc., within RabbitMQ for organizational reasons, but this is fundamentally a task of application administration and highly use-case specific.

Avoid Split-Brain

In fact, there is little for the system administrator to consider in daily operations with RabbitMQ. In cluster operation, it can happen that individual nodes need to be shut down for maintenance purposes, or that nodes have diverged due to a node failure or a network partition. If configured correctly, the latter should not occur. I will dedicate a separate article to proper cluster configuration. Here, care should be taken not to keep the default behavior for network partitions (“split-brain”), but to choose a sensible alternative such as “Pause-Minority”, which enables a quorum-based decision among the remaining network segments. One of the upcoming versions will likely also bring a Raft implementation for clusters.
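The quorum idea behind “Pause-Minority” can be illustrated with a small sketch. It is not RabbitMQ code and only mirrors the rule that a node keeps running if it can still see a strict majority of the cluster; the function name is hypothetical. In the new-style rabbitmq.conf, the mode itself is selected with the setting cluster_partition_handling = pause_minority.

```shell
#!/usr/bin/env bash
# Sketch of the pause-minority rule, for illustration only.
# Succeeds if a partition that can see `reachable` out of `total`
# cluster nodes (counting itself) holds a strict majority.
has_majority() {
    local reachable="$1" total="$2"
    [ $(( reachable * 2 )) -gt "$total" ]
}

# In a 3-node cluster, the side with 2 nodes keeps running ...
if has_majority 2 3; then echo "2 of 3: keep running"; fi
# ... while the isolated node pauses itself.
if ! has_majority 1 3; then echo "1 of 3: pause"; fi
```

The rule also shows why an odd number of nodes is preferable: with an even split such as 2 of 4, neither side has a strict majority and both would pause.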

Upgrades Can Pose Challenges

A problem that will eventually arise in daily operations is the question of upgrading RabbitMQ from a major or minor version to a higher one. Unfortunately, this is not straightforward and requires preparatory work and planning, especially in cluster operation. I plan to cover this topic in detail in a later blog post.

It is important to note in advance: Cluster upgrades are only possible for patch-level changes, for example, from version 3.7.1 to version 3.7.3 or similar. However, caution is advised here as well; even at patch levels, there are isolated version jumps that cannot be easily mixed within the cluster. Information on this can be found in the release notes, which should definitely be consulted beforehand.
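As a rough pre-check before planning a cluster upgrade, the rule above can be expressed as a small helper. This is only a sketch: it assumes three-part version strings such as 3.7.1, the function names are invented here, and it cannot replace reading the release notes, since individual patch releases may still be incompatible.

```python
def parse_version(version: str) -> tuple:
    """Split a version such as '3.7.1' into a tuple of integers."""
    return tuple(int(part) for part in version.split("."))


def is_patch_level_change(current: str, target: str) -> bool:
    """True if only the patch level increases (same major and minor version)."""
    cur, tgt = parse_version(current), parse_version(target)
    return cur[:2] == tgt[:2] and tgt[2] > cur[2]


print(is_patch_level_change("3.7.1", "3.7.3"))
print(is_patch_level_change("3.7.1", "3.8.0"))
```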

Single-node servers can usually be easily upgraded to a more recent version while shut down. However, the notes in all release notes for the affected major, minor, and patch levels should be read. Intermediate steps may be necessary here.

For all RabbitMQ upgrades, it must also always be considered that, in addition to the message broker, the associated Erlang/OTP platform may also need to be adjusted. This is not to be expected for patch-level changes.

What can we do for you?

We would be pleased to advise you on the concept of your RabbitMQ deployment and support you in choosing whether this tool or perhaps other popular open-source systems such as Apache Kafka are suitable for you.

Should something not work or if you require support in operating these components, please let us know. We look forward to your call.

Guide: RabbitMQ Cheat Sheet

To make the work easier for the interested system administrator, I have compiled a so-called CheatSheet for RabbitMQ. This work was supported by one of our customers, who kindly gave us permission to publish it. Therefore, you can download the current copy here:

Download RabbitMQ Cheat Sheet as PDF file.

Further information on RabbitMQ can also be found here:

VXLAN stands for “Virtual eXtensible Local Area Network”. Standardized in RFC 7348 in August 2014, VXLAN is also available today as a virtual network interface in current Linux kernels. But what is VXLAN?

What is VXLAN?

When one reads the keywords “Virtual” and “LAN”, most rightly think of VLAN. Here, a large physical network is logically divided into smaller networks. For this purpose, the corresponding connections are marked with VLAN tags. This can be done either at the sending host (tagged VLAN) or, for example, by the switch (port-based VLAN). These markings are already made at Layer 2, the Data Link Layer in the OSI model. This allows them to be effectively evaluated at a very low network level, thereby suppressing unwanted communication in the network. The IEEE 802.1Q standard defines a 12-bit width for the VLAN tag, thus fundamentally resulting in 4096 possible VLAN networks on an Ethernet installation.

VXLAN was developed to circumvent this limitation. With VXLAN, a transmission technology based on OSI Layer 3 or Layer 4 is introduced, which creates virtual Layer 2 environments. With VXLAN logic, approximately 16 million (2 to the power of 24) VXLAN Layer 2 networks are possible, which in turn can map 4096 VLAN network segments. This should initially be sufficient even for very large Ethernet installations.
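The numbers mentioned above follow directly from the width of the respective identifier fields. A short calculation, with constant names chosen for this example only:

```python
VLAN_ID_BITS = 12    # width of the IEEE 802.1Q VLAN tag
VXLAN_ID_BITS = 24   # width of the VXLAN ID ("2 to the power of 24")

vlan_ids = 2 ** VLAN_ID_BITS
vxlan_ids = 2 ** VXLAN_ID_BITS

print(vlan_ids)    # 4096
print(vxlan_ids)   # 16777216
```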

How can one set up such a VXLAN?

A VXLAN interface can be set up, for example, with

ip link add vxlan0 type vxlan id 42 group 239.1.1.1 dev eth0

This command creates the device “vxlan0” as a VXLAN with ID 42 on the physical interface “eth0”. Multiple VXLANs are distinguished based on their ID. The instruction “group 239.1.1.1” specifies the multicast group over which the members of this VXLAN communicate.

Using the command line

ip addr add 10.0.0.1/24 dev vxlan0

one assigns a fixed IP address to the newly created VXLAN network interface, in this example 10.0.0.1.

The command

ip link set up dev vxlan0

activates the newly created network interface “vxlan0”. This creates a virtual network based on IP multicast on the physical interface “eth0”.

The interface “vxlan0” now behaves in principle exactly like an Ethernet interface. All other computers that select VXLAN ID 42 and multicast group 239.1.1.1 will thus become part of this virtual Ethernet. On top of this, one could in turn set up various VLANs. For example, the command

ip link add link vxlan0 name vlan1 type vlan id 1

sets up a new VLAN on the VXLAN interface. In this case, one would not need to assign an IP address to the VXLAN interface.
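If several hosts are to join the same VXLAN, the three setup commands shown above can be generated from a few parameters. The following is a minimal sketch that only builds the command strings and does not execute them; the function `vxlan_commands` and its parameter names are invented for this example.

```python
def vxlan_commands(name, vxlan_id, group, dev, address):
    """Build the ip commands for a multicast VXLAN interface as plain strings."""
    return [
        f"ip link add {name} type vxlan id {vxlan_id} group {group} dev {dev}",
        f"ip addr add {address} dev {name}",
        f"ip link set up dev {name}",
    ]


for command in vxlan_commands("vxlan0", 42, "239.1.1.1", "eth0", "10.0.0.1/24"):
    print(command)
```

Only the address would differ from host to host; VXLAN ID and multicast group must be identical on all members.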

What are its practical applications?

Fundamentally, VXLAN is suitable for use cases in very large Ethernets, such as in cloud environments, to overcome the 4096 VLAN limit.

Use as a Test Environment for Network Services

Alternatively, VXLAN can be very effectively used in test environments or virtualized environments where full control over the Layer 2 network to be used is required. If one wishes to test network infrastructure components or their configuration, such a completely isolated network is ideal. This also allows one to bypass control structures introduced by virtualization environments, which are particularly obstructive for such tests. My first practical experience with VXLAN was during the testing of a more complex DHCP setup on several virtual machines in OpenStack. On the network interfaces provided by OpenStack, the test was impossible for me, as I had only limited access to the network configurations on the virtualization host side, and OpenStack filters out DHCP packets from the network stream. This problem could be elegantly circumvented by setting up the test network on VXLAN. At the same time, this ensured that the DHCP test had no impact on other parts of the OpenStack network. Furthermore, the Ethernet connection provided by OpenStack remained permanently usable for maintenance and monitoring purposes.

For example, in unicast operation, scenarios are also conceivable where a Layer 2 network spanned over VXLAN is transported across multiple locations. There are switches or routers that support VXLAN and can serve as VTEPs (VXLAN Tunnel Endpoints). These can be used, for example, to connect two multicast VXLAN networks via unicast between the VTEPs, thereby transparently spanning a large VXLAN.

Is VXLAN secure?

VXLAN adds another Layer 2 infrastructure on top of an existing Ethernet infrastructure. This operates with UDP packets in unicast or multicast. Encryption at the VXLAN level is not provided and would need to be handled by higher protocol layers if required. IPSec solutions or, for example, TLS are options here. Fundamentally, VXLAN is at a comparable security level to most other Layer 2 network protocols.

Possible Issues?

With VXLAN, users may encounter a “familiar acquaintance” in the form of MTU issues. A standard Ethernet frame has a length of 1,518 bytes. After deducting the Ethernet header and checksum, 1,500 bytes remain for payload. VXLAN encapsulation adds 50 bytes of overhead, which reduces the available payload to 1,450 bytes. This should be considered when setting the MTU. So-called Jumbo Frames are affected accordingly. Here, the additional 50 bytes must also be taken into account.
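The 50 bytes can be broken down as follows. This sketch assumes an IPv4 underlay and an untagged inner frame; with IPv6 or additional tags the overhead is larger. The constant and function names are chosen for this example.

```python
# Bytes that have to fit into the MTU of the physical network
# in addition to the payload of the virtual network (assumption: IPv4 underlay).
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14

VXLAN_OVERHEAD = (
    OUTER_IPV4_HEADER + OUTER_UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET_HEADER
)


def vxlan_mtu(underlay_mtu: int) -> int:
    """MTU to configure on the VXLAN interface for a given physical MTU."""
    return underlay_mtu - VXLAN_OVERHEAD


print(vxlan_mtu(1500))  # standard Ethernet
print(vxlan_mtu(9000))  # jumbo frames
```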

What does credativ offer?

We are pleased to support and advise you on the design and operation of your network environment. Among other things, we work in the areas of DevOps, network infrastructure, and network design. credativ GmbH has employees with expertise in highly complex network setups for data centers on real hardware as well as in virtual environments. Our focus is on implementation with open-source software.

In this blog, we describe the integration of Icinga2 into Graphite and Grafana on Debian.

What is Graphite

Graphite stores performance data over a configurable period. Services can send metrics to Graphite via a defined interface, which are then stored in a structured manner for the desired period. Possible examples of such metrics include CPU utilization or web server access numbers. Graphs can now be generated from the various metrics via Graphite’s integrated web interface. This allows us to detect and observe changes in values over different periods. A good example of such a trend analysis is disk space utilization. With the help of a trend graph, it is easy to see at what rate the space requirement is growing and approximately when a storage replacement will be necessary.
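To give an impression of the interface, here is a minimal sketch of how a service could hand a single value to Graphite using Carbon's plaintext protocol, where each metric is one line of the form "path value timestamp". The metric name, host and helper functions are assumptions for this example; port 2003 is Carbon's default plaintext port.

```python
import socket
import time


def carbon_line(path: str, value: float, timestamp: int) -> str:
    """Format one metric in Carbon's plaintext format."""
    return f"{path} {value} {timestamp}\n"


def send_metric(path: str, value: float, host: str = "127.0.0.1", port: int = 2003) -> None:
    """Send a single metric to a Carbon daemon (host and port are assumptions)."""
    line = carbon_line(path, value, int(time.time()))
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(line.encode("ascii"))


# Hypothetical metric name; only formatted here, not sent.
print(carbon_line("servers.web01.cpu.load", 0.42, 1700000000), end="")
```

Calling `send_metric("servers.web01.cpu.load", 0.42)` would require a running Carbon daemon on the given host.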

What is Grafana

Although Graphite offers its own web interface, it is not particularly attractive or flexible. This is where Grafana steps in.

Grafana is a frontend for various metric storage systems. For example, it supports Graphite, InfluxDB, and OpenTSDB. Grafana offers an intuitive interface for creating representative graphs from metrics. It also has a variety of functions to optimize the appearance and display of graphs. Subsequently, graphs can be grouped into dashboards. Parameterization of graphs is also possible. This also allows you to display only a graph from a specific host.

Installing Icinga2

At this point, only the installation required for Graphite is described. Current versions of Icinga2 packages for Debian can be obtained directly from the Debmon Project. The Debmon Project, run by official Debian package maintainers, provides current versions of various monitoring tools for Debian releases in a timely manner. To integrate these packages, the following commands are required:

# add debmon
cat <<EOF >/etc/apt/sources.list.d/debmon.list
deb http://debmon.org/debmon debmon-jessie main
EOF
# add debmon key
wget -O - http://debmon.org/debmon/repo.key 2>/dev/null | apt-key add -
# update repos
apt-get update

Next, we can install Icinga2:

apt-get install icinga2

Installing Graphite and Graphite-Web

After Icinga2 is installed, Graphite and Graphite-web can also be installed.

# install packages for icinga2 and graphite-web and carbon
apt-get install icinga2 graphite-web graphite-carbon libapache2-mod-wsgi apache2

Configuring Icinga2 with Graphite

Icinga2 must be configured to export all collected metrics to Graphite. The Graphite component that receives this data is called “Carbon”. In our example installation, Carbon runs on the same host as Icinga2 and also uses the default port. For this reason, no further configuration of Icinga2 is necessary; it is sufficient to enable the export.

The following command does this:

icinga2 feature enable graphite

Next, Icinga2 must be restarted:

service icinga2 restart

If the Carbon server runs on a different host or a different port, the Icinga2 configuration can be adjusted in the file /etc/icinga2/features-enabled/graphite.conf. Details can be found in the Icinga2 documentation.

If the configuration was successful, a number of files should appear shortly in “/var/lib/graphite/whisper/icinga”. If this is not the case, you should check the Icinga2 log file (located in “/var/log/icinga2/icinga2.log”).

Configuring Graphite-web

Grafana uses Graphite’s web frontend as an interface for the metrics stored by Graphite. For this reason, it is necessary to configure Graphite-web correctly. For performance reasons, we operate Graphite-web as a WSGI module. A number of configuration steps are required for this:

- First, we create a user database for Graphite-web. Since we will not have many users, we use sqlite as the backend for our user data at this point. For this purpose, we execute the following commands, which initialize the user database and assign it to the user under which the web frontend runs:

graphite-manage syncdb
chown _graphite:_graphite /var/lib/graphite/graphite.db

- Next, we activate the WSGI module in Apache:

a2enmod wsgi

- For simplicity, the web interface should run in its own virtual host and on its own port. To ensure Apache also listens on this port, we add the line “Listen 8000” to the file “/etc/apache2/ports.conf”.

- The Graphite Debian package already provides an Apache configuration file that we can use for our purposes, with slight modifications.

cp /usr/share/graphite-web/apache2-graphite.conf /etc/apache2/sites-available/graphite.conf

To ensure the virtual host also uses port 8000, we must replace the line

<VirtualHost *:80>

with

<VirtualHost *:8000>

- Then we activate the new virtual host via

a2ensite graphite

and restart Apache:

systemctl restart apache2

- Graphite-web should now be accessible at http://YOURIP:8000/. If this is not the case, the Apache log files under “/var/log/apache2/” could provide valuable information.

Configuring Grafana

Grafana is currently not included in Debian. However, the author offers an Apt repository through which Grafana can be installed. Even if the repository refers to Wheezy, the packages also work under Debian Jessie.

The repository is only accessible via HTTPS. For this reason, HTTPS support for apt must first be installed:

apt-get install apt-transport-https

Next, the repository can be integrated.

# add repo (package for wheezy works on jessie)
cat <<EOF >/etc/apt/sources.list.d/grafana.list
deb https://packagecloud.io/grafana/stable/debian/ wheezy main
EOF
# add key
curl -s https://packagecloud.io/gpg.key | sudo apt-key add -
# update repos
apt-get update

Subsequently, the package can be installed:

apt-get install grafana

For Grafana to run, we still need to enable and start the service:

systemctl enable grafana-server.service
systemctl start grafana-server

Grafana is now accessible at http://YOURIP:3000/. The default username and password in our example are both “admin”. This password should, of course, be replaced with a secure password at the next opportunity.

Next, Grafana must be configured to use Graphite as a data source. For simplicity, the configuration is explained via a screencast.


After successfully integrating Graphite as a data source, we can create our first graph. There is also a short screencast for this here.


Congratulations, you have now successfully installed and configured Icinga2, Graphite, and Grafana. For all further steps, please refer to the documentation of the respective projects:

The page postfix.org/OVERVIEW.html presents and explains the individual parts of Postfix and their functions: services, queues, etc. Although some of the relationships are outlined in schematic ASCII diagrams, there is no single diagram of the whole system. To facilitate understanding of Postfix and make it easier to get started with the topic, we have created such an overview, as far as possible based on the information on that page:

The graphic is also available as a PDF.

However, it should be noted that the diagram does not take into account all connections and relationships. For example, the flush process is greatly simplified, as communication with smtpd and qmgr is not shown. The integration of policy daemons, such as for greylisting, is also missing.

The sources in ODG format are stored in a GitHub repository. Unfortunately, however, the paths in the ODG file do not seem to be particularly stable. Many thanks again to Patrick Ben Koetter for the original idea to create such an image!

This article was originally written by Roland Wolters.

Installing Debian on a large number of machines can be simplified considerably with the use of Preseeding and Netboot. Friedrich Weber, a school student on a work experience placement with us at our German office, has observed the process and captured it in a Howto here:

Imagine the following situation: you find yourself with ten to twenty brand new notebooks and the task of installing Debian on them and customising them to your own taste. It would hardly be much fun to perform the Debian installation and configuration manually on each notebook.

This is where Debian Preseed comes into play. The concept is simple and self-explanatory: normally, whoever performs the installation is faced with a number of questions during the process (e.g. language, partitioning, packages, bootloader, etc.). With Preseed, all of these questions can be answered in advance. Only those not already answered in the Preseed configuration remain for the Debian installer to ask. Ideally, these are the questions whose answers differ depending on the target system; they are asked at the start of the installation and the administrator must answer them manually. Once they have been dealt with, the installation can be left to run unattended.

Preseed is based on a simple text configuration file: preseed.cfg. As described above, it contains the answers to the questions asked during installation, in debconf format. Such a file consists of several lines, each of which defines a debconf configuration option (the answer to one question), for example:

d-i debian-installer/locale string de_DE.UTF-8

The first element of such a line is the name of the package being configured (d-i is an abbreviation for debian-installer), the second element is the name of the option being set, the third element is the type of the option (here a string), and the rest is the value of the option.
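The four-part structure of a preseed line can be made explicit with a small parser. This is a sketch for illustration, not part of debconf; the function name and the dictionary keys are chosen freely.

```python
def parse_preseed_line(line: str) -> dict:
    """Split a debconf preseed line into package, option, type and value."""
    # Split at most three times so that values containing spaces stay intact.
    package, option, value_type, *rest = line.split(None, 3)
    return {
        "package": package,
        "option": option,
        "type": value_type,
        "value": rest[0] if rest else "",  # some options have an empty value
    }


print(parse_preseed_line("d-i debian-installer/locale string de_DE.UTF-8"))
```

Comment lines starting with # and empty lines would have to be skipped before calling this function.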

In this example, we set the language to German with UTF-8 encoding. You can write lines like this yourself, or more simply use the tool debconf-get-selections: this command lists the options that have been set on the local system.

From this output you can pick the settings you want, adjust them if necessary, and copy them into preseed.cfg. Here is an example of preseed.cfg:

d-i debian-installer/locale string de_DE.UTF-8
d-i debian-installer/keymap select de-latin1
d-i console-keymaps-at/keymap select de
d-i languagechooser/language-name-fb select German
d-i countrychooser/country-name select Germany
d-i console-setup/layoutcode string de_DE
d-i clock-setup/utc boolean true
d-i time/zone string Europe/Berlin
d-i clock-setup/ntp boolean true
d-i clock-setup/ntp-server string ntp1
tasksel tasksel/first multiselect standard, desktop, gnome-desktop, laptop
d-i pkgsel/include string openssh-client vim less rsync

In addition to language and timezone settings, these options also select tasks and packages. They are not yet enough for a completely unattended installation, but they make a good start.

Now onto the question of where Preseed gets its data from. It is in fact possible to use Preseed with CD and DVD images or USB sticks, but it is generally more convenient to use a Debian Netboot image, essentially an installer that is started across the network and that can also obtain its Preseed configuration over the network.

This boot across the network is implemented with PXE and requires a system that can boot from a network card. The system must then be set to boot from the network card. It requests an IP address from a DHCP server via broadcast.

This DHCP server transmits not only a suitable IP address, but also the IP address of a so-called boot server. A boot server is a TFTP server that provides a bootloader, with which the administrator can select the desired Debian Installer. At the same time, boot options can be passed to the Debian Installer telling it that Preseed should be used and where it can find the Preseed configuration. Here is a snippet of the PXELINUX configuration file pxelinux.cfg/default:

label i386
    kernel debian-installer/i386/linux
    append vga=normal initrd=debian-installer/i386/initrd.gz netcfg/choose_interface=eth0 domain=example.com locale=de_DE debian-installer/country=DE debian-installer/language=de debian-installer/keymap=de-latin1-nodeadkeys console-keymaps-at/keymap=de-latin1-nodeadkeys auto-install/enable=false preseed/url=http://$server/preseed.cfg DEBCONF_DEBUG=5 -- quiet

When the user types i386, the kernel debian-installer/i386/linux (found on the TFTP server) is downloaded and run, along with a whole series of boot options. The Debian installer allows debconf options to be passed as boot parameters. This is used here to tell the installer where to find the Preseed configuration on the network (preseed/url).
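To see which of the boot parameters are debconf options, the append line can be taken apart. The following sketch treats every key=value token whose key contains a slash as a debconf option and stops at the -- separator. This is a simplification for illustration: aliases such as locale or domain are ignored, and the function name is made up.

```python
def debconf_boot_options(append: str) -> dict:
    """Collect key=value tokens whose key looks like a debconf option name."""
    options = {}
    for token in append.split():
        if token == "--":
            break  # simplification: ignore everything after the separator
        key, separator, value = token.partition("=")
        if separator and "/" in key:
            options[key] = value
    return options


append_line = (
    "vga=normal initrd=debian-installer/i386/initrd.gz "
    "netcfg/choose_interface=eth0 auto-install/enable=false "
    "preseed/url=http://$server/preseed.cfg -- quiet"
)
print(debconf_boot_options(append_line)["preseed/url"])
```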

In order to download this Preseed configuration, the installer must first configure the network. The options for this are passed as well (the hostname options are deliberately omitted here, as every target system has its own hostname). auto-install/enable would delay the language setup until after the network configuration, so that these settings can be read from preseed.cfg.

This is not necessary here, as the language settings are also passed as kernel options, which ensures that the network configuration already takes place in German. The examples and configuration excerpts mentioned here are obviously summarised and shortened. Even so, this blog post should have given you a glimpse into the concept of Preseed in connection with netboot. Finally, here is a complete version of preseed.cfg:

d-i debian-installer/locale string de_DE.UTF-8
d-i debian-installer/keymap select de-latin1
d-i console-keymaps-at/keymap select de
d-i languagechooser/language-name-fb select German
d-i countrychooser/country-name select Germany
d-i console-setup/layoutcode string de_DE
# Network
d-i netcfg/choose_interface select auto
d-i netcfg/get_hostname string debian
d-i netcfg/get_domain string example.com
# Package mirror
d-i mirror/protocol string http
d-i mirror/country string manual
d-i mirror/http/hostname string debian.example.com
d-i mirror/http/directory string /debian
d-i mirror/http/proxy string
d-i mirror/suite string lenny
# Timezone
d-i clock-setup/utc boolean true
d-i time/zone string Europe/Berlin
d-i clock-setup/ntp boolean true
d-i clock-setup/ntp-server string ntp.example.com
# Root-Account
d-i passwd/make-user boolean false
d-i passwd/root-password password secretpassword
d-i passwd/root-password-again password secretpassword
# Further APT-Options
d-i apt-setup/non-free boolean false
d-i apt-setup/contrib boolean false
d-i apt-setup/security-updates boolean true
d-i apt-setup/local0/source boolean false
d-i apt-setup/local1/source boolean false
d-i apt-setup/local2/source boolean false
# Tasks
tasksel tasksel/first multiselect standard, desktop
d-i pkgsel/include string openssh-client vim less rsync
d-i pkgsel/upgrade select safe-upgrade
# Popularity-Contest
popularity-contest popularity-contest/participate boolean true
# Command to be executed after the installation. `in-target` means that the following
# command is executed in the installed environment, rather than in the installation environment.
# Here http://$server/skript.sh is downloaded to /tmp, made executable and executed.
d-i preseed/late_command string in-target wget -P /tmp/ http://$server/skript.sh; in-target chmod +x /tmp/skript.sh; in-target /tmp/skript.sh

All Howtos of this blog are grouped together in the Howto category – and if you happen to be looking for Support and Services for Debian you’ve come to the right place at credativ.

This post was originally written by Irenie White.

The administration of a large number of servers can be quite tiresome without a central configuration management. This article gives a first introduction into the configuration management tool, Puppet.

Introduction

In our daily work at the Open Source Support Center we maintain a large number of servers. Managing larger clusters or setups means maintaining dozens of machines with an almost identical configuration and only slight variations, if any. Without central configuration management, making small changes to the configuration would mean repeating the same step on all machines. This is where Puppet comes into play.

Like many configuration management tools, Puppet uses a central server which manages the configuration. The clients query the server on a regular basis for new configuration via an encrypted connection. If a new configuration is found, it is imported as the server instructs: the client imports new files, modifies rights, starts services and executes commands, whatever the server says. The advantages are obvious:

- Each configuration change is done only once, regardless of the actual number of maintained servers. Unnecessary – and pretty boring – repetition is avoided, lucky us!

- The configuration is streamlined for all machines, which makes it much easier to maintain.

- A central infrastructure makes it easier to quickly get an overview about the setup – “running around” is not necessary anymore.

- Last but not least, a central configuration tree enables you to incorporate a simple version control of your configuration: for example, playing back the configuration “PRE-UPDATE” on all machines of an entire setup only takes a couple of commands!

Technical workflow

Puppet consists of a central server, called “Puppet Master”, and the clients, called “Nodes”. The nodes query the master for the current configuration. The master responds with a list of configuration and management items: files, services which have to be running, commands which need to be executed, and so on – the possibilities are practically endless:

- The master can hand over files which the node copies to a defined place, if they do not already exist there.

- The node is asked to check certain file and directory permissions and to correct them if necessary.

- Depending upon the operating system, the node checks the state of services and starts or stops them. It can also check for installed packages and if they are up to date.

- The master can force the node to execute arbitrary commands

- etc.

Of course, in general all tasks can be fulfilled by handing over files from the master to the client. However, in more complex setups this kind of behaviour is not easily arranged, nor does it simplify the setup. Puppet’s strength is that it facilitates abstract system tasks (restart services, ensure installed packages, add users, etc.), regardless of the actual changed files in the background. You can even use the same statement in Puppet to configure different versions of Linux or Unix.

Installation

First, you need the master, the center of all the configuration you want to manage:

apt-get install puppetmaster

Puppet expects that all machines in the network have FQDNs – but that should be the case anyway in a well maintained network.

Other machines become a node by installing the Puppet client: apt-get install puppet

Puppet, main configuration

The Puppet nodes do not need to be configured – they will look for a machine called “puppet” in the local network. As long as that name points to the master you do not have to do anything else.

Since the master provides files to the nodes, the internal file server must be configured accordingly. There are different solutions for the internal file server, depending on the needs of your setup. For example, it might be better for your setup to store all files you provide to the nodes on one place, and the actual configuration you provide to the nodes somewhere else. However, in our example we keep the files and the configuration for the nodes close, as it is outlined in Puppet’s Best Practice Guide and in the Module Configuration part of the Puppet documentation. Thus, it is enough to change the file /etc/puppet/fileserver.conf to:

[modules]
allow 192.168.0.1/24
allow *.credativ.de

Configuration of the configuration – Modules

Puppet’s way of managing configuration is to use sets of tasks grouped by topic. For example, all tasks related to SSH should go into the module “ssh”, while all tasks related to Apache should be placed in the module “apache” and so on. These sets of tasks are called “Modules” and are the core of Puppet – in a perfect Puppet setup everything is defined in modules! We will explain the structure of an SSH module to highlight the basics and ideas behind Puppet’s modules. We will also try to stay close to the Best Practice Guide to make it easier to check back against the Puppet documentation.

Please note, however, that this example is an example: in a real world setup the SSH configuration would be a bit more dynamic, but we focused on simple and easy-to-understand methods.

The SSH module

We have the following requirements:

- The package openssh-server must be installed and be the newest version.

- Each node’s sshd_config file has to be the same as the one saved on the master.

- In the event that the sshd_config is changed on any node, the sshd service should be restarted.

- The user credativ needs to have certain files in his/her directory $HOME/.ssh.

To comply with these requirements we start by creating some necessary paths:

mkdir -p /etc/puppet/modules/ssh/manifests
mkdir -p /etc/puppet/modules/ssh/files

The directory “manifests” contains the actual configuration instructions of the module and the directory “files” provides the files we hand over to the clients.

The instructions themselves are written down in init.pp in the “manifests” directory. The set of instructions to fulfil aims 1 – 4 are grouped in a so called “class”. For each task a “class” has one subsection, a type. So in our case we have four types, one for each aim:

class ssh {
    package { "openssh-server":
        ensure => latest,
    }

    file { "/etc/ssh/sshd_config":
        owner  => root,
        group  => root,
        mode   => 644,
        source => "puppet:///ssh/sshd_config",
    }

    service { ssh:
        ensure     => running,
        hasrestart => true,
        subscribe  => File["/etc/ssh/sshd_config"],
    }

    file { "/home/credativ/.ssh":
        path    => "/home/credativ/.ssh",
        owner   => "credativ",
        group   => "credativ",
        mode    => 600,
        recurse => true,
        source  => "puppet:///ssh/ssh",
        ensure  => [directory, present],
    }
}

Each type is a separate task and triggers a different action on the node:

package

Here we make sure that the package openssh-server is installed in the newest version.

file

A file on the node is compared with the version on the server and overwritten if necessary. Also, the rights are adjusted.

service

Well, as the name says, this deals with services: in our case the service ssh, which must be running and is restarted whenever the sshd_config file changes.

file

Here we have again the file type, but this time we do not compare a file, but an entire directory.

As mentioned above, the files and directories you configured so that the server provides them to the nodes must be available in the directory /etc/puppet/modules/ssh/files/.

Nodes and modules

We now have three parts: the master, the nodes and the modules. The next step is to tell the master which nodes are related to which modules. First, you must tell the master that this module exists in /etc/puppet/manifests/modules.pp:

import "ssh"

Next, you need to modify /etc/puppet/manifests/nodes.pp. This specifies which module is loaded for which node, and which modules should be loaded as default in the event that a node does not have a special entry. The entries for the nodes support inheritance.

So, for example, to have the module “rsyslog” ready for all nodes but the module “ssh” only ready for the node “external” you need the following entry:

node default {
    include rsyslog
}

node 'external' inherits default {
    include ssh
}

Puppet is now configured!
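How the node entries and their inheritance determine the modules of a node can be pictured with a small model. This is only an illustration of the idea, not how Puppet works internally; the data structure and the function `modules_for` are invented for this sketch.

```python
# Model of the nodes.pp example: each node lists its own modules and its parent.
NODES = {
    "default": {"inherits": None, "modules": ["rsyslog"]},
    "external": {"inherits": "default", "modules": ["ssh"]},
}


def modules_for(node_name: str) -> list:
    """Return the modules of a node, including those of inherited entries.
    Nodes without an entry of their own fall back to 'default'."""
    node = NODES.get(node_name, NODES["default"])
    inherited = modules_for(node["inherits"]) if node["inherits"] else []
    return inherited + node["modules"]


print(modules_for("external"))
print(modules_for("some-other-node"))
```

The node “external” ends up with both modules, while any node without a special entry only gets “rsyslog”.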

Certificates – secured communication between nodes and master

As mentioned above, the communication between master and node is encrypted. But that implies you have to verify the partners at least once. This can be done after a node queries the master for the first time. Whenever the master is queried by an unknown node it does not provide the default configuration but instead puts the node on a waiting list. You can check the waiting list with the command:

# puppetca --list

To incorporate a node into the Puppet system you need to sign its certificate:

# puppetca --sign external.example.com

The entire process is explained in more detail in the Puppet documentation.

Closing words

The example introduced in this article is very simple – as I noted, a real world example would be more complex and dynamic. However, it is a good way to start with Puppet, and the documentation linked throughout this article will help the willing reader to dive deeper into the components of Puppet.

We, here at credativ’s Open Source Support Center have gained considerable experience with Puppet in recent years and really like the framework. Also, in our day to day support and consulting work we see the market growing as more and more customers are interested in the framework. Right now, Puppet is in the fast lane and it will be interesting to see how more established solutions like cfengine will react to this competition.

This post was originally written by Roland Wolters.