If you had the choice, would you rather take Salsa or Guacamole? Let me explain why you should choose Guacamole over Salsa.

In this blog article, we want to take a look at one of the smaller Apache projects out there called Apache Guacamole. Apache Guacamole allows administrators to run a web-based client tool for accessing remote applications and servers. This can include remote desktop systems, applications or terminal sessions. Users can simply access them with their web browsers; no special client or other tools are required. From there, they can log in and access all preconfigured remote connections that have been specified by an administrator.

Guacamole supports a wide variety of protocols like VNC, RDP, and SSH. This way, users can access basically anything from remote terminal sessions to full-fledged graphical user interfaces provided by operating systems like Debian, Ubuntu, Windows and many more.

Convert every window application to a web application

If we spin this idea further, technically every window application that isn’t designed to run as a web application can be transformed into one by using Apache Guacamole. We helped a customer bring its legacy application to Kubernetes, so that users could run it in their web browsers. Sure, reimplementing the application from the ground up, so that it follows cloud-native principles, is the preferred solution. As always though, the required effort, experience and costs may exceed the available time and budget, and in those cases Apache Guacamole can provide a relatively easy way to realize such projects.

In this blog article, I want to show you how easy it is to run a legacy window application as a web app on Kubernetes. For this, we will use a Kubernetes cluster created by kind and create a Kubernetes Deployment to make kate – a KDE-based text editor – our own web application. It’s just an example, so there might be better applications to transform, but this one should be fine to show you the concepts behind Apache Guacamole.

So, without further ado, let’s create our kate web application.

Preparation of Kubernetes

Before we can start, we must make sure that we have a Kubernetes cluster that we can test on. If you already have a cluster, simply skip this section. If not, let’s spin one up by using kind.

kind is a lightweight tool that runs Kubernetes clusters inside Docker containers, so it works on practically any machine that can run Docker. It’s written in Go and can be installed like this:

# For AMD64 / x86_64
[ $(uname -m) = x86_64 ] && curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.22.0/kind-linux-amd64
# For ARM64
[ $(uname -m) = aarch64 ] && curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.22.0/kind-linux-arm64
chmod +x ./kind
sudo mv ./kind /usr/local/bin/kind
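If you want to script the download, the two architecture checks above can be folded into a small helper. The following is only a sketch: the function names kind_arch and kind_url are made up for this article, and only the two architectures shown above are handled.

```shell
# Map the machine name reported by `uname -m` to the architecture
# suffix used in the kind download URL (hypothetical helper).
kind_arch() {
  case "$1" in
    x86_64)  echo "amd64" ;;
    aarch64) echo "arm64" ;;
    *)       echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Build the download URL for a given kind version and machine name.
kind_url() {
  arch="$(kind_arch "$2")" || return 1
  echo "https://kind.sigs.k8s.io/dl/$1/kind-linux-$arch"
}
```

With these helpers in place, the download becomes a single line: curl -Lo ./kind "$(kind_url v0.22.0 "$(uname -m)")"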

Next, we need to install some dependencies for our cluster. This includes for example docker and kubectl.

$ sudo apt install docker.io kubernetes-client

Since we create our Kubernetes cluster with kind, we need Docker because the cluster runs within Docker containers on your host machine. Installing kubectl allows us to access the Kubernetes cluster after creating it.
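Before moving on, it can help to check that all required tools are actually on your PATH. A minimal sketch of such a pre-flight check follows; require_tools is a hypothetical helper name, not part of kind or kubectl.

```shell
# Report every tool from the argument list that cannot be found
# on the PATH and return a non-zero status if any is missing.
require_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For this article, the call would be require_tools docker kind kubectl.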

Once we have installed those packages, we can create our cluster. First, we must define a cluster configuration. It defines which ports are accessible from our host machine, so that we can access our Guacamole application. Remember, the cluster itself is operated within Docker containers, so we must ensure that we can access it from our machine. For this, we define the following configuration which we save in a file called cluster.yaml:

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30000
    hostPort: 30000
    listenAddress: "127.0.0.1"
    protocol: TCP

Hereby, we basically map the container’s port 30000 to our local machine’s port 30000, so that we can easily access it later on. Keep this in mind because it will be the port that we will use with our web browser to access our kate instance.

Ultimately, this configuration is consumed by kind. Besides the port configuration, it lets you adjust many other parameters of your cluster which are not mentioned here. It’s worth taking a look at kind’s documentation for this.

As soon as you have saved the configuration to cluster.yaml, we can create our cluster:

$ sudo kind create cluster --name guacamole --config cluster.yaml
Creating cluster "guacamole" ...
 ✓ Ensuring node image (kindest/node:v1.29.2) 🖼
 ✓ Preparing nodes 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing CNI 🔌
 ✓ Installing StorageClass 💾
Set kubectl context to "kind-guacamole"
You can now use your cluster with:

kubectl cluster-info --context kind-guacamole

Have a question, bug, or feature request? Let us know! https://kind.sigs.k8s.io/#community 🙂

Since we don’t want to run everything as root, let’s export the kubeconfig, so that we can use it with kubectl as our unprivileged user:

$ sudo kind export kubeconfig \
    --name guacamole \
    --kubeconfig $PWD/config
$ export KUBECONFIG=$PWD/config
$ sudo chown $(logname): $KUBECONFIG

By doing so, we are ready and can access our Kubernetes cluster using kubectl now. This is our baseline to start migrating our application.

Creation of the Guacamole Deployment

In order to run our application on Kubernetes, we need some sort of workload resource. Typically, you could create a Pod, Deployment, StatefulSet or DaemonSet to run workloads on a cluster.

Let’s create the Kubernetes Deployment for our own application. The example shown below shows the deployment’s general structure. Each container definition will have their dedicated examples afterwards to explain them in more detail.

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web-based-kate
  name: web-based-kate
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-based-kate
  template:
    metadata:
      labels:
        app: web-based-kate
    spec:
      containers:
        # The guacamole server component that each
        # user will connect to via their browser
        - name: guacamole-server
          image: docker.io/guacamole/guacamole:1.5.4
          ...
        # The daemon that opens the connection to the
        # remote entity
        - name: guacamole-guacd
          image: docker.io/guacamole/guacd:1.5.4
          ...
        # Our own self written application that we
        # want to make accessible via the web.
        - name: web-based-kate
          image: registry.example.com/own-app/web-based-kate:0.0.1
          ...
      volumes:
        - name: guacamole-config
          secret:
            secretName: guacamole-config
        - name: guacamole-server
          emptyDir: {}
        - name: web-based-kate-home
          emptyDir: {}
        - name: web-based-kate-tmp
          emptyDir: {}

As you can see, we need three containers and some volumes for our application. The first two containers are dedicated to Apache Guacamole itself. The first is the server component, which is the external endpoint for clients to access our web application. It provides the web server as well as the user management and configuration needed to run Apache Guacamole.

Next to this, there is the guacd daemon. This is the core component of Guacamole which creates the remote connections to the application based on the configuration done to the server. This daemon forwards the remote connection to the clients by making it accessible to the Guacamole server which then forwards the connection to the end user.

Finally, we have our own application. It will offer a connection endpoint to the guacd daemon using one of Guacamole’s supported protocols and provide the Graphical User Interface (GUI).

Guacamole Server

Now, let’s deep dive into each container specification. We are starting with the Guacamole server instance. This one handles the session and user management and contains the configuration which defines what remote connections are available and what are not.

- name: guacamole-server
  image: docker.io/guacamole/guacamole:1.5.4
  env:
    - name: GUACD_HOSTNAME
      value: "localhost"
    - name: GUACD_PORT
      value: "4822"
    - name: GUACAMOLE_HOME
      value: "/data/guacamole/settings"
    - name: HOME
      value: "/data/guacamole"
    - name: WEBAPP_CONTEXT
      value: ROOT
  volumeMounts:
    - name: guacamole-config
      mountPath: /data/guacamole/settings
    - name: guacamole-server
      mountPath: /data/guacamole
  ports:
    - name: http
      containerPort: 8080
  securityContext:
    allowPrivilegeEscalation: false
    privileged: false
    readOnlyRootFilesystem: true
    capabilities:
      drop: ["all"]
  resources:
    limits:
      cpu: "250m"
      memory: "256Mi"
    requests:
      cpu: "250m"
      memory: "256Mi"

Since it needs to connect to the guacd daemon, we have to provide the connection information for guacd by passing them into the container using environment variables like GUACD_HOSTNAME or GUACD_PORT. In addition, Guacamole would usually be accessible via http://<your domain>/guacamole.

This behavior, however, can be adjusted by modifying the WEBAPP_CONTEXT environment variable. In our case, for example, we don’t want users to have to type in /guacamole to access it, but simply use http://<your domain>/ instead.
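To make the effect of WEBAPP_CONTEXT more tangible, here is a small sketch that derives the resulting URL from the host and the context value. The function name guacamole_url is hypothetical, and the mapping only reflects the behavior described above: the default path is /guacamole, and ROOT serves the application at the top level.

```shell
# Print the URL under which Guacamole is reachable for a given
# host and WEBAPP_CONTEXT value (defaults to "guacamole").
guacamole_url() {
  host="$1"
  context="${2:-guacamole}"
  if [ "$context" = "ROOT" ]; then
    echo "http://$host/"
  else
    echo "http://$host/$context/"
  fi
}
```

For our setup, guacamole_url localhost:30000 ROOT yields the address that we will later open in the browser.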

Guacamole Guacd

Then, there is the guacd daemon.

- name: guacamole-guacd
  image: docker.io/guacamole/guacd:1.5.4
  args:
    - /bin/sh
    - -c
    - /opt/guacamole/sbin/guacd -b 127.0.0.1 -L $GUACD_LOG_LEVEL -f
  securityContext:
    allowPrivilegeEscalation: true
    privileged: false
    readOnlyRootFilesystem: true
    capabilities:
      drop: ["all"]
  resources:
    limits:
      cpu: "250m"
      memory: "512Mi"
    requests:
      cpu: "250m"
      memory: "512Mi"

It’s worth mentioning that you should modify the arguments used to start the guacd container. In the example above, we want guacd to listen only on localhost for security reasons. All containers within the same pod share the same network namespace. As a result, they can access each other via localhost. That said, there is no need to make this service accessible to other services running outside of this pod, so we can limit it to localhost only. To achieve this, you need to set the -b 127.0.0.1 parameter, which sets the corresponding listen address. Since you need to overwrite the whole command, don’t forget to also specify the -L and -f parameters. The first sets the log level and the second keeps the process in the foreground.

Web Based Kate

To finish everything off, we have the kate application which we want to transform to a web application.

- name: web-based-kate
  image: registry.example.com/own-app/web-based-kate:0.0.1
  env:
    - name: VNC_SERVER_PORT
      value: "5900"
    - name: VNC_RESOLUTION_WIDTH
      value: "1280"
    - name: VNC_RESOLUTION_HEIGHT
      value: "720"
  securityContext:
    allowPrivilegeEscalation: true
    privileged: false
    readOnlyRootFilesystem: true
    capabilities:
      drop: ["all"]
  volumeMounts:
    - name: web-based-kate-home
      mountPath: /home/kate
    - name: web-based-kate-tmp
      mountPath: /tmp

Configuration of our Guacamole setup

With the deployment in place, we need to prepare the configuration for our Guacamole setup. For Guacamole to know which users exist and which connections should be offered, we need to provide it with a mapping configuration.

In this example, a simple user mapping is shown for demonstration purposes. It uses a static mapping defined in an XML file that is handed over to the Guacamole server. Typically, you would use other authentication methods instead, like a database or LDAP.

This said however, let’s continue with our static one. For this, we simply define a Kubernetes Secret which is mounted into the Guacamole server. Hereby, it defines two configuration files. One is the so called guacamole.properties. This is Guacamole’s main configuration file. Next to this, we also define the user-mapping.xml which contains all available users and their connections.

apiVersion: v1
kind: Secret
metadata:
  name: guacamole-config
stringData:
  guacamole.properties: |
    enable-environment-properties: true
  user-mapping.xml: |
    <user-mapping>
      <authorize username="admin" password="PASSWORD" encoding="sha256">
        <connection name="web-based-kate">
          <protocol>vnc</protocol>
          <param name="hostname">localhost</param>
          <param name="port">5900</param>
        </connection>
      </authorize>
    </user-mapping>

As you can see, we only defined one specific user called admin, which can use a connection called web-based-kate. In order to access the kate instance, Guacamole uses VNC as the configured protocol. To make this happen, our web application must offer a VNC server port on the other side, so that the guacd daemon can access it and forward the remote session to clients. Keep in mind that you need to replace the string PASSWORD with a proper SHA-256 sum of the password. The SHA-256 sum could look like this, for example:

$ echo -n "test" | sha256sum
9f86d081884c7d659a2feaa0c55ad015a3bf4f1b2b0b822cd15d6c15b0f00a08  -
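Since only the hex digest belongs into user-mapping.xml, it can be handy to strip the trailing file name marker that sha256sum prints. The following sketch assumes the sha256sum tool from GNU coreutils; hash_password is a hypothetical helper name.

```shell
# Print only the SHA-256 hex digest of the given password.
# printf is used instead of echo so that no trailing newline
# ends up in the hashed input.
hash_password() {
  printf '%s' "$1" | sha256sum | cut -d ' ' -f 1
}
```

Keep in mind that a password passed as an argument may end up in your shell history, so for real credentials you might prefer to read it from a prompt or a file.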

Next, the hostname parameter is referencing the corresponding VNC server of our kate container. Since we are starting our container alongside with our Guacamole containers within the same pod, the Guacamole Server as well as the guacd daemon can access this application via localhost. There is no need to set up a Kubernetes Service in front of it since only guacd will access the VNC server and forward the remote session via HTTP to clients accessing Guacamole via their web browsers. Finally, we also need to specify the VNC server port which is typically 5900 but this could be adjusted if needed.

The corresponding guacamole.properties is quite short. By enabling the enable-environment-properties configuration parameter, we make sure that every Guacamole configuration parameter can also be set via environment variables. This way, we don’t need to modify this configuration file every time we want to adjust the configuration; we only need to provide updated environment variables to the Guacamole server container.
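To our knowledge, Guacamole derives the environment variable name from the property name by converting it to upper case and replacing every dash with an underscore, which is how guacd-hostname corresponds to the GUACD_HOSTNAME variable used in our Deployment. A small sketch of this naming rule, with property_to_env being a made-up helper name:

```shell
# Convert a Guacamole property name into the matching
# environment variable name: upper case, dashes to underscores.
property_to_env() {
  printf '%s\n' "$1" | tr '[:lower:]' '[:upper:]' | tr '-' '_'
}
```

For example, property_to_env guacd-port prints GUACD_PORT.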

Make Guacamole accessible

Last but not least, we must make the Guacamole server accessible for clients. Although each provided service can access each other via localhost, the same does not apply to clients trying to access Guacamole. Therefore, we must make Guacamole’s server port 8080 available to the outside world. This can be achieved by creating a Kubernetes Service of type NodePort. This service is forwarding each request from a local node port to the corresponding container that is offering the configured target port. In our case, this would be the Guacamole server container which is offering port 8080.

apiVersion: v1
kind: Service
metadata:
  name: web-based-kate
spec:
  type: NodePort
  selector:
    app: web-based-kate
  ports:
    - name: http
      protocol: TCP
      port: 8080
      targetPort: 8080
      nodePort: 30000

This specific port is then mapped to the Node’s 30000 port for which we also configured the kind cluster in such a way that it forwards its node port 30000 to the host system’s port 30000. This port is the one that we would need to use to access Guacamole with our web browsers.
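The value 30000 is not arbitrary: unless the cluster has been configured differently, Kubernetes only accepts node ports in the range 30000 to 32767. If you want to pick another port, a quick sanity check could look like the following sketch, where is_valid_node_port is a hypothetical helper and the range is the Kubernetes default, which your cluster may override.

```shell
# Succeed only if the argument is a number within the default
# Kubernetes NodePort range (30000-32767).
is_valid_node_port() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # empty or not purely numeric
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}
```

Remember that any port you choose here must also be listed in the extraPortMappings of the kind cluster configuration.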

Preparation of the Application container

Before we can start to deploy our application, we need to prepare our kate container. For this, we simply create a Debian container that is running kate. Keep in mind that you would typically use lightweight base images like alpine to run applications like this. For this demonstration, however, we use the Debian image since it is easier to spin up, even though you only need a small fraction of the functionality that this base image provides. Moreover – from a security point of view – you want to keep your images small to minimize the attack surface and make them easier to maintain. For now, however, we will continue with the Debian image.

In the example below, you can see a Dockerfile for the kate container.

FROM debian:12

# Install all required packages
RUN apt update && \
    apt install -y x11vnc xvfb kate

# Add user for kate
RUN adduser kate --system --home /home/kate --uid 999

# Copy our entrypoint in the container
COPY entrypoint.sh /opt

USER 999
ENTRYPOINT [ "/opt/entrypoint.sh" ]

Here you see that we create a dedicated user called kate (user ID 999) for which we also create a home directory. This home directory is used for all files that kate creates at runtime. Since we set readOnlyRootFilesystem to true, we must make sure that we mount some sort of writable volume (e.g. an emptyDir) to kate’s home directory. Otherwise, kate wouldn’t be able to write any runtime data.

Moreover, we have to install the following three packages:

- x11vnc

- xvfb

- kate

These are the only packages we need for our container. In addition, we also need to create an entrypoint script to start the application and prepare the container accordingly. This entrypoint script creates the configuration for kate, starts it in a virtual display by using xvfb-run and provides this virtual display to end users by using the VNC server via x11vnc. In the meantime, xdriinfo is used to check whether the virtual display came up successfully after starting kate. If it takes too long, the entrypoint script will fail by returning the exit code 1.

By doing this, we ensure that the container is not stuck in an infinite loop during a failure and let Kubernetes restart the container whenever it couldn’t start the application successfully. Furthermore, it is important to check that the virtual display came up before handing it over to the VNC server, because the VNC server would crash if the virtual display were not up and running, since it needs something to share. On the other hand, our container will be stopped whenever kate is terminated, because this also terminates the virtual display and, in the end, the VNC server, which lets the container exit, too. This way, we don’t need to take care of it on our own.

#!/bin/bash
set -e

# If no resolution is provided
if [ -z "$VNC_RESOLUTION_WIDTH" ]; then
  VNC_RESOLUTION_WIDTH=1920
fi
if [ -z "$VNC_RESOLUTION_HEIGHT" ]; then
  VNC_RESOLUTION_HEIGHT=1080
fi

# If no server port is provided
if [ -z "$VNC_SERVER_PORT" ]; then
  VNC_SERVER_PORT=5900
fi

# Prepare configuration for kate
mkdir -p $HOME/.local/share/kate
echo "[MainWindow0]
"$VNC_RESOLUTION_WIDTH"x"$VNC_RESOLUTION_HEIGHT" screen: Height=$VNC_RESOLUTION_HEIGHT
"$VNC_RESOLUTION_WIDTH"x"$VNC_RESOLUTION_HEIGHT" screen: Width=$VNC_RESOLUTION_WIDTH
"$VNC_RESOLUTION_WIDTH"x"$VNC_RESOLUTION_HEIGHT" screen: XPosition=0
"$VNC_RESOLUTION_WIDTH"x"$VNC_RESOLUTION_HEIGHT" screen: YPosition=0
Active ViewSpace=0
Kate-MDI-Sidebar-Visible=false" > $HOME/.local/share/kate/anonymous.katesession

# We need to define an XAuthority file
export XAUTHORITY=$HOME/.Xauthority

# Define execution command
APPLICATION_CMD="kate"

# Let's start our application in a virtual display
xvfb-run \
  -n 99 \
  -s ':99 -screen 0 '$VNC_RESOLUTION_WIDTH'x'$VNC_RESOLUTION_HEIGHT'x16' \
  -f $XAUTHORITY \
  $APPLICATION_CMD &

# Let's wait until the virtual display is initialized before
# we proceed. But don't wait infinitely.
TIMEOUT=10
while ! (xdriinfo -display :99 nscreens); do
  sleep 1
  let TIMEOUT-=1
done

# Now, let's make the virtual display accessible by
# exposing it via the VNC Server that is listening on
# localhost and the specified port (e.g. 5900)
x11vnc \
  -display :99 \
  -nopw \
  -localhost \
  -rfbport $VNC_SERVER_PORT \
  -forever
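The timeout in the wait loop above is rather implicit: as far as we can tell, it relies on let returning a non-zero status once TIMEOUT reaches 0, which then makes set -e abort the script. If you prefer to spell this out, a more explicit variant could look like the following sketch. The helper name wait_for is hypothetical and not part of the original script.

```shell
# wait_for SECONDS COMMAND [ARGS...]
# Retry COMMAND once per second until it succeeds. Give up and
# return 1 after roughly SECONDS seconds.
wait_for() {
  remaining="$1"
  shift
  until "$@" >/dev/null 2>&1; do
    if [ "$remaining" -le 0 ]; then
      echo "timed out waiting for: $*" >&2
      return 1
    fi
    sleep 1
    remaining=$((remaining - 1))
  done
}
```

In the entrypoint script, the loop could then be replaced by wait_for 10 xdriinfo -display :99 nscreens || exit 1.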

After preparing those files, we can now create our image and import it to our Kubernetes cluster by using the following commands:

# Do not forget to give your entrypoint script
# the proper permissions to be executed
$ chmod +x entrypoint.sh

# Next, build the image and import it into kind,
# so that it can be used from within the cluster.
$ sudo docker build -t registry.example.com/own-app/web-based-kate:0.0.1 .
$ sudo kind load -n guacamole docker-image registry.example.com/own-app/web-based-kate:0.0.1

The image will be imported to kind, so that every workload resource operated in our kind cluster can access it. If you use some other Kubernetes cluster, you would need to upload this to a registry that your cluster can pull images from.

Finally, we can also apply our previously created Kubernetes manifests to the cluster. Let’s say we saved everything to one file called kubernetes.yaml. Then, you can simply apply it like this:

$ kubectl apply -f kubernetes.yaml
deployment.apps/web-based-kate configured
secret/guacamole-config configured
service/web-based-kate unchanged

This way, a Kubernetes Deployment, Secret and Service are created, which ultimately results in a Kubernetes Pod that we can access afterwards.

$ kubectl get pod
NAME                              READY   STATUS    RESTARTS   AGE
web-based-kate-7894778fb6-qwp4z   3/3     Running   0          10m

Verification of our Deployment

Now comes the moment of truth! After preparing everything, we should be able to access our web-based kate application with our web browser. As mentioned earlier, we configured kind in such a way that we can access our application via our local port 30000. Every request to this port is forwarded to the kind control plane node, from where it is picked up by the Kubernetes Service of type NodePort. This one then forwards all requests to our designated Guacamole server container, which provides the web server for accessing remote applications via Guacamole.

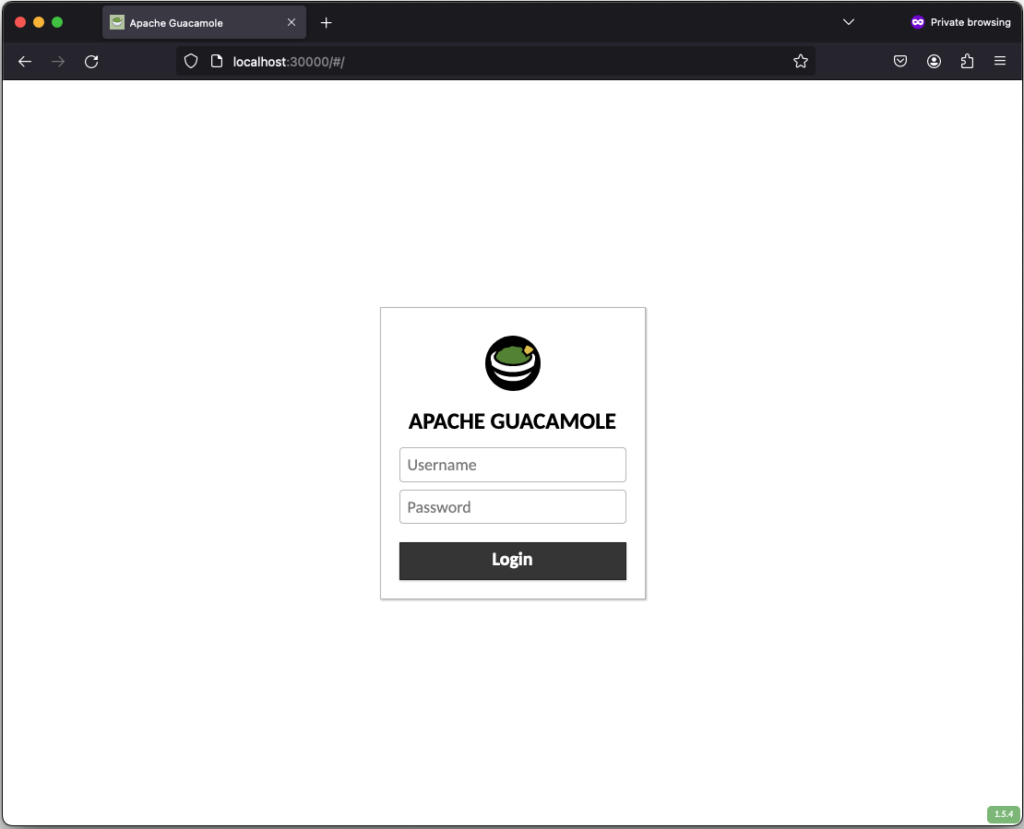

If everything works out, you should be able to see the following login screen:

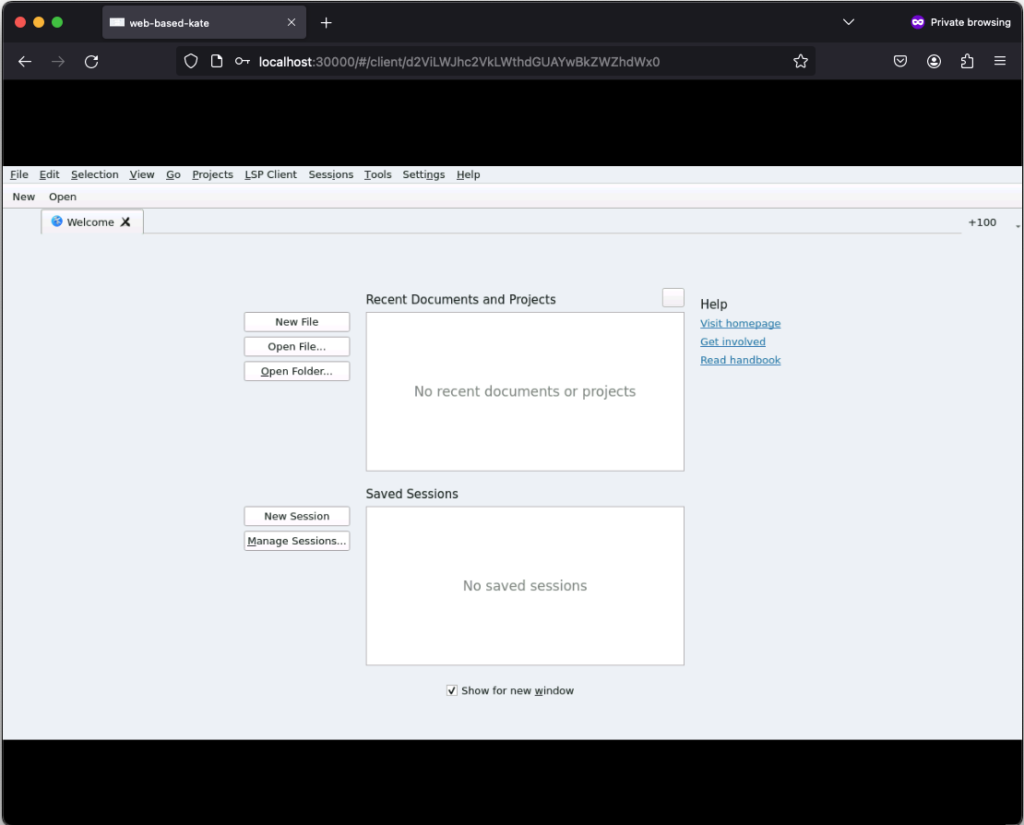

After successfully logging in, the remote connection is established and you should be able to see the welcome screen from kate:

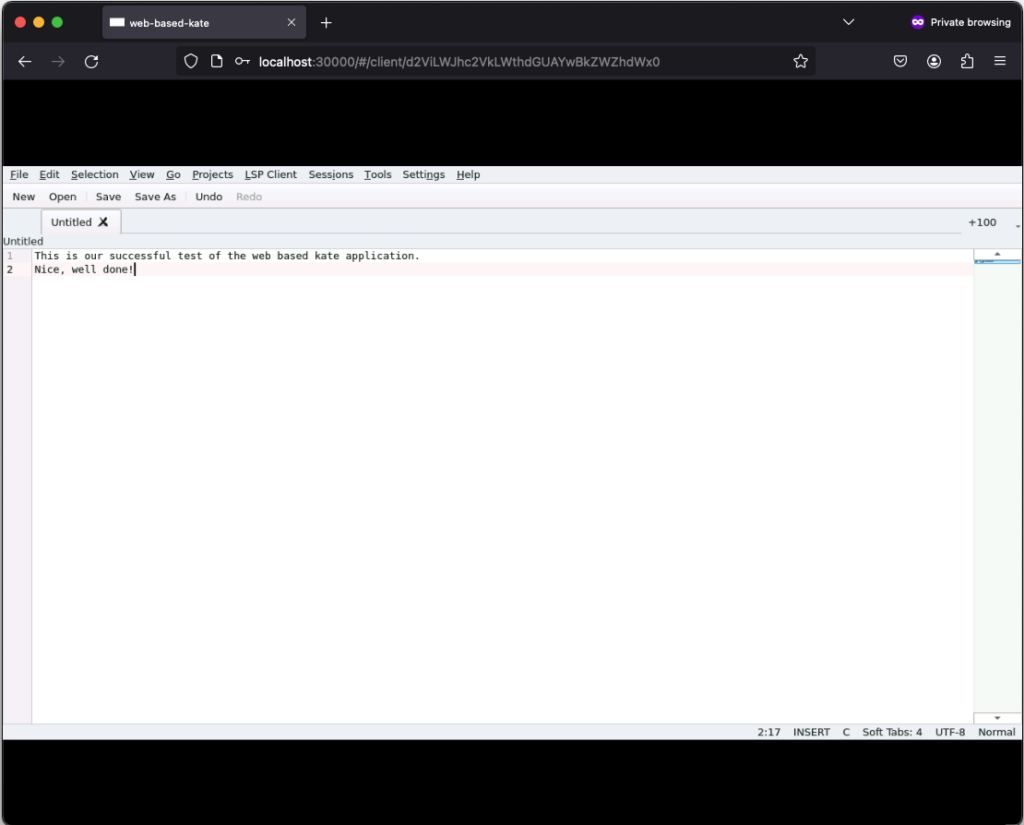

If you click on New, you can create a new text file:

Those text files can even be saved but keep in mind that they will only exist as long as our Kubernetes Pod exists. Once it gets deleted, the corresponding EmptyDir, that we mounted into our kate container, gets deleted as well and all files in it are lost. Moreover, the container is set to read-only meaning that a user can only write files to the volumes (e.g. EmptyDir) that we mounted to our container.

Conclusion

After seeing that it’s relatively easy to convert every application to a web based one by using Apache Guacamole, there is only one major question left…

Which do you prefer: Salsa or Guacamole?

This year’s All Systems Go! took place on 2023-09-13 and 2023-09-14 at the nice location of Neue Mälzerei in Berlin, Germany. One Debian Developer and employee of NetApp had the opportunity to attend this conference: Bastian Blank.

All Systems Go! focuses on low-level user space for Linux systems: everything that sits above the kernel but is so fundamental that systems won’t work without it.

A lot of talks happened, ranging from how to use TPM with Linux for measured boot, how to overcome another Y2038 problem, up to just how to actually boot fast. Videos for all talks are kindly provided by the nice people of the C3VOC.

DebConf23 https://debconf23.debconf.org/ took place from 2023-09-10 to 2023-09-17 in Kochi, India.

Four employees (three Debian developers) from NetApp had the opportunity to participate in the annual event, which is the most important conference in the Debian world: Christoph Senkel, Andrew Lee, Michael Banck and Noël Köthe.

DebCamp

What is DebCamp? DebCamp usually takes place in the week before DebConf begins. For participants, it is a hacking session dedicated to Debian contributors, who can focus on their Debian-related projects, tasks, or problems without interruptions.

DebCamps are largely self-organized since it’s a time for people to work. Some prefer to work individually, while others participate in or organize sprints. Both approaches are encouraged, although it’s recommended to plan your DebCamp week in advance.

During this DebCamp, there were the following public sprints:

- Debian Boot Camp: An introduction for newcomers on how to contribute to Debian more deeply.

- GPG keys 101: Get your keys ready for DebConf’s key signing party: An introduction to PGP/GPG keys and the web of trust, primarily targeting those new to Debian who don’t have a GPG key yet.

In addition to the organizational part, our colleague Andrew also attended and arranged private sprints during DebCamp:

- Debian Installer hacking: Debian installer is a complex project with multiple components. We had an on-site d-i hacker, Alper Nebi Yasak, who guided us in addressing issues specific to zh_TW locale users in the Debian installer.

- LXQt/LXDE hacking session: LXQt and LXDE are lightweight desktop environments for Linux users. Our colleague Andrew Lee leads the LXQt team in Debian and also provided assistance to the LXDE team in the absence of their original team leader from Ukraine.

DebCamp also allows the DebConf committee to work together with the local team to prepare additional details for the conference. During DebCamp, the organization team typically handles the following tasks:

- Setting up the Frontdesk: This involves providing conference badges (with maps and additional information) and distributing SWAG such as food vouchers, conference t-shirts, and sponsor gifts.

- Setting up the network: This includes configuring the network in conference rooms, hack labs, and video team equipment for live streaming during the event.

- Accommodation arrangements: Assigning rooms for participants to check in to on-site accommodations.

- Food arrangements: Catering to various dietary requirements, including regular, vegetarian, vegan, halal, gluten-free (regular, vegetarian, vegan), and accommodating special religious and allergy-related needs.

- Setting up a conference bar: Providing a relaxed environment for participants to socialize and get to know each other.

- Writing daily announcements: Keeping participants informed about ongoing activities.

- Organizing day trip options.

- Arranging parties.

Conference talks

The conference itself started on Sunday, 10 September, with an opening session, some organizational announcements, GPG keysigning information (the fingerprint was printed on the badge) and a big welcome to everyone on site and around the world.

Most talks of DebConf were live streamed and are available in the video archive. The topics ranged from very technical (e.g., “What’s new in the Linux kernel”) through organizational (e.g., “DebConf committee”) to social (e.g., “Adulting”).

Schedule: https://debconf23.debconf.org/schedule/

Videos: https://meetings-archive.debian.net/pub/debian-meetings/2023/DebConf23/

Many thanks to the volunteer video team for the streaming and recording coverage.

Lightning Talks

On the last day of DebConf, the traditional lightning talks were held. One talk in particular stood out: the presentation of extrepo by Wouter Verhelst. At NetApp, we use ThinkPads running Debian bookworm. However, in a corporate environment, non-packaged software needs to be used from time to time, and extrepo is a very elegant way to solve this problem by providing and maintaining an up-to-date list of third-party APT repositories such as those for Slack or Docker Desktop.

Sadya

On Tuesday, an incredibly special lunch was offered at DebConf: a traditional Kerala vegetarian banquet (Sadya in Malayalam), which is served on a banana leaf and eaten by hand. It was quite unusual for the European attendees at first, but a wonderful experience once one got into it.

Daytrip

On Wednesday, the Daytrip happened and everybody could choose out of five different trips: https://wiki.debian.org/DebConf/23/DayTrip

The houseboat trip was a bus tour to Alappuzha about 60 km away from the conference venue. It was interesting to see (and hear) the typical bus, car, motorbike and Tuktuk road traffic in action. During the boat trip the participants socialized and visited the local landscape outside the city.

Unfortunately, there was an accident on one of the day trip options: Abraham, a local Indian participant, drowned while swimming.

https://debconf23.debconf.org/news/2023-09-14-mourning-abraham/

It was a big shock for everybody and all events including the traditional formal dinner were cancelled for Thursday. The funeral with the family was on Friday morning and DebConf people had the opportunity with organized buses to participate and say goodbye.

NetApp internal dinner on Friday

The NetApp team at DebConf took the chance to go to a local restaurant (“where the locals go to eat”) and enjoyed very tasty food.

DebConf24

Sunday was the last day of DebConf23. As usual, the upcoming DebConf24 was very briefly presented and there was a call for bids for DebConf25.

Maybe see you in Haifa, Israel next year.

https://www.debconf.org/goals.shtml

Authors: Andrew Lee, Michael Banck and Noël Köthe

Congratulations to the Debian Community

The Debian Project just released version 11 (aka “bullseye”) of their free operating system. In total, over 6,208 contributors worked on this release and were indispensable in making this launch happen. We would like to thank everyone involved for their combined efforts, hard work, and many hours spent in recent years building this new release that will benefit the entire open source community.

We would also like to acknowledge our in-house Debian developers who contributed to this effort. We really appreciate the work you do on behalf of the community and stand firmly behind your contributions.

What’s New in Debian 11 Bullseye

Debian 11 comes with a number of meaningful changes and enhancements. The new release includes over 13,370 new software packages, for a total of over 57,703 packages on release. Out of these, 35,532 packages have been updated to newer versions, including an update in the kernel from 4.19 in “buster” to 5.10 in bullseye.

Bullseye expands on the capabilities of driverless printing with Common Unix Printing System (CUPS) and driverless scanning with Scanner Access Now Easy (SANE). While it was possible to use CUPS for driverless printing with buster, bullseye comes with the package ipp-usb, which allows a USB device to be treated as a network device and thus extend driverless printing capabilities. SANE connects to this when set up correctly and connected to a USB port.

As in previous releases, Debian 11 comes with a Debian Edu / Skolelinux version. Debian Edu has been a complete solution for schools for many years. Debian Edu can provide the entire network for a school and then only users and machines need to be added after installation. This can also be easily managed via the web interface GOsa².

Debian 11 bullseye can be downloaded here.

https://www.debian.org/devel/debian-installer/index.en.html

For more information and greater technical detail on the new Debian 11 release, please refer to the official release notes on Debian.org

https://www.debian.org/releases/bullseye/amd64/release-notes/.

Contributions by Instaclustr Employees

Our Debian roots run deep here. credativ, which was acquired by Instaclustr in March 2021, has always been an active part of the Debian community and visited every DebConf since 2004. Debian also serves as the operating system at the heart of the Instaclustr Managed Platform.

For the release of Debian 11, our team has taken over various responsibilities in the community. Our contributions include:

- 90% of the PostgreSQL packaging of the new release

- Maintenance work on various packages

- Support as Debian-Sys-Admin

- Contributions to Debian Edu/Skolelinux

- Development work on kernel images

- Development work on cloud images

- Development work for various Debian backports

- Work on salsa.debian.org

Many of our colleagues have made significant contributions to the current release, including:

- Adrian Vondendriesch

- Alexander Wirt (Formorer)

- Bastian Blank (waldi)

- Christoph Berg (Myon)

- Dominik George (natureshadow)

- Felix Geyer (fgeyer)

- Martin Zobel-Helas (zobel)

- Michael Banck (azeem)

- Michael Meskes (feivel)

- Noël Köthe (Noel)

- Sven Bartscher (kritzefitz)

How to Upgrade

Given that Debian 11 bullseye is a major release, we suggest that everyone running on Debian 10 buster upgrade. The main steps for an upgrade include:

- Make sure to back up any data that must not be lost, and prepare for recovery

- Remove non-Debian packages and clean up leftover files and old versions

- Upgrade to the latest point release

- Check and prepare your APT source-list files by adding the relevant Internet sources or local mirrors

- Upgrade your packages and then upgrade your system

You can find a more detailed walkthrough of the upgrade process in the Debian documentation.
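
The APT source step can be sketched as a small shell filter. This is only an illustration that assumes a stock buster sources.list; the helper name rewrite_sources_for_bullseye is made up for this example. It takes into account that the security suite is called bullseye-security in Debian 11 instead of buster/updates.

```shell
# Hypothetical helper (not part of Debian): rewrite buster APT entries
# for bullseye. Reads sources.list content on stdin, writes to stdout.
rewrite_sources_for_bullseye() {
    # The security suite was renamed, so handle it before the generic
    # codename replacement.
    sed -e 's|buster/updates|bullseye-security|g' \
        -e 's|buster|bullseye|g'
}

# Example (as root, after taking a backup; kept as comments so that
# sourcing this snippet has no side effects):
#   cp /etc/apt/sources.list /etc/apt/sources.list.buster
#   rewrite_sources_for_bullseye </etc/apt/sources.list.buster >/etc/apt/sources.list
#   apt update
#   apt upgrade --without-new-pkgs
#   apt full-upgrade
```

Review the rewritten file before running apt; entries for third-party repositories may need different handling.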

All existing credativ customers who are running a Debian-based installation are naturally covered by our service and support and are encouraged to reach out.

If you are interested in upgrading from your old Debian version, or if you have questions regarding your Debian infrastructure, do not hesitate to contact us at info@credativ.de.

Or, you can get started in minutes with open source technologies such as Apache Cassandra, Apache Kafka, Redis, and OpenSearch on the Instaclustr Managed Platform. Sign up for a free trial today.

Patroni is a clustering solution for PostgreSQL® that is getting more and more popular in the cloud and Kubernetes sector due to its operator pattern and integration with Etcd or Consul. Some time ago we wrote a blog post about the integration of Patroni into Debian. Recently, we also uploaded the closely related vip-manager project to Debian. Below, we present vip-manager and how we integrated it into Debian.

To recap, Patroni uses a distributed consensus store (DCS) for leader election and failover. The current cluster leader periodically updates its leader key in the DCS. As soon as Patroni cannot update the key, for whatever reason, the key becomes stale. A new leader election is then initiated among the remaining cluster nodes.

PostgreSQL Client-Solutions for High-Availability

From the user’s point of view it needs to be ensured that the application is always connected to the leader, as no write transactions are possible on the read-only standbys. Conventional high-availability solutions like Pacemaker utilize virtual IPs (VIPs) that are moved to the primary node in the case of a failover.

For Patroni, such a mechanism did not exist so far. Usually, HAProxy (or a similar solution) is used which does periodic health-checks on each node’s Patroni REST-API and routes the client requests to the current leader.
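
The health-check approach can be sketched in shell. This is a rough illustration, not an HAProxy configuration: the function names are made up, and port 8008 is an assumption (Patroni’s default REST port). It relies on the Patroni REST API answering a request to / with HTTP status 200 on the leader only.

```shell
# Hypothetical helper: translate the HTTP status code of a Patroni
# health check into a routing decision, the way a load balancer would.
route_decision() {
    case "$1" in
        200) echo "route" ;;   # node reports itself as leader
        *)   echo "skip" ;;    # standby, unreachable, or error
    esac
}

# Probe one node and print the HTTP status code.
# curl prints 000 when the node cannot be reached.
probe_node() {
    curl -s -o /dev/null -m 2 -w '%{http_code}' "http://$1:8008/"
}

# Example:
#   for node in pg1 pg2 pg3; do
#       echo "$node: $(route_decision "$(probe_node "$node")")"
#   done
```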

An alternative is client-based failover (available since PostgreSQL 10), where all cluster members are configured in the client connection string. After a connection failure, the client tries each remaining cluster member in turn until it reaches a new primary.
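
As a minimal sketch of such a connection string, the following helper builds a multi-host libpq URI. The helper name is made up, and the host names pg1, pg2 and pg3 are borrowed from the examples further down; target_session_attrs=read-write makes the client skip servers that only accept read-only sessions.

```shell
# Hypothetical helper: build a multi-host libpq connection URI.
# $1 is the database name, all further arguments are host names.
build_failover_uri() {
    dbname=$1
    shift
    hosts=$(printf '%s,' "$@")
    hosts=${hosts%,}    # drop the trailing comma
    printf 'postgresql://%s/%s?target_session_attrs=read-write\n' "$hosts" "$dbname"
}

# Example:
#   psql "$(build_failover_uri postgres pg1 pg2 pg3)"
```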

vip-manager

A new and convenient approach to client failover is vip-manager. It is a service written in Go that is started on all cluster nodes and connects to the DCS. If the local node owns the leader key, vip-manager brings up the configured VIP. In case of a failover, vip-manager removes the VIP on the old leader, and the corresponding service on the new leader brings it up there. The clients are configured for the VIP and will always connect to the cluster leader.
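
The decision each node has to make can be sketched as follows. This is our own simplified model and not vip-manager’s actual code (which is written in Go); the function name and its arguments are made up for illustration.

```shell
# Hypothetical sketch of the per-node decision.
# $1: current value of the leader key in the DCS
# $2: name of the local node
# $3: "true" if the VIP is currently configured locally, else "false"
vip_action() {
    if [ "$1" = "$2" ]; then desired=true; else desired=false; fi
    if [ "$3" = "$desired" ]; then
        echo "nothing to do"
    elif [ "$desired" = true ]; then
        echo "configure"
    else
        echo "remove"
    fi
}

# Example: pg1 holds the leader key but has no VIP yet
#   vip_action pg1 pg1 false
```

The comparison of actual and desired state mirrors the “state is ..., desired ...” messages in the journal output shown further down.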

Debian-Integration of vip-manager

For Debian, the pg_createconfig_patroni program from the Patroni package has been adapted so that it can now create a vip-manager configuration:

pg_createconfig_patroni 11 test --vip=10.0.3.2

Similar to Patroni, we start the service for each instance:

systemctl start vip-manager@11-test

The output of patronictl shows that pg1 is the leader:

+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.247 | Leader | running | 1  |           |
| 11-test | pg2    | 10.0.3.94  |        | running | 1  | 0         |
| 11-test | pg3    | 10.0.3.214 |        | running | 1  | 0         |
+---------+--------+------------+--------+---------+----+-----------+

In the journal of pg1, it can be seen that the VIP has been configured:

Jan 19 14:53:38 pg1 vip-manager[9314]: 2020/01/19 14:53:38 IP address 10.0.3.2/24 state is false, desired true

Jan 19 14:53:38 pg1 vip-manager[9314]: 2020/01/19 14:53:38 Configuring address 10.0.3.2/24 on eth0

Jan 19 14:53:38 pg1 vip-manager[9314]: 2020/01/19 14:53:38 IP address 10.0.3.2/24 state is true, desired true

If LXC containers are used, one can also see the VIP in the output of lxc-ls -f:

NAME STATE   AUTOSTART GROUPS IPV4                 IPV6 UNPRIVILEGED
pg1  RUNNING 0         -      10.0.3.2, 10.0.3.247 -    false
pg2  RUNNING 0         -      10.0.3.94            -    false
pg3  RUNNING 0         -      10.0.3.214           -    false

The vip-manager packages are available for Debian testing (bullseye) and unstable, as well as for the upcoming 20.04 LTS Ubuntu release (focal) in the official repositories. For Debian stable (buster), as well as for Ubuntu 19.04 and 19.10, packages are available at apt.postgresql.org maintained by credativ, along with the updated Patroni packages with vip-manager integration.

Switchover Behaviour

In the case of a planned switchover, e.g. when pg2 is to become the new leader:

# patronictl -c /etc/patroni/11-test.yml switchover --master pg1 --candidate pg2 --force

Current cluster topology
+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.247 | Leader | running | 1  |           |
| 11-test | pg2    | 10.0.3.94  |        | running | 1  | 0         |
| 11-test | pg3    | 10.0.3.214 |        | running | 1  | 0         |
+---------+--------+------------+--------+---------+----+-----------+
2020-01-19 15:35:32.52642 Successfully switched over to "pg2"
+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.247 |        | stopped |    | unknown   |
| 11-test | pg2    | 10.0.3.94  | Leader | running | 1  |           |
| 11-test | pg3    | 10.0.3.214 |        | running | 1  | 0         |
+---------+--------+------------+--------+---------+----+-----------+

The VIP has now been moved to the new leader:

NAME STATE   AUTOSTART GROUPS IPV4                IPV6 UNPRIVILEGED
pg1  RUNNING 0         -      10.0.3.247          -    false
pg2  RUNNING 0         -      10.0.3.2, 10.0.3.94 -    false
pg3  RUNNING 0         -      10.0.3.214          -    false

This can also be seen in the journals, both from the old leader:

Jan 19 15:35:31 pg1 patroni[9222]: 2020-01-19 15:35:31,634 INFO: manual failover: demoting myself

Jan 19 15:35:31 pg1 patroni[9222]: 2020-01-19 15:35:31,854 INFO: Leader key released

Jan 19 15:35:32 pg1 vip-manager[9314]: 2020/01/19 15:35:32 IP address 10.0.3.2/24 state is true, desired false

Jan 19 15:35:32 pg1 vip-manager[9314]: 2020/01/19 15:35:32 Removing address 10.0.3.2/24 on eth0

Jan 19 15:35:32 pg1 vip-manager[9314]: 2020/01/19 15:35:32 IP address 10.0.3.2/24 state is false, desired false

As well as from the new leader pg2:

Jan 19 15:35:31 pg2 patroni[9229]: 2020-01-19 15:35:31,881 INFO: promoted self to leader by acquiring session lock

Jan 19 15:35:31 pg2 vip-manager[9292]: 2020/01/19 15:35:31 IP address 10.0.3.2/24 state is false, desired true

Jan 19 15:35:31 pg2 vip-manager[9292]: 2020/01/19 15:35:31 Configuring address 10.0.3.2/24 on eth0

Jan 19 15:35:31 pg2 vip-manager[9292]: 2020/01/19 15:35:31 IP address 10.0.3.2/24 state is true, desired true

Jan 19 15:35:32 pg2 patroni[9229]: 2020-01-19 15:35:32,923 INFO: Lock owner: pg2; I am pg2

As one can see, the VIP is moved within one second.

Updated Ansible Playbook

Our Ansible-Playbook for the automated setup of a three-node cluster on Debian has also been updated and can now configure a VIP if so desired:

# ansible-playbook -i inventory -e vip=10.0.3.2 patroni.yml

Questions and Help

Do you have any questions or need help? Feel free to write to info@credativ.com.

Yesterday, the fourth beta of the upcoming PostgreSQL® major version 12 was released.

Compared to its predecessor PostgreSQL® 11, there are many new features:

- Performance improvements for indexes: B-tree indexes now manage space more efficiently. The REINDEX command now also supports CONCURRENTLY, which was previously only possible when creating new indexes.

- WITH queries can now be inlined into the main query and are thus optimized much better by the planner. Previously, WITH queries were always executed independently.

- The native partitioning was further improved. Foreign keys can now also reference partitioned tables. Maintenance commands such as ATTACH PARTITION no longer require an exclusive table lock.

- The support of page checksums and the tool pg_checksums was further improved, also with substantial cooperation by credativ.

- It is now possible to integrate additional storage engines. The “zheap”, which is still under development, will be based on this, which promises more compact data storage with less bloat.
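
Two of these features can be tried out with a few statements. The sketch below only prints the SQL so that it can be piped into psql; the helper name, the table measurements and the index measurements_ts_idx are placeholders and not taken from any real schema.

```shell
# Hypothetical helper: print example statements for two of the
# PostgreSQL 12 features listed above.
pg12_examples_sql() {
    cat <<'EOF'
-- Rebuild an index without blocking writes to the table
REINDEX INDEX CONCURRENTLY measurements_ts_idx;

-- MATERIALIZED keeps the pre-12 behaviour (CTE computed separately);
-- NOT MATERIALIZED asks the planner to inline it into the main query.
WITH recent AS NOT MATERIALIZED (
    SELECT * FROM measurements WHERE ts > now() - interval '1 day'
)
SELECT count(*) FROM recent;
EOF
}

# Example: pg12_examples_sql | psql -X mydb
```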

Of course, PostgreSQL® 12 will be tested using sqlsmith, the SQL “fuzzer” from our colleague Andreas Seltenreich. Numerous bugs in different PostgreSQL® versions were found with sqlsmith by using randomly generated SQL queries.

Debian and Ubuntu packages for PostgreSQL® 12 are going to be published on apt.postgresql.org with credativ’s help. This work will be handled by our colleague Christoph Berg.

The release of PostgreSQL® 12 is expected in the coming weeks.

Patroni is a PostgreSQL high availability solution with a focus on containers and Kubernetes. Until recently, the available Debian packages had to be configured manually and did not integrate well with the rest of the distribution. For the upcoming Debian 10 “Buster” release, the Patroni packages have been integrated into Debian’s standard PostgreSQL framework by credativ. They now allow for an easy setup of Patroni clusters on Debian or Ubuntu.

Patroni employs a “Distributed Consensus Store” (DCS) like Etcd, Consul or Zookeeper in order to reliably run a leader election and orchestrate automatic failover. It further allows for scheduled switchovers and easy cluster-wide changes to the configuration. Finally, it provides a REST interface that can be used together with HAProxy in order to build a load balancing solution. Due to these advantages Patroni has gradually replaced Pacemaker as the go-to open-source project for PostgreSQL high availability.

However, many of our customers run PostgreSQL on Debian or Ubuntu systems, and so far Patroni did not integrate well into those. For example, it did not use the postgresql-common framework and its instances were not displayed in pg_lsclusters output as usual.

Integration into Debian

In a collaboration with Patroni lead developer Alexander Kukushkin from Zalando, the Debian Patroni package has been integrated into the postgresql-common framework to a large extent over the last months. This required changes both in Patroni itself and in additional programs in the Debian package. The current version 1.5.5 of Patroni contains all these changes and is now available in Debian “Buster” (testing) for setting up Patroni clusters.

The packages are also available on apt.postgresql.org and thus installable on Debian 9 “Stretch” and Ubuntu 18.04 “Bionic Beaver” LTS for any PostgreSQL version from 9.4 to 11.

The most important part of the integration is the automatic generation of a suitable Patroni configuration with the pg_createconfig_patroni command. It is run similarly to pg_createcluster, with the desired PostgreSQL major version and the instance name as parameters:

pg_createconfig_patroni 11 test

This invocation creates a file /etc/patroni/11-test.yml, using the DCS configuration from /etc/patroni/dcs.yml which has to be adjusted according to the local setup. The rest of the configuration is taken from the template /etc/patroni/config.yml.in which is usable in itself but can be customized by the user according to their needs. Afterwards the Patroni instance is started via systemd similar to regular PostgreSQL instances:

systemctl start patroni@11-test
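
What goes into the DCS configuration depends on the DCS in use. As a minimal sketch, assuming a single etcd endpoint, the section could be generated like this. The helper name and the address 10.0.3.1:2379 are placeholders; the etcd/host keys follow Patroni’s configuration format.

```shell
# Hypothetical helper: print a minimal DCS section for Patroni that
# points at a single etcd endpoint given as host:port.
print_etcd_dcs() {
    printf 'etcd:\n  host: %s\n' "$1"
}

# Example:
#   print_etcd_dcs 10.0.3.1:2379 >dcs.yml
```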

A simple 3-node Patroni cluster can be created and started with the following few commands, where the nodes pg1, pg2 and pg3 are considered to be hostnames and the local file dcs.yml contains the DCS configuration:

for i in pg1 pg2 pg3; do ssh $i 'apt -y install postgresql-common'; done

for i in pg1 pg2 pg3; do ssh $i 'sed -i "s/^#create_main_cluster = true/create_main_cluster = false/" /etc/postgresql-common/createcluster.conf'; done

for i in pg1 pg2 pg3; do ssh $i 'apt -y install patroni postgresql'; done

for i in pg1 pg2 pg3; do scp ./dcs.yml $i:/etc/patroni; done

for i in pg1 pg2 pg3; do ssh $i 'pg_createconfig_patroni 11 test && systemctl start patroni@11-test'; done

Afterwards, you can get the state of the Patroni cluster via

ssh pg1 'patronictl -c /etc/patroni/11-test.yml list'

+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.111 | Leader | running | 1  |           |
| 11-test | pg2    | 10.0.3.41  |        | stopped |    | unknown   |
| 11-test | pg3    | 10.0.3.46  |        | stopped |    | unknown   |
+---------+--------+------------+--------+---------+----+-----------+

Leader election has happened and pg1 has become the primary. It created its instance with the Debian-specific pg_createcluster_patroni program that runs pg_createcluster in the background. Then the two other nodes clone from the leader using the pg_clonecluster_patroni program which sets up an instance using pg_createcluster and then runs pg_basebackup from the primary. After that, all nodes are up and running:

+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.111 | Leader | running | 1  | 0         |
| 11-test | pg2    | 10.0.3.41  |        | running | 1  | 0         |
| 11-test | pg3    | 10.0.3.46  |        | running | 1  | 0         |
+---------+--------+------------+--------+---------+----+-----------+

The well-known Debian postgresql-common commands work as well:

ssh pg1 'pg_lsclusters'

Ver Cluster Port Status Owner    Data directory              Log file
11  test    5432 online postgres /var/lib/postgresql/11/test /var/log/postgresql/postgresql-11-test.log

Failover Behaviour

If the primary is abruptly shut down, its leader token will expire after a while and Patroni will eventually initiate failover and a new leader election:

+---------+--------+-----------+------+---------+----+-----------+
| Cluster | Member | Host      | Role | State   | TL | Lag in MB |
+---------+--------+-----------+------+---------+----+-----------+
| 11-test | pg2    | 10.0.3.41 |      | running | 1  | 0         |
| 11-test | pg3    | 10.0.3.46 |      | running | 1  | 0         |
+---------+--------+-----------+------+---------+----+-----------+

[...]

+---------+--------+-----------+--------+---------+----+-----------+
| Cluster | Member | Host      | Role   | State   | TL | Lag in MB |
+---------+--------+-----------+--------+---------+----+-----------+
| 11-test | pg2    | 10.0.3.41 | Leader | running | 2  | 0         |
| 11-test | pg3    | 10.0.3.46 |        | running | 1  | 0         |
+---------+--------+-----------+--------+---------+----+-----------+

[...]

+---------+--------+-----------+--------+---------+----+-----------+
| Cluster | Member | Host      | Role   | State   | TL | Lag in MB |
+---------+--------+-----------+--------+---------+----+-----------+
| 11-test | pg2    | 10.0.3.41 | Leader | running | 2  | 0         |
| 11-test | pg3    | 10.0.3.46 |        | running | 2  | 0         |
+---------+--------+-----------+--------+---------+----+-----------+

The old primary will rejoin the cluster as standby once it is restarted:

+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.111 |        | running |    | unknown   |
| 11-test | pg2    | 10.0.3.41  | Leader | running | 2  | 0         |
| 11-test | pg3    | 10.0.3.46  |        | running | 2  | 0         |
+---------+--------+------------+--------+---------+----+-----------+

[...]

+---------+--------+------------+--------+---------+----+-----------+
| Cluster | Member | Host       | Role   | State   | TL | Lag in MB |
+---------+--------+------------+--------+---------+----+-----------+
| 11-test | pg1    | 10.0.3.111 |        | running | 2  | 0         |
| 11-test | pg2    | 10.0.3.41  | Leader | running | 2  | 0         |
| 11-test | pg3    | 10.0.3.46  |        | running | 2  | 0         |
+---------+--------+------------+--------+---------+----+-----------+

If a clean rejoin is not possible due to additional transactions on the old timeline, the old primary gets re-cloned from the current leader. In case the data is too large for a quick re-clone, pg_rewind can be used. In this case, a password needs to be set for the postgres user, and regular database connections (as opposed to replication connections) need to be allowed between the cluster nodes.

Creation of additional Instances

It is also possible to create further clusters with pg_createconfig_patroni. One can either assign a PostgreSQL port explicitly via the --port option, or let pg_createconfig_patroni assign the next free port, as is known from pg_createcluster:

for i in pg1 pg2 pg3; do ssh $i 'pg_createconfig_patroni 11 test2 && systemctl start patroni@11-test2'; done

ssh pg1 'patronictl -c /etc/patroni/11-test2.yml list'

+----------+--------+-----------------+--------+---------+----+-----------+
| Cluster  | Member | Host            | Role   | State   | TL | Lag in MB |
+----------+--------+-----------------+--------+---------+----+-----------+
| 11-test2 | pg1    | 10.0.3.111:5433 | Leader | running | 1  | 0         |
| 11-test2 | pg2    | 10.0.3.41:5433  |        | running | 1  | 0         |
| 11-test2 | pg3    | 10.0.3.46:5433  |        | running | 1  | 0         |
+----------+--------+-----------------+--------+---------+----+-----------+

Ansible Playbook

In order to easily deploy a 3-node Patroni cluster we have created an Ansible playbook on GitHub. It automates the installation and configuration of PostgreSQL and Patroni on the three nodes, as well as the DCS server on a fourth node.

Questions and Help

Do you have any questions or need help? Feel free to write to info@credativ.com.

Last weekend saw the start of DebCamp, the pre-event of DebConf, the largest Debian conference worldwide.

This year’s DebConf will take place in Taiwan from July 29th to August 5th.

After taking part in this year’s MiniDebConf in Hamburg, we are now also going to visit Taiwan to attend DebConf, not least because credativ is sponsoring the event this year.

Even though the flights take 16 hours on average, our colleagues are already looking forward to the trip, and we are very curious to see what the event has in store for its visitors.

Lectures, Talks and BoFs

Meanwhile the packed lecture program has been published. There will be around 90 presentations in 3 tracks on the following 8 topics:

- Debian blends

- Cloud and containers

- Debian in science

- Embedded

- Packaging, policy, and Debian infrastructure

- Security

- Social context

- Systems administration, automation and orchestration

Many of the presentations can even be streamed. The links are as follows:

- https://debconf18.debconf.org/schedule/venue/1/

- https://debconf18.debconf.org/schedule/venue/2/

- https://debconf18.debconf.org/schedule/venue/3/

We are especially looking forward to the SPI BoF (Software in the Public Interest – Birds of a Feather) and the DSA BoF (Debian System Administrators – Birds of a Feather), in which our colleague and Debian sysadmin Martin Zobel-Helas participates.

Job Fair

For us at credativ, the Job Fair is of particular importance. Here we meet potential new colleagues and hopefully many visitors interested in our company. If you want to have a chat with our colleagues: Noël, Sven and Martin will be available for you at the Job Fair and on all other days of the event.

In addition to exciting discussions, we of course hope for one or two interesting applications that we will receive afterwards.

The Job Fair takes place one day before DebConf18, on 28 July.

Daytrip and other events

Of course there will also be the “Daytrip” this year, which will show the conference visitors the surroundings and the country. Participants have a whole range of options to choose from. Whether you want to explore the city, go hiking, or hold a Taiwanese tea ceremony, there should be something for everyone.

In addition to the daily breakfast, lunch, coffee break and dinner, there will be a cheese-and-wine party on Monday 30 July and a conference dinner on Thursday 2 August. Our colleagues will attend both events and hopefully have some interesting conversations.

Debian and credativ

The free operating system Debian is one of the most popular Linux distributions and has thousands of users worldwide.

Besides Martin Zobel-Helas, credativ employs many members of the Debian project. Our managing director, Dr. Michael Meskes, was also actively involved in Debian even before credativ GmbH was founded in 1999. This has resulted in a close and long-standing bond between credativ and the Debian project and its community.

This article was originally written by Philip Haas.

Debian 9 “Stretch”, the latest version of Debian, is about to be released, and after the full freeze on February 5th everyone is trying their best to fix the last remaining bugs.

Upon entering the final phase of development in February, the testing version was “frozen” so that no more packages could be added or removed without the approval of the release team.

However, Stretch still has some bugs that need to be resolved before the release date, especially the so-called release-critical (RC) bugs. For this purpose, numerous Debian developers host meetups worldwide.

These meetups are a long-standing tradition and are lovingly called “Bug Squashing Parties”. Despite the cute name, these events usually turn out to be some of the most focused, intense and hard-working days in the life cycle of a new Debian version. Pressured by the upcoming release date, everyone gets together to get rid of the nasty release-critical bugs and focus on unfinished packages.

This weekend, from the 17th to the 19th of March, the Debian developers from credativ are hosting a Bug Squashing Party in the German Open Source Support Center in Mönchengladbach.

The Open Source Support Center probably employs the largest number of Debian developers in one place in Europe. Therefore credativ GmbH is providing the location and technical infrastructure for everyone who decides to join the Bug Squashing Party.

We hope that this year’s meeting is going to be as successful as in the previous years. Developers from all neighbouring countries took part in past events and some even found their future employer.

Coordinating the event are: Martin Zobel-Helas “zobel” (Debian system administrator) and Alexander Wirt “formorer” (Debian Quality Assurance).

If you would like to participate, feel free to sign up!

We are looking forward to your visit.

Here is the announcement on the mailing-list:

https://lists.debian.org/debian-devel-announce/2017/02/msg00006.html

Here is the entry in the Debian wiki:

https://wiki.debian.org/BSP/2017/03/de/credativ

This article was originally written by Philip Haas.

In this post we describe how to integrate Icinga2 with Graphite and Grafana on Debian stable (jessie).

What is Graphite?

Graphite stores performance data over a configurable period. Via a defined interface, services can send metrics to Graphite, which are then stored for the designated period. Examples of such metrics are the CPU utilization or the traffic of a web server. Graphs can be generated from the various metrics via Graphite’s integrated web interface. This allows changes in values to be observed across different time periods. A good example of where this type of trend analysis is useful is monitoring the fill level of a hard drive. With a trend graph, it is easy to see how fast the used space grows and to anticipate when the storage will need to be replaced.
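
To give an idea of what such an interface looks like, here is a minimal sketch of Graphite’s plaintext protocol, in which each data point is one line consisting of metric path, value and Unix timestamp. The helper name and the metric name are made up; the receiving daemon (Carbon, see below) is assumed to listen on localhost on its default plaintext port 2003.

```shell
# Hypothetical helper: format one data point in the plaintext protocol:
# "<metric path> <value> <unix timestamp>"
# The timestamp defaults to the current time.
carbon_line() {
    printf '%s %s %s\n' "$1" "$2" "${3:-$(date +%s)}"
}

# Example (nc options differ between netcat variants):
#   carbon_line test.webserver.traffic 1234 | nc -w 1 localhost 2003
```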

What is Grafana?

Graphite does have its own web interface, but it is neither attractive nor flexible. This is where Grafana comes in.

Grafana is a frontend for various metric stores, supporting Graphite, InfluxDB and OpenTSDB, among others. Grafana offers an intuitive interface through which you can create graphs to represent the metrics, and a variety of functions to optimise their appearance and presentation. The graphs can then be combined into dashboards; through parameterization, you can also opt to display the graphs of a specific host only.

Installation of Icinga2

Here we describe only the parts of the installation that are essential for Graphite. Current Icinga2 packages for Debian can be obtained directly from the Debmon project.

The Debmon project provides up-to-date versions of various monitoring tools for the different Debian releases, built by the official Debian package maintainers. To include these packages, use the following commands:

# add debmon
cat <<EOF >/etc/apt/sources.list.d/debmon.list
deb http://debmon.org/debmon debmon-jessie main
EOF

# add debmon key
wget -O - http://debmon.org/debmon/repo.key 2>/dev/null | apt-key add -

# update repos
apt-get update

Finally we can install Icinga2:

apt-get install icinga2

Installation of Graphite and Graphite-Web

Once Icinga2 is installed, Graphite and Graphite-Web can also be installed.

# install packages for icinga2 and graphite-web and carbon
apt-get install icinga2 graphite-web graphite-carbon libapache2-mod-wsgi apache2

Configuration of Icinga2 with Graphite

Icinga2 must be configured so that all defined metrics are exported to Graphite. The Graphite component that receives this data is called “Carbon”. In our sample installation, Carbon runs on the same host as Icinga2 and uses the default port, meaning no further configuration of Icinga2 is necessary; it is enough to enable the export.

To do this, simply enter the command:

icinga2 feature enable graphite

Subsequently Icinga2 must be restarted:

service icinga2 restart

If the Carbon server is running on a different host or a different port, the Icinga2 configuration file /etc/icinga2/features-enabled/graphite.conf should be adjusted. Details can be found in the Icinga2 documentation.
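
For such a setup, the relevant object in that file is a GraphiteWriter with the attributes host and port. The following sketch prints such an object; the helper name and the host name graphite.example.com are placeholders. It is meant as a guide for editing the object in the packaged file, not as a replacement for the whole file, which may contain further statements.

```shell
# Hypothetical helper: print a GraphiteWriter object for Icinga2 that
# sends metrics to the given Carbon host ($1) and port ($2, default 2003).
print_graphite_writer() {
    cat <<EOF
object GraphiteWriter "graphite" {
  host = "$1"
  port = ${2:-2003}
}
EOF
}

# Example:
#   print_graphite_writer graphite.example.com 2003
```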

If the configuration was successful, then after a short time a number of files should appear in “/var/lib/graphite/whisper/icinga”. If they don’t, you should take a look at the Icinga2 log file (located in “/var/log/icinga2/icinga2.log”).

Configuration of Graphite-Web

Grafana uses the frontend of Graphite as an interface for the stored metrics of Graphite so correct configuration of Graphite-web is very important. For performance reasons, we operate Graphite-web as a WSGI module. A number of configuration steps are necessary:

- First, we create a user database for Graphite-web. Since we will not have many users, at this point we use SQLite as the backend for our user data. We do this using the following commands which initialize the user database and transfer ownership to the user under which the Web front-end runs:

graphite-manage syncdb

chown _graphite:_graphite /var/lib/graphite/graphite.db

- Then we activate the WSGI module in Apache:

a2enmod wsgi

- For simplicity the web interface should run in a separate virtual host and on its own port. So that Apache listens on this port, we add the line “Listen 8000” to the file “/etc/apache2/ports.conf”.

- The Graphite Debian package already provides a configuration file for Apache, which is fine to use with slight adaptations:

cp /usr/share/graphite-web/apache2-graphite.conf /etc/apache2/sites-available/graphite.conf

In order for the virtual host to also use port 8000, we replace the line:

<VirtualHost *:80>

with:

<VirtualHost *:8000>

- We can now activate the new virtual host via

a2ensite graphite

and restart Apache:

systemctl restart apache2

- You should now be able to reach Graphite-web at http://YOURIP:8000/. If you cannot, the Apache log files in “/var/log/apache2/” can provide valuable information.

Configuration of Grafana

Grafana is not currently included in Debian. However, the author offers an Apt repository from which you can install Grafana. Even if the repository points to Wheezy, the packages should still function under Debian Jessie.

The repository is only accessible via https, so first you need to install https support for apt:

apt-get install apt-transport-https

Next, the repository can be integrated.

# add repo (package for wheezy works on jessie)
cat <<EOF >/etc/apt/sources.list.d/grafana.list
deb https://packagecloud.io/grafana/stable/debian/ wheezy main
EOF

# add key
curl -s https://packagecloud.io/gpg.key | sudo apt-key add -

# update repos
apt-get update

After this, the package can be installed:

apt-get install grafana

For Grafana to run, we still need to enable the service:

systemctl enable grafana-server.service

and start it:

systemctl start grafana-server

Grafana is now accessible at http://YOURIP:3000/. The default user name and password in our example is ‘admin’. This password should, of course, be replaced by a secure password at the earliest opportunity.

Grafana must then be configured so that it uses Graphite as a data source. For simplicity, the configuration is explained in the following screencast:

After setting up Graphite as the data source, we can create our first graph. Here is another short screencast to illustrate this:

Congratulations! You have successfully installed and configured Icinga2, Graphite and Grafana. For subsequent steps, please refer to the documentation for the specific projects:

To read more about credativ’s work with Debian, please read our Debian blogs.

Mass installations of Debian can be simplified considerably with Preseeding and Netboot. Friedrich Weber, a school student on a work experience placement with us at our German office, has looked at the process and captured it in a howto here:

Imagine the following situation: you find yourself with ten to twenty brand new notebooks and the opportunity to install Debian on them and customise them to your own taste. Of course, it would be great fun to perform the Debian installation and configuration manually on each notebook.

This is where Debian Preseed comes into play. The concept is simple and self-explanatory: usually, whoever is doing the installation is faced with a number of questions during the process (e.g. language, partitioning, packages, bootloader, etc.). With Preseed, all of these questions can be answered in advance. Only the questions that Preseed does not answer are still asked by the Debian installer. Ideally, these come up right at the start of the installation: they are the ones whose answers differ from one target system to the next and which the administrator must handle manually. Once they have been dealt with, the installation can be left to run unattended.

Preseed works with a simple configuration file, preseed.cfg. As described above, it contains the answers to the questions asked during installation, in debconf format. The file consists of several lines, each of which defines a debconf configuration option, that is, the answer to a question. For example:

d-i debian-installer/locale string de_DE.UTF-8

The first element of such a line is the name of the package being configured (d-i is an abbreviation of debian-installer here), the second element is the name of the option being set, the third element is the type of the option (here a string), and the rest is the value of the option.

In this example, we set the language to German with UTF-8 encoding. You can put lines like this together yourself, or more simply with the tool debconf-get-selections, which lists the options that are set on the local system.

From this list you can choose your desired settings, adjust them if necessary and copy them into preseed.cfg. Here is an example of preseed.cfg:

d-i debian-installer/locale string de_DE.UTF-8
d-i debian-installer/keymap select de-latin1
d-i console-keymaps-at/keymap select de
d-i languagechooser/language-name-fb select German
d-i countrychooser/country-name select Germany
d-i console-setup/layoutcode string de_DE
d-i clock-setup/utc boolean true
d-i time/zone string Europe/Berlin
d-i clock-setup/ntp boolean true
d-i clock-setup/ntp-server string ntp1
tasksel tasksel/first multiselect standard, desktop, gnome-desktop, laptop
d-i pkgsel/include string openssh-client vim less rsync

In addition to the language and timezone settings, these options also select the tasks and packages to install. This is not yet enough for a completely unattended installation, but it is a good start.

Now on to the question of where Preseed gets its data from. It is possible to use Preseed with CD and DVD images or USB sticks, but it is generally more convenient to use a Debian netboot image: essentially an installer that is started across the network and that can fetch its Preseed configuration over the network as well.

This boot across the network is implemented with PXE and requires a system that can boot from its network card. When the system boots from the network card, it requests an IP address from a DHCP server via broadcast.

This DHCP server transmits not only a suitable IP address, but also the IP address of a so-called boot server. A boot server is a TFTP server that provides a boot loader, with which the administrator can start the desired Debian installer. At the same time, boot options can be used to tell the Debian installer that it should use Preseed and where it can find the Preseed configuration. Here is a snippet of the PXELINUX configuration file pxelinux.cfg/default:

label i386
    kernel debian-installer/i386/linux
    append vga=normal initrd=debian-installer/i386/initrd.gz netcfg/choose_interface=eth0 domain=example.com locale=de_DE debian-installer/country=DE debian-installer/language=de debian-installer/keymap=de-latin1-nodeadkeys console-keymaps-at/keymap=de-latin1-nodeadkeys auto-install/enable=false preseed/url=http://$server/preseed.cfg DEBCONF_DEBUG=5 -- quiet

When the user types i386, the kernel debian-installer/i386/linux (located on the TFTP server) is downloaded and started. A whole set of boot options is passed along with it, because the Debian installer allows debconf options to be given as boot parameters. This is a practical way of telling the installer where on the network it can find the Preseed configuration (preseed/url).
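The DHCP step described earlier is not shown in this Howto. As a rough sketch, and assuming an ISC dhcpd server is used, the relevant part of its configuration could be generated like this. The function name dhcpd_pxe_fragment and all addresses are example values invented for illustration; the file name pxelinux.0 assumes that the boot loader sits in the root directory of the TFTP server.

```shell
#!/bin/sh
# Hypothetical helper: print an ISC dhcpd subnet declaration that hands out
# addresses and points PXE clients at the given TFTP boot server.
dhcpd_pxe_fragment() {
    boot_server=$1
    cat <<EOF
subnet 192.168.1.0 netmask 255.255.255.0 {
    range 192.168.1.100 192.168.1.200;
    next-server $boot_server;   # IP address of the TFTP boot server
    filename "pxelinux.0";      # boot loader, relative to the TFTP root
}
EOF
}

# Example call with an assumed boot server address:
dhcpd_pxe_fragment 192.168.1.2
```

The output would be appended to the configuration file of the DHCP server, after which the server has to be restarted.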

To download this Preseed configuration, the installer must first bring up the network. The options for this are passed as boot parameters as well (the hostname option is deliberately omitted here, as every target system has its own hostname). auto-install/enable would delay the language setup until after the network configuration, so that these settings could be read from preseed.cfg.

That is not necessary here (hence auto-install/enable=false), as the language settings are also passed as kernel options, so that the network configuration is carried out in German as well. The examples and configuration excerpts shown here are, of course, abridged. Even so, this blog post should have given you a glimpse into the concept of Preseed in connection with netboot. Finally, here is a complete version of preseed.cfg:

d-i debian-installer/locale string de_DE.UTF-8
d-i debian-installer/keymap select de-latin1
d-i console-keymaps-at/keymap select de
d-i languagechooser/language-name-fb select German
d-i countrychooser/country-name select Germany
d-i console-setup/layoutcode string de_DE
# Network
d-i netcfg/choose_interface select auto
d-i netcfg/get_hostname string debian
d-i netcfg/get_domain string example.com
# Package mirror
d-i mirror/protocol string http
d-i mirror/country string manual
d-i mirror/http/hostname string debian.example.com
d-i mirror/http/directory string /debian
d-i mirror/http/proxy string
d-i mirror/suite string lenny
# Timezone
d-i clock-setup/utc boolean true
d-i time/zone string Europe/Berlin
d-i clock-setup/ntp boolean true
d-i clock-setup/ntp-server string ntp.example.com
# Root account
d-i passwd/make-user boolean false
d-i passwd/root-password password secretpassword
d-i passwd/root-password-again password secretpassword
# Further APT options
d-i apt-setup/non-free boolean false
d-i apt-setup/contrib boolean false
d-i apt-setup/security-updates boolean true
d-i apt-setup/local0/source boolean false
d-i apt-setup/local1/source boolean false
d-i apt-setup/local2/source boolean false
# Tasks
tasksel tasksel/first multiselect standard, desktop
d-i pkgsel/include string openssh-client vim less rsync
d-i pkgsel/upgrade select safe-upgrade
# Popularity contest
popularity-contest popularity-contest/participate boolean true
# Command to be run after the installation. `in-target` means that the
# command that follows is run in the installed environment rather than in
# the installation environment.
# Here http://$server/skript.sh is downloaded to /tmp, made executable and run.
d-i preseed/late_command string in-target wget -P /tmp/ http://$server/skript.sh; in-target chmod +x /tmp/skript.sh; in-target /tmp/skript.sh
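Before such a file is published on the web server, a quick sanity check can catch lines that were damaged by copy and paste. The following is a minimal sketch, not an official tool: check_preseed is a made-up name, and the check assumes that the file does not use backslash line continuations. It only verifies that every line which is not a comment has at least a package name, an option name and a type. The value may be empty, as with mirror/http/proxy above.

```shell
#!/bin/sh
# Hypothetical helper: report lines of a preseed file that lack the minimum
# of three fields (owner, option name, type). Exit status 1 if any are found.
check_preseed() {
    awk '
        /^[ \t]*(#|$)/ { next }            # skip comments and blank lines
        NF < 3 {
            printf "line %d: too few fields: %s\n", NR, $0
            bad = 1
        }
        END { exit bad }
    ' "$1"
}

# Example call (assumes a preseed.cfg in the current directory):
#   check_preseed preseed.cfg && echo "preseed.cfg looks plausible"
```

On a Debian system, debconf-set-selections -c preseed.cfg performs a more thorough format check of the file.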

All Howtos of this blog are grouped together in the Howto category – and if you happen to be looking for Support and Services for Debian you’ve come to the right place at credativ.

This post was originally written by Irenie White.